Keifer Lee

SO-NeRF: Active View Planning for NeRF using Surrogate Objectives

Dec 06, 2023

Abstract: Despite the great success of Neural Radiance Fields (NeRF), its data-gathering process remains vague, with only a general rule of thumb of sampling as densely as possible. The lack of understanding of what actually constitutes good views for NeRF makes it difficult to actively plan a sequence of views that yields the maximal reconstruction quality. We propose Surrogate Objectives for Active Radiance Fields (SOAR), a set of interpretable functions that evaluate the goodness of views using geometric and photometric visual cues: surface coverage, geometric complexity, textural complexity, and ray diversity. Moreover, by learning to infer the SOAR scores with a deep network, SOARNet, we are able to select views in mere seconds instead of hours, without the need for prior visits to all the candidate views or for training any radiance field during such planning. Our experiments show that SOARNet outperforms the baselines with a $\sim$80x speed-up while achieving better or comparable reconstruction quality. Finally, we show that SOAR is model-agnostic and thus generalizes from fully neural-implicit to fully explicit approaches.
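
To make the idea of score-based view selection concrete, here is a minimal sketch in Python. It is not the authors' method: the `ViewScores` class, the `select_views` function, the unweighted sum, and the assumption that each score is already normalised to [0, 1] are all hypothetical, introduced only for illustration. It also ranks views independently, whereas a real planner would likely re-score candidates after each pick.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ViewScores:
    """Hypothetical per-view surrogate scores, each assumed to lie in [0, 1]."""
    surface_coverage: float
    geometric_complexity: float
    textural_complexity: float
    ray_diversity: float

    def total(self) -> float:
        # Assumption: an unweighted sum. The paper may combine the cues differently.
        return (self.surface_coverage + self.geometric_complexity
                + self.textural_complexity + self.ray_diversity)


def select_views(candidates: dict[str, ViewScores], k: int) -> list[str]:
    """Return the names of the k candidate views with the highest total score."""
    ranked = sorted(candidates, key=lambda name: candidates[name].total(), reverse=True)
    return ranked[:k]


if __name__ == "__main__":
    # Made-up scores for three made-up candidate views.
    candidates = {
        "front": ViewScores(0.9, 0.4, 0.5, 0.2),
        "top": ViewScores(0.3, 0.2, 0.1, 0.1),
        "side": ViewScores(0.6, 0.7, 0.8, 0.9),
    }
    print(select_views(candidates, k=2))
```

In the abstract's setting the scores would come from a learned predictor rather than being supplied by hand, which is what removes the need to visit every candidate view first.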

BOBBY2: Buffer Based Robust High-Speed Object Tracking

Oct 18, 2019

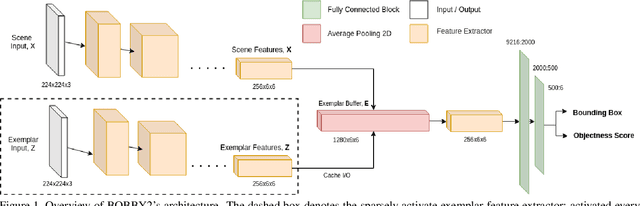

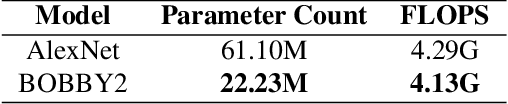

Abstract: This work introduces BOBBY2, a novel high-speed single-object tracker that is robust against non-semantic distractor exemplars. It incorporates a novel exemplar buffer module that sparsely caches the target's appearance across time, enabling it to adapt to potential target deformation. For training, an augmented ImageNet-VID dataset was used in conjunction with the one-cycle policy, allowing the model to converge with less than 2 epochs' worth of data. For validation, the model was benchmarked on the GOT-10k dataset and on an additional small but challenging custom UAV dataset collected with the TU-3 UAV. We demonstrate that the exemplar buffer provides redundancy in case of unintended target drift, a desirable trait in any medium- to long-term tracking. Even when the buffer is predominantly filled with distractors instead of valid exemplars, BOBBY2 maintains a near-optimal level of accuracy. BOBBY2 achieves a very competitive result on the GOT-10k dataset and, to a lesser degree, on the challenging custom TU-3 dataset, without fine-tuning, demonstrating its generalizability. In terms of speed, BOBBY2 uses a stripped-down AlexNet as its feature extractor, with 63% fewer parameters than a vanilla AlexNet, and is thus able to run at a competitive 85 FPS.
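
The sparse caching behaviour described above can be illustrated with a small Python sketch. This is not the BOBBY2 implementation: the `ExemplarBuffer` class, its `capacity` and `interval` parameters, and the rule of caching every `interval`-th frame are assumptions chosen only to show one way a buffer could sample the target's appearance sparsely over time.

```python
from collections import deque


class ExemplarBuffer:
    """Hypothetical fixed-size buffer that keeps a sparse history of target exemplars."""

    def __init__(self, capacity: int = 4, interval: int = 10):
        # Assumption: cache one exemplar every `interval` frames.
        self.interval = interval
        # A deque with maxlen drops the oldest exemplar once capacity is reached.
        self._items = deque(maxlen=capacity)

    def update(self, frame_index: int, exemplar) -> bool:
        """Cache the exemplar on every `interval`-th frame; return True if cached."""
        if frame_index % self.interval != 0:
            return False
        self._items.append(exemplar)
        return True

    def exemplars(self) -> list:
        """Return the cached exemplars, oldest first."""
        return list(self._items)


if __name__ == "__main__":
    buffer = ExemplarBuffer(capacity=3, interval=5)
    for i in range(26):
        # Strings stand in for image crops of the tracked target.
        buffer.update(i, f"crop_{i}")
    print(buffer.exemplars())
```

Keeping several exemplars spread over time, rather than only the latest one, is what would let a tracker fall back on older appearances if a recent entry turns out to be a distractor.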
