Keerthi Selvaraj

Neural Optimization with Adaptive Heuristics for Intelligent Marketing System

May 17, 2024

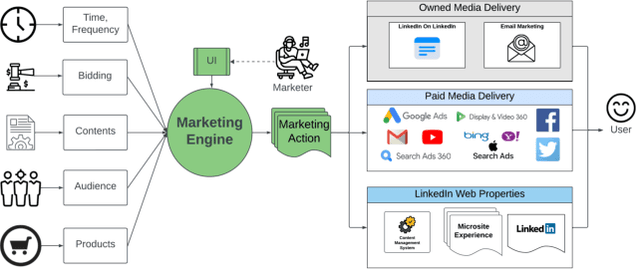

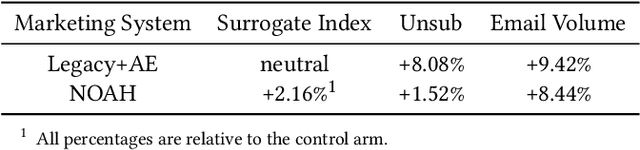

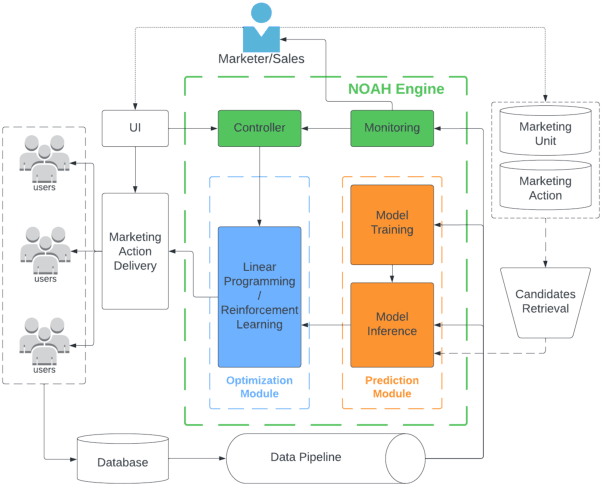

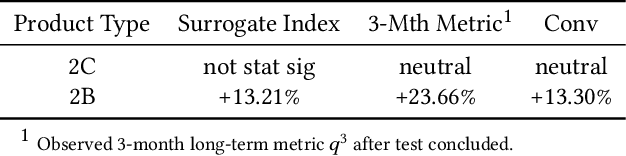

Abstract: Computational marketing has become increasingly important in today's digital world, facing challenges such as massive heterogeneous data, multi-channel customer journeys, and limited marketing budgets. In this paper, we propose a general framework for marketing AI systems, the Neural Optimization with Adaptive Heuristics (NOAH) framework. NOAH is the first general framework for marketing optimization that considers both to-business (2B) and to-consumer (2C) products, as well as both owned and paid channels. We describe key modules of the NOAH framework, including prediction, optimization, and adaptive heuristics, providing examples for bidding and content optimization. We then detail the successful application of NOAH to LinkedIn's email marketing system, showcasing significant wins over the legacy ranking system. Additionally, we share details and insights that are broadly useful, particularly on: (i) addressing delayed feedback with lifetime value, (ii) performing large-scale linear programming with randomization, (iii) improving retrieval with audience expansion, (iv) reducing signal dilution in targeting tests, and (v) handling zero-inflated heavy-tail metrics in statistical testing.

Learning Semantically Coherent and Reusable Kernels in Convolution Neural Nets for Sentence Classification

Oct 10, 2016

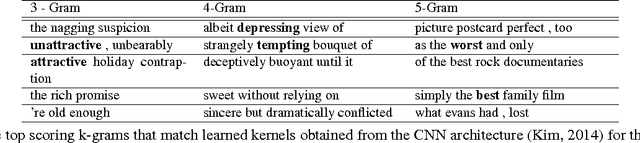

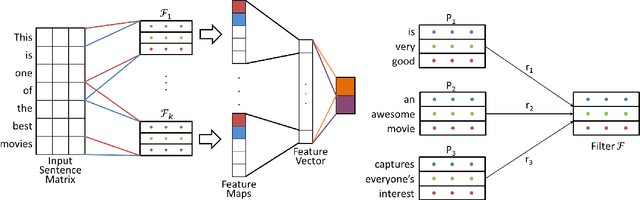

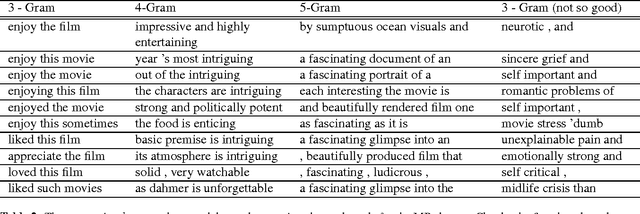

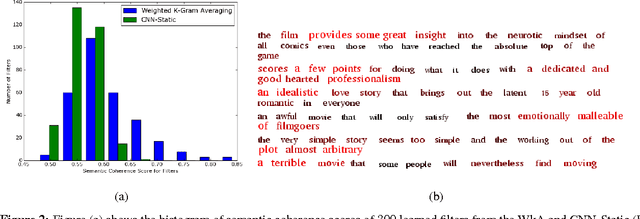

Abstract: State-of-the-art CNN models perform well on sentence classification tasks. The purpose of this work is to empirically study desirable properties of CNNs in these tasks, such as semantic coherence, attention mechanisms, and reusability. Semantically coherent kernels are preferable because they are far more interpretable when explaining the decisions of the learned CNN model. We observe that the learned kernels do not have semantic coherence. Motivated by this observation, we propose to learn kernels with semantic coherence using a clustering scheme combined with Word2Vec representations and domain knowledge such as SentiWordNet. We suggest a technique to visualize the attention mechanism of CNNs for the purpose of decision explanation. The reusability property enables kernels learned on one problem to be used in another problem. This helps in efficient learning, as only a few additional domain-specific filters may have to be learned. We demonstrate the efficacy of our core ideas of learning semantically coherent kernels and leveraging reusable kernels for efficient learning on several benchmark datasets. Experimental results show the usefulness of our approach, which achieves performance close to that of state-of-the-art methods while retaining the semantic coherence and reusability properties.
