Kaushik Manchella

PassGoodPool: Joint Passengers and Goods Fleet Management with Reinforcement Learning aided Pricing, Matching, and Route Planning

Nov 17, 2020

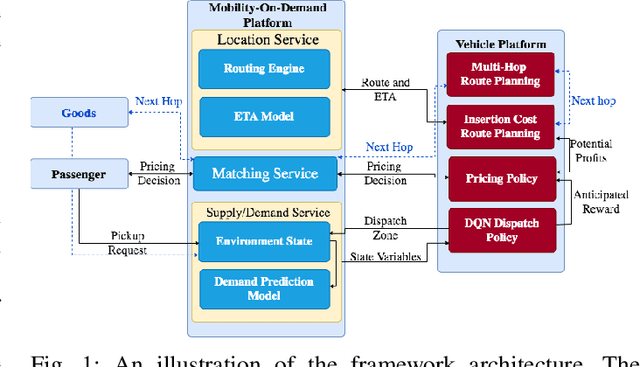

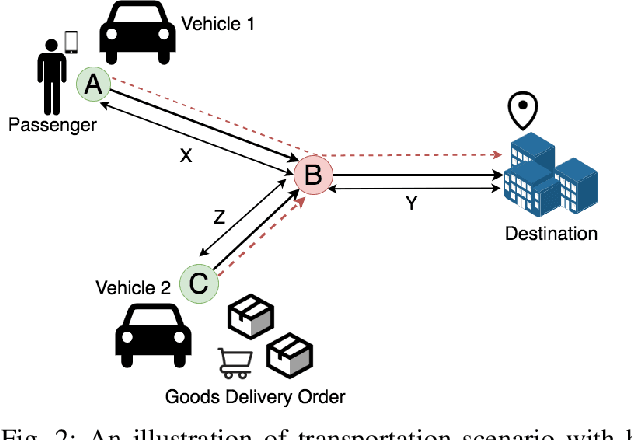

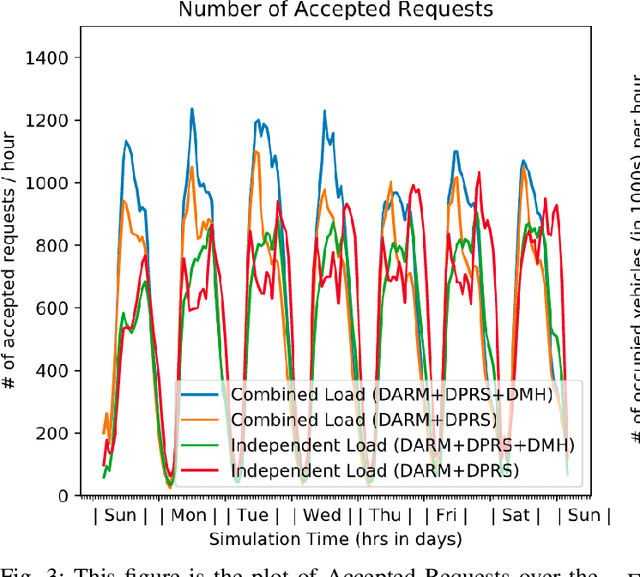

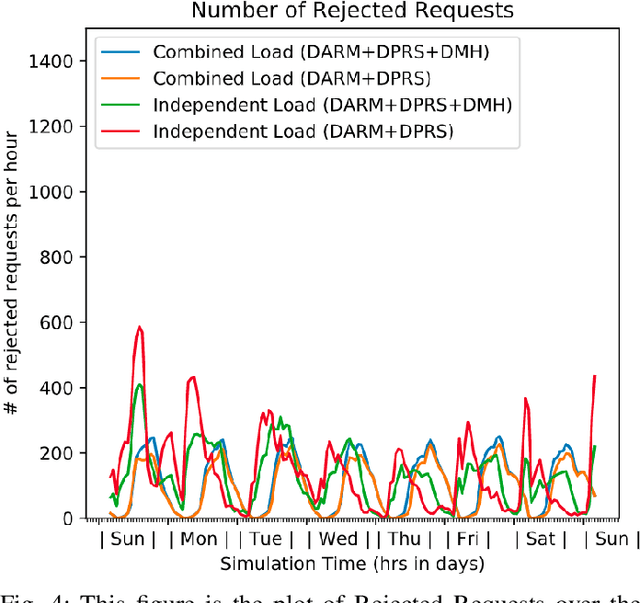

Abstract: In this paper, we present a dynamic, demand-aware, and pricing-based matching and route planning framework that allows efficient pooling of multiple passengers and goods in each vehicle. This approach includes the flexibility to transfer goods through multiple hops from source to destination as well as to pool passengers. The key components of the proposed approach include (i) pricing offered by vehicles to passengers based on the insertion cost, with matching determined by each passenger's acceptance or rejection, (ii) matching of goods to vehicles and multi-hop routing of goods, (iii) route planning for the vehicles to pick up and drop off passengers and goods, (iv) dispatching idle vehicles to areas of anticipated high passenger and goods demand using Deep Reinforcement Learning, and (v) allowing for distributed inference at each vehicle while collectively optimizing fleet objectives. Our proposed framework can be deployed independently within each vehicle, as this minimizes the computational costs associated with the growth of distributed systems and democratizes decision-making to each individual vehicle. The proposed framework is validated in a simulated environment, where we leverage realistic delivery datasets such as the New York City Taxi public dataset and Google Maps traffic data from delivery-offering businesses. Simulations on a variety of vehicle types, goods, and passenger utility functions show the effectiveness of our approach as compared to baselines that do not consider combined load transportation or dynamic multi-hop route planning. Our proposed method showed improvements over the next best baseline in various aspects, including a 15% increase in fleet utilization and a 20% increase in average vehicle profits.
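The abstract describes pricing that depends on the insertion cost of a new passenger. As a rough illustration only, the sketch below computes one common notion of insertion cost: the smallest extra route length incurred by adding a pickup and a drop-off to a vehicle's existing stop sequence. The function names (`route_length`, `insertion_cost`, `quote_price`), the straight-line distance metric, and the linear fare with `base_fare` and `rate_per_unit` are all assumptions made for this example; the abstract does not specify the paper's actual cost or pricing formulas.

```python
import math


def route_length(route):
    """Total straight-line length of a route given as a list of (x, y) stops."""
    return sum(math.dist(a, b) for a, b in zip(route, route[1:]))


def insertion_cost(route, pickup, dropoff):
    """Smallest added route length from inserting pickup before dropoff.

    route[0] is assumed to be the vehicle's current position, so new
    stops may only be inserted after it.
    """
    base = route_length(route)
    best = math.inf
    n = len(route)
    for i in range(1, n + 1):          # position of the pickup
        for j in range(i, n + 1):      # dropoff never precedes the pickup
            candidate = (
                route[:i] + [pickup] + route[i:j] + [dropoff] + route[j:]
            )
            best = min(best, route_length(candidate) - base)
    return best


def quote_price(cost, base_fare=2.0, rate_per_unit=1.5):
    """Hypothetical linear fare: a fixed fee plus a rate on the detour."""
    return base_fare + rate_per_unit * cost


# A vehicle heading from (0, 0) to (10, 0) is asked to carry a passenger
# from (10, 0) to (10, 5); the cheapest insertion appends the new leg.
current_route = [(0.0, 0.0), (10.0, 0.0)]
detour = insertion_cost(current_route, (10.0, 0.0), (10.0, 5.0))
price = quote_price(detour)
```

In a setting like the one the abstract outlines, the passenger would then accept or reject the quoted price, and that decision would determine the match. The exhaustive search over insertion positions is quadratic in the number of stops, which is acceptable for the short routes of a single vehicle.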

FlexPool: A Distributed Model-Free Deep Reinforcement Learning Algorithm for Joint Passengers & Goods Transportation

Jul 27, 2020

Abstract: The growth in online goods delivery is causing a dramatic surge in urban vehicle traffic from last-mile deliveries. At the same time, ride-sharing has been on the rise with the success of ride-sharing platforms and increased research on using autonomous vehicle technologies for routing and matching. The future of urban mobility for passengers and goods relies on leveraging new methods that minimize the operational costs and environmental footprints of transportation systems. This paper considers combining passenger transportation with goods delivery to improve vehicle-based transportation. Even though the problem has been studied with a defined dynamics model of the transportation system environment, this paper considers a model-free approach that has been demonstrated to be adaptable to new or erratic environment dynamics. We propose FlexPool, a distributed model-free deep reinforcement learning algorithm that jointly serves passengers & goods workloads by learning optimal dispatch policies from its interaction with the environment. The proposed algorithm pools passengers for a ride-sharing service and delivers goods using a multi-hop transit method. These flexibilities decrease the fleet's operational cost and environmental footprint while maintaining service levels for passengers and goods. Through simulations on a realistic multi-agent urban mobility platform, we demonstrate that FlexPool outperforms other model-free settings in serving the demands from passengers & goods. FlexPool achieves 30% higher fleet utilization and 35% higher fuel efficiency in comparison to (i) model-free approaches where vehicles transport a combination of passengers & goods without the use of multi-hop transit, and (ii) model-free approaches where vehicles exclusively transport either passengers or goods.
