Katherine Deck

A Physics-Constrained Neural Differential Equation Framework for Data-Driven Snowpack Simulation

Dec 03, 2024

Abstract: This paper presents a physics-constrained neural differential equation framework for parameterization, and employs it to model the time evolution of seasonal snow depth given hydrometeorological forcings. When trained on data from multiple SNOTEL sites, the parameterization predicts daily snow depth with under 9% median error and Nash-Sutcliffe efficiencies over 0.94 across a wide variety of snow climates. The parameterization also generalizes to new sites not seen during training, which is not often true for calibrated snow models. Requiring the parameterization to predict snow water equivalent in addition to snow depth only increases error to ~12%. The structure of the approach guarantees the satisfaction of physical constraints, enables these constraints during model training, and allows modeling at different temporal resolutions without additional retraining of the parameterization. These benefits hold potential in climate modeling, and could extend to other dynamical systems with physical constraints.
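For readers unfamiliar with the Nash-Sutcliffe efficiency quoted above, the following is a minimal sketch of the standard definition, NSE = 1 - Σ(obs - sim)² / Σ(obs - mean(obs))². The function name and the sample depth values are illustrative assumptions and are not taken from the paper.

```python
import numpy as np


def nash_sutcliffe_efficiency(observed, simulated):
    """Standard NSE: 1 means a perfect match, 0 means no better than
    predicting the mean of the observations, negative means worse."""
    observed = np.asarray(observed, dtype=float)
    simulated = np.asarray(simulated, dtype=float)
    # Sum of squared errors between observations and model output.
    residual = np.sum((observed - simulated) ** 2)
    # Sum of squared deviations of the observations from their own mean.
    spread = np.sum((observed - observed.mean()) ** 2)
    return 1.0 - residual / spread


# Hypothetical daily snow depths in meters (made up for illustration).
observed_depth = np.array([0.0, 0.2, 0.5, 0.9, 0.7, 0.3])
simulated_depth = np.array([0.0, 0.25, 0.45, 0.85, 0.75, 0.3])
nse = nash_sutcliffe_efficiency(observed_depth, simulated_depth)
```

A score above 0.94, as reported in the abstract, means the squared error is less than 6% of the variance of the observed series.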

Toward Routing River Water in Land Surface Models with Recurrent Neural Networks

Apr 26, 2024

Abstract: Machine learning is playing an increasing role in hydrology, supplementing or replacing physics-based models. One notable example is the use of recurrent neural networks (RNNs) for forecasting streamflow given observed precipitation and geographic characteristics. Training of such a model over the continental United States has demonstrated that a single set of model parameters can be used across independent catchments, and that RNNs can outperform physics-based models. In this work, we take a next step and study the performance of RNNs for river routing in land surface models (LSMs). Instead of observed precipitation, the LSM-RNN uses instantaneous runoff calculated from physics-based models as an input. We train the model with data from river basins spanning the globe and test it in streamflow hindcasts. The model demonstrates skill at generalization across basins (predicting streamflow in unseen catchments) and across time (predicting streamflow during years not used in training). We compare the predictions from the LSM-RNN to an existing physics-based model calibrated with a similar dataset and find that the LSM-RNN outperforms the physics-based model. Our results give further evidence that RNNs are effective for global streamflow prediction from runoff inputs and motivate the development of complete routing models that can capture nested sub-basin connections.

Easing Color Shifts in Score-Based Diffusion Models

Jun 27, 2023

Abstract: Generated images of score-based models can suffer from errors in their spatial means, an effect referred to as a color shift, which grows for larger images. This paper introduces a computationally inexpensive way to mitigate color shifts in score-based diffusion models. We propose a simple nonlinear bypass connection in the score network, designed to process the spatial mean of the input and to predict the mean of the score function. This network architecture substantially improves the resulting spatial means of the generated images, and we show that the improvement is approximately independent of the size of the generated images. As a result, our approach offers a comparatively inexpensive solution to the color shift problem across image sizes. Lastly, we discuss the origin of color shifts in an idealized setting in order to motivate our approach.
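The idea of a bypass that handles the spatial mean separately can be sketched roughly as follows. This is an illustration under stated assumptions, not the architecture from the paper: the function names, the choice to subtract the spatial mean from the main network's output, and the stand-in callables are all hypothetical.

```python
import numpy as np


def spatial_mean(x):
    """Per-sample, per-channel mean over height and width.
    x has shape (batch, channels, height, width)."""
    return x.mean(axis=(2, 3), keepdims=True)


def score_with_mean_bypass(x, main_network, bypass_network):
    """Combine a main network with a bypass acting only on the spatial mean.

    Assumption for this sketch: the main output has its spatial mean
    removed, so the mean of the final output is set by the bypass alone.
    """
    main_out = main_network(x)
    main_out = main_out - spatial_mean(main_out)
    # The bypass sees only the (batch, channels, 1, 1) mean of the input
    # and its output is broadcast over all pixels.
    return main_out + bypass_network(spatial_mean(x))


# Stand-in callables (hypothetical, in place of trained networks).
rng = np.random.default_rng(0)
images = rng.normal(size=(2, 3, 8, 8))
output = score_with_mean_bypass(
    images,
    main_network=lambda x: 2.0 * x + 5.0,
    bypass_network=lambda m: -m,
)
```

Because the bypass input has no spatial extent, its cost in this sketch does not grow with image size.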

Unpaired Downscaling of Fluid Flows with Diffusion Bridges

May 02, 2023

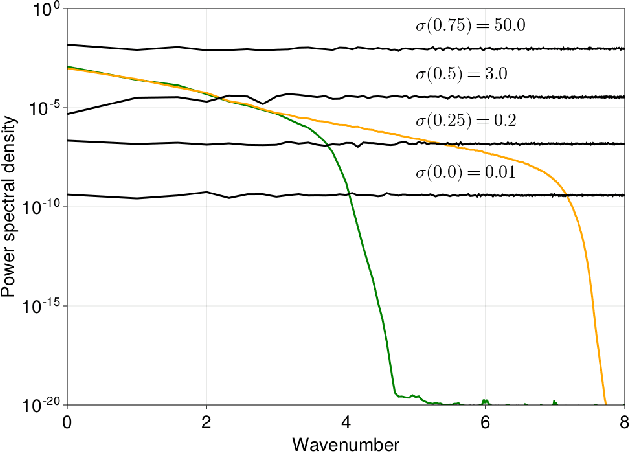

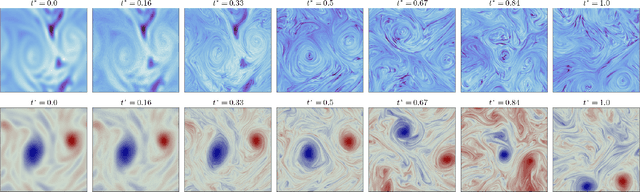

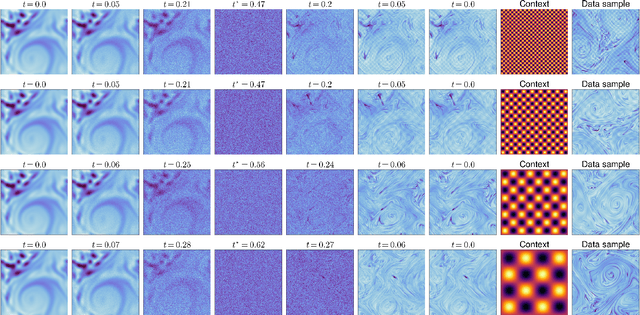

Abstract: We present a method to downscale idealized geophysical fluid simulations using generative models based on diffusion maps. By analyzing the Fourier spectra of images drawn from different data distributions, we show how one can chain together two independent conditional diffusion models for use in domain translation. The resulting transformation is a diffusion bridge between a low-resolution and a high-resolution dataset and allows for new sample generation of high-resolution images given specific low-resolution features. The ability to generate new samples allows for the computation of any statistic of interest, without any additional calibration or training. Our unsupervised setup is also designed to downscale images without access to paired training data; this flexibility allows for the combination of multiple source and target domains without additional training. We demonstrate that the method enhances resolution and corrects context-dependent biases in geophysical fluid simulations, including in extreme events. We anticipate that the same method can be used to downscale the output of climate simulations, including temperature and precipitation fields, without needing to train a new model for each application, providing significant computational cost savings.
