Katarina Grolinger

Any-Class Presence Likelihood for Robust Multi-Label Classification with Abundant Negative Data

Jun 06, 2025

Abstract: Multi-label Classification (MLC) assigns an instance to one or more non-exclusive classes. A challenge arises when the dataset contains a large proportion of instances with no assigned class, referred to as negative data, which can overwhelm the learning process and hinder the accurate identification and classification of positive instances. Nevertheless, it is common in MLC applications such as industrial defect detection, agricultural disease identification, and healthcare diagnosis to encounter large amounts of negative data. Assigning a separate negative class to these instances further complicates the learning objective and introduces unnecessary redundancies. To address this challenge, we redesign standard MLC loss functions by deriving a likelihood of any class being present, formulated as a normalized weighted geometric mean of the predicted class probabilities. We introduce a regularization parameter that controls the relative contribution of the absent class probabilities to the any-class presence likelihood in positive instances. The any-class presence likelihood complements the multi-label learning by encouraging the network to become more aware of implicit positive instances and improve the label classification within those positive instances. Experiments on large-scale datasets with negative data (SewerML, modified COCO, and ChestX-ray14) across various networks and base loss functions show that our loss functions consistently improve on the MLC performance of their standard loss counterparts, achieving gains of up to 6.01 percentage points in F1, 8.06 in F2, and 3.11 in mean average precision, all without additional parameters or computational complexity. Code available at: https://github.com/ML-for-Sensor-Data-Western/gmean-mlc

Graph Attention Convolutional U-NET: A Semantic Segmentation Model for Identifying Flooded Areas

Feb 21, 2025

Abstract: The increasing impact of human-induced climate change and unplanned urban construction has increased flooding incidents in recent years. Accurate identification of flooded areas is crucial for effective disaster management and urban planning. While a few works have utilized convolutional neural networks and transformer-based semantic segmentation techniques for identifying flooded areas from aerial footage, recent developments in graph neural networks have created improvement opportunities. This paper proposes an innovative approach, the Graph Attention Convolutional U-NET (GAC-UNET) model, based on graph neural networks for automated identification of flooded areas. The model incorporates a graph attention mechanism and Chebyshev layers into the U-Net architecture. Furthermore, this paper explores the applicability of transfer learning and model reprogramming to enhance the accuracy of flood area segmentation models. Empirical results demonstrate that the proposed GAC-UNET model outperforms other approaches with 91% mAP, 94% Dice score, and 89% IoU, providing valuable insights for informed decision-making and better planning of future infrastructure in flood-prone areas.
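
For readers unfamiliar with the two overlap metrics quoted in the abstract above, the following is a minimal sketch of how a Dice score and IoU are commonly computed for a pair of binary masks. It is not taken from the paper: the function name `dice_and_iou`, the nested-list mask format, and the convention for two empty masks are assumptions made for illustration.

```python
def dice_and_iou(pred, target):
    """Return (dice, iou) for two equally sized binary masks (nested lists of 0/1)."""
    flat_pred = [p for row in pred for p in row]
    flat_tgt = [t for row in target for t in row]

    # Pixels marked as flooded in both masks.
    intersection = sum(1 for p, t in zip(flat_pred, flat_tgt) if p and t)
    pred_area = sum(1 for p in flat_pred if p)
    tgt_area = sum(1 for t in flat_tgt if t)
    union = pred_area + tgt_area - intersection

    if union == 0:
        # Assumed convention: two empty masks count as perfect agreement.
        return 1.0, 1.0

    dice = 2 * intersection / (pred_area + tgt_area)
    iou = intersection / union
    return dice, iou


# Hypothetical 2x3 masks: 1 = flooded pixel, 0 = background.
pred = [[1, 1, 0],
        [0, 1, 0]]
target = [[1, 0, 0],
          [0, 1, 1]]
dice, iou = dice_and_iou(pred, target)
```

The two metrics are monotonically related (Dice = 2·IoU / (1 + IoU) for a single mask pair), so Dice is never lower than IoU on the same prediction.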

Leveraging Hypernetworks and Learnable Kernels for Consumer Energy Forecasting Across Diverse Consumer Types

Feb 07, 2025

Abstract: Consumer energy forecasting is essential for managing energy consumption and planning, directly influencing operational efficiency, cost reduction, personalized energy management, and sustainability efforts. In recent years, deep learning techniques, especially LSTMs and transformers, have been greatly successful in the field of energy consumption forecasting. Nevertheless, these techniques have difficulties in capturing complex and sudden variations, and, moreover, they are commonly examined only on a specific type of consumer (e.g., only offices, only schools). Consequently, this paper proposes HyperEnergy, a consumer energy forecasting strategy that leverages hypernetworks for improved modeling of complex patterns applicable across a diversity of consumers. The hypernetwork is responsible for predicting the parameters of the primary prediction network, in our case an LSTM. A learnable adaptable kernel, composed of polynomial and radial basis function kernels, is incorporated to enhance performance. The proposed HyperEnergy was evaluated on diverse consumers, including student residences, detached homes, a home with electric vehicle charging, and a townhouse. Across all consumer types, HyperEnergy consistently outperformed 10 other techniques, including state-of-the-art models such as LSTM, AttentionLSTM, and transformer.

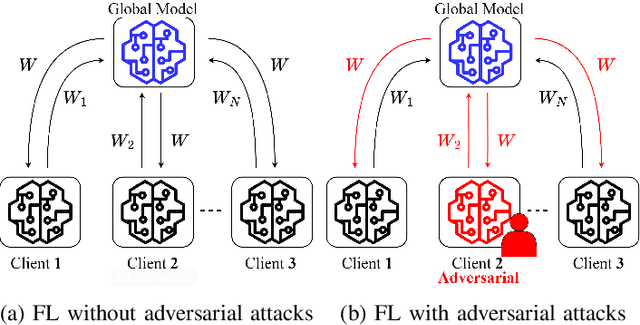

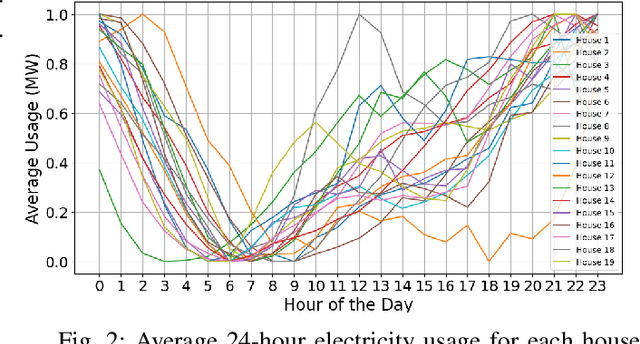

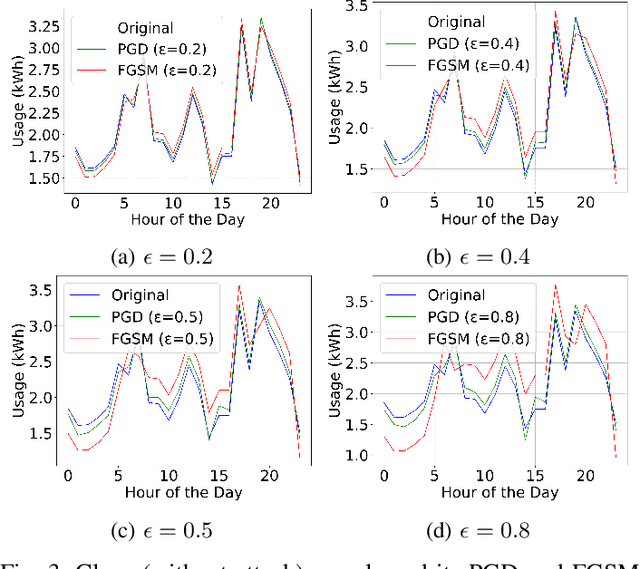

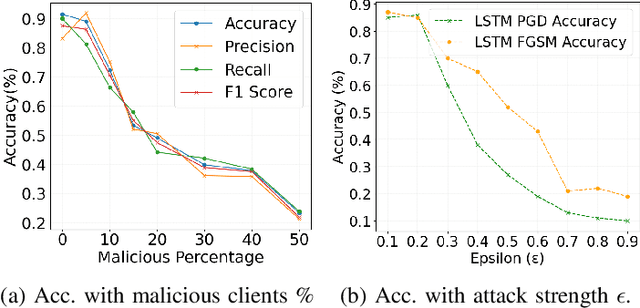

Federated Learning for Anomaly Detection in Energy Consumption Data: Assessing the Vulnerability to Adversarial Attacks

Feb 07, 2025

Abstract: Anomaly detection is crucial in the energy sector to identify irregular patterns indicating equipment failures, energy theft, or other issues. Machine learning techniques for anomaly detection have achieved great success, but are typically centralized, involving sharing local data with a central server, which raises privacy and security concerns. Federated Learning (FL) has been gaining popularity as it enables distributed learning without sharing local data. However, FL depends on neural networks, which are vulnerable to adversarial attacks that manipulate data, leading models to make erroneous predictions. While adversarial attacks have been explored in the image domain, they remain largely unexplored in time series problems, especially in the energy domain. Moreover, the effect of adversarial attacks in the FL setting is also mostly unknown. This paper assesses the vulnerability of FL-based anomaly detection in energy data to adversarial attacks. Specifically, two state-of-the-art models, Long Short-Term Memory (LSTM) and Transformers, are used to detect anomalies in an FL setting, and two white-box attack methods, Fast Gradient Sign Method (FGSM) and Projected Gradient Descent (PGD), are employed to perturb the data. The results show that FL is more sensitive to PGD attacks than to FGSM attacks, attributed to PGD's iterative nature, resulting in an accuracy drop of over 10% even with naive, weaker attacks. Moreover, FL is more affected by these attacks than centralized learning, highlighting the need for defense mechanisms in FL.
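
To make the difference between the two attacks named above concrete, here is a minimal sketch of FGSM (one signed-gradient step) and PGD (repeated smaller steps, each projected back into an L-infinity ball around the original input). It is an illustration only: the victim here is a tiny logistic-regression model with hand-picked weights, not the LSTM or Transformer models or the federated setup from the paper, and all names (`fgsm`, `pgd`, `eps`, `alpha`) are assumptions.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict(x, w, b):
    """Probability of the positive class under a logistic-regression model."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def loss_grad_wrt_input(x, y, w, b):
    """Gradient of the binary cross-entropy loss with respect to the input x."""
    p = predict(x, w, b)
    return [(p - y) * wi for wi in w]


def sign(v):
    return (v > 0) - (v < 0)


def fgsm(x, y, w, b, eps):
    """Single step of size eps in the direction that increases the loss."""
    g = loss_grad_wrt_input(x, y, w, b)
    return [xi + eps * sign(gi) for xi, gi in zip(x, g)]


def pgd(x, y, w, b, eps, alpha, steps):
    """Iterated signed-gradient steps of size alpha, clipped to [x - eps, x + eps]."""
    x_adv = list(x)
    for _ in range(steps):
        g = loss_grad_wrt_input(x_adv, y, w, b)
        x_adv = [xa + alpha * sign(gi) for xa, gi in zip(x_adv, g)]
        # Projection step: stay within eps of the original input.
        x_adv = [min(max(xa, x0 - eps), x0 + eps) for xa, x0 in zip(x_adv, x)]
    return x_adv


# Hypothetical model and sample, chosen only for illustration.
w, b = [2.0, -1.0], 0.0
x, y = [0.5, 0.2], 1
x_fgsm = fgsm(x, y, w, b, eps=0.1)
x_pgd = pgd(x, y, w, b, eps=0.1, alpha=0.04, steps=5)
```

On a linear model like this one the gradient sign never changes, so both attacks end at the same corner of the allowed region; the advantage of PGD's iterations only shows up on non-linear models such as the neural networks studied in the paper.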

Kolmogorov-Arnold Recurrent Network for Short Term Load Forecasting Across Diverse Consumers

Jan 12, 2025

Abstract: Load forecasting plays a crucial role in energy management, directly impacting grid stability, operational efficiency, cost reduction, and environmental sustainability. Traditional vanilla Recurrent Neural Networks (RNNs) face issues such as vanishing and exploding gradients, whereas sophisticated RNNs such as LSTMs have shown considerable success in this domain. However, these models often struggle to accurately capture complex and sudden variations in energy consumption, and their applicability is typically limited to specific consumer types, such as offices or schools. To address these challenges, this paper proposes the Kolmogorov-Arnold Recurrent Network (KARN), a novel load forecasting approach that combines the flexibility of Kolmogorov-Arnold Networks with RNNs' temporal modeling capabilities. KARN utilizes learnable temporal spline functions and edge-based activations to better model non-linear relationships in load data, making it adaptable across a diverse range of consumer types. The proposed KARN model was rigorously evaluated on a variety of real-world datasets, including student residences, detached homes, a home with electric vehicle charging, a townhouse, and industrial buildings. Across all these consumer categories, KARN consistently outperformed traditional vanilla RNNs, while it surpassed LSTM and Gated Recurrent Units (GRUs) in six buildings. The results demonstrate KARN's superior accuracy and applicability, making it a promising tool for enhancing load forecasting in diverse energy management scenarios.

Explaining Deep Learning-based Anomaly Detection in Energy Consumption Data by Focusing on Contextually Relevant Data

Jan 10, 2025

Abstract: Detecting anomalies in energy consumption data is crucial for identifying energy waste, equipment malfunction, and, overall, for ensuring efficient energy management. Machine learning, and specifically deep learning approaches, have been greatly successful in anomaly detection; however, they are black-box approaches that do not provide transparency or explanations. SHAP and its variants have been proposed to explain these models, but they suffer from high computational complexity (SHAP) or instability and inconsistency (e.g., Kernel SHAP). To address these challenges, this paper proposes an explainability approach for anomalies in energy consumption data that focuses on context-relevant information. The proposed approach leverages existing explainability techniques, focusing on SHAP variants, together with global feature importance and weighted cosine similarity to select a background dataset based on the context of each anomaly point. By focusing on the context and most relevant features, this approach mitigates the instability of explainability algorithms. Experimental results across 10 different machine learning models, five datasets, and five XAI techniques demonstrate that our method reduces the variability of explanations, providing consistent explanations. Statistical analyses confirm the robustness of our approach, showing an average reduction in variability of approximately 38% across multiple datasets.
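
The background-selection idea described above can be sketched roughly as follows, under stated assumptions: feature importances are used directly as non-negative weights in a cosine similarity, and the `k` candidate points most similar to the anomaly are kept as the background set. The abstract does not give the exact weighting or selection rule, so `weighted_cosine`, `select_background`, and the sample values are hypothetical.

```python
import math


def weighted_cosine(a, b, weights):
    """Cosine similarity where each feature contributes in proportion to its weight."""
    num = sum(w * x * y for w, x, y in zip(weights, a, b))
    norm_a = math.sqrt(sum(w * x * x for w, x in zip(weights, a)))
    norm_b = math.sqrt(sum(w * y * y for w, y in zip(weights, b)))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # assumed convention for all-zero (weighted) vectors
    return num / (norm_a * norm_b)


def select_background(anomaly, candidates, weights, k):
    """Return the k candidates most similar to the anomaly point."""
    ranked = sorted(
        candidates,
        key=lambda c: weighted_cosine(anomaly, c, weights),
        reverse=True,
    )
    return ranked[:k]


# Hypothetical feature vectors and global importances (third feature ignored).
anomaly = [1.0, 0.0, 5.0]
weights = [0.7, 0.3, 0.0]
candidates = [
    [2.0, 0.0, -3.0],
    [0.0, 1.0, 5.0],
    [1.0, 1.0, 0.0],
]
background = select_background(anomaly, candidates, weights, k=2)
```

With a weight of zero, the third feature has no influence on which points are selected, which is the sense in which the selection focuses on the most relevant features.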

* 26 pages, 8 figures

Global-Local Image Perceptual Score (GLIPS): Evaluating Photorealistic Quality of AI-Generated Images

May 16, 2024

Abstract: This paper introduces the Global-Local Image Perceptual Score (GLIPS), an image metric designed to assess the photorealistic image quality of AI-generated images with a high degree of alignment to human visual perception. Traditional metrics such as FID and KID scores do not align closely with human evaluations. The proposed metric incorporates advanced transformer-based attention mechanisms to assess local similarity and Maximum Mean Discrepancy (MMD) to evaluate global distributional similarity. To evaluate the performance of GLIPS, we conducted a human study on photorealistic image quality. Comprehensive tests across various generative models demonstrate that GLIPS consistently outperforms existing metrics like FID, SSIM, and MS-SSIM in terms of correlation with human scores. Additionally, we introduce the Interpolative Binning Scale (IBS), a refined scaling method that enhances the interpretability of metric scores by aligning them more closely with human evaluative standards. The proposed metric and scaling approach not only provide more reliable assessments of AI-generated images but also suggest pathways for future enhancements in image generation technologies.
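
As background for the global term mentioned above, the following is a minimal sketch of a squared Maximum Mean Discrepancy estimate between two sets of feature vectors. The RBF kernel, the bandwidth parameter `gamma`, and the biased (V-statistic) estimator are assumptions chosen for brevity; the abstract does not say which kernel or estimator GLIPS uses.

```python
import math


def rbf_kernel(x, y, gamma):
    """Gaussian (RBF) kernel between two feature vectors."""
    sq_dist = sum((a - b) ** 2 for a, b in zip(x, y))
    return math.exp(-gamma * sq_dist)


def mean_kernel(xs, ys, gamma):
    """Average kernel value over all pairs (x, y) with x in xs and y in ys."""
    total = sum(rbf_kernel(x, y, gamma) for x in xs for y in ys)
    return total / (len(xs) * len(ys))


def mmd_squared(xs, ys, gamma=1.0):
    """Biased estimate of MMD^2: E[k(x,x')] + E[k(y,y')] - 2 E[k(x,y)]."""
    return (
        mean_kernel(xs, xs, gamma)
        + mean_kernel(ys, ys, gamma)
        - 2 * mean_kernel(xs, ys, gamma)
    )


# Hypothetical 2-D feature vectors standing in for image embeddings.
real = [[0.0, 0.0], [0.1, 0.2], [0.2, 0.1]]
close = [[0.1, 0.1], [0.0, 0.2], [0.2, 0.0]]
far = [[3.0, 3.0], [3.1, 2.9], [2.9, 3.2]]

near_score = mmd_squared(real, close)
far_score = mmd_squared(real, far)
```

A value near zero indicates that the two samples are hard to tell apart under the chosen kernel, while larger values indicate a larger distributional gap.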

Interval Load Forecasting for Individual Households in the Presence of Electric Vehicle Charging

Jun 05, 2023

Abstract: The transition to Electric Vehicles (EV) in place of traditional internal combustion engines is increasing societal demand for electricity. The ability to integrate the additional demand from EV charging into forecasting electricity demand is critical for maintaining the reliability of electricity generation and distribution. Load forecasting studies typically exclude households with home EV charging, focusing on offices, schools, and public charging stations. Moreover, they provide point forecasts which do not offer information about prediction uncertainty. Consequently, this paper proposes Long Short-Term Memory Bayesian Neural Networks (LSTM-BNNs) for household load forecasting in the presence of EV charging. The approach takes advantage of the LSTM model to capture the time dependencies and uses the dropout layer with Bayesian inference to generate prediction intervals. Results show that the proposed LSTM-BNNs achieve accuracy similar to point forecasts with the advantage of prediction intervals. Moreover, the impact of lockdowns related to the COVID-19 pandemic on the load forecasting model is examined, and the analysis shows that there is no major change in the model performance as, for the considered households, the randomness of the EV charging outweighs the change due to the pandemic.

Agglomerative Hierarchical Clustering with Dynamic Time Warping for Household Load Curve Clustering

Oct 18, 2022

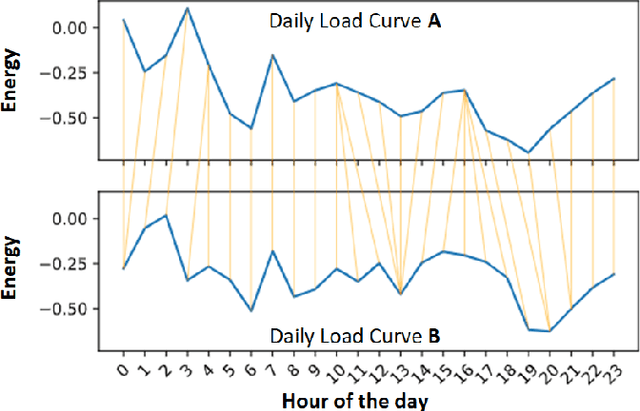

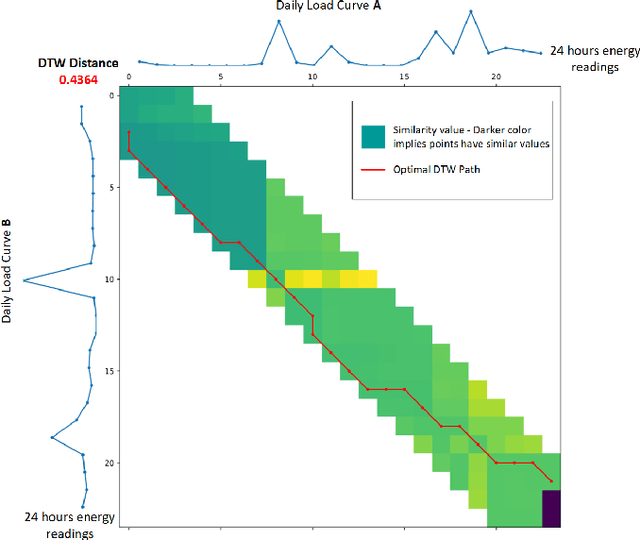

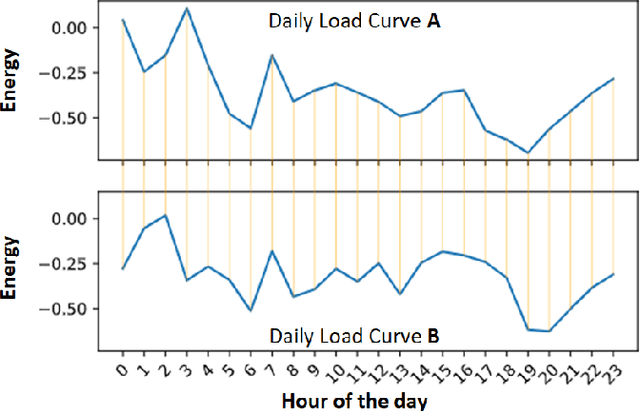

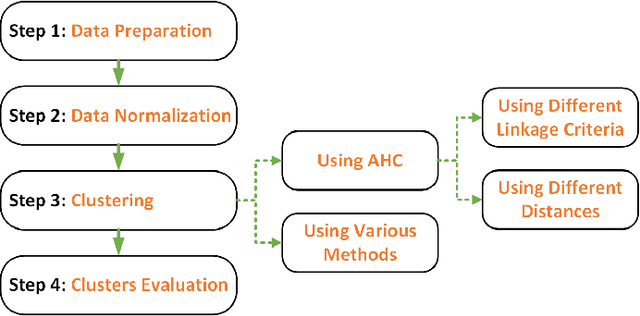

Abstract: Energy companies often implement various demand response (DR) programs to better match electricity demand and supply by offering the consumers incentives to reduce their demand during critical periods. Classifying clients according to their consumption patterns enables targeting specific groups of consumers for DR. Traditional clustering algorithms use standard distance measures to find the distance between two points. The results produced by clustering algorithms such as K-means, K-medoids, and Gaussian Mixture Models (GMM) depend on the clustering parameters or initial clusters. In contrast, our methodology uses a shape-based approach that combines Agglomerative Hierarchical Clustering (AHC) with Dynamic Time Warping (DTW) to classify residential households' daily load curves based on their consumption patterns. While DTW seeks the optimal alignment between two load curves, AHC provides realistic initial cluster centers. In this paper, we compare the results with other clustering algorithms such as K-means, K-medoids, and GMM using different distance measures, and we show that AHC using DTW outperformed other clustering algorithms and needed fewer clusters.
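
The combination of DTW and AHC described above can be sketched as follows. This is an illustration under assumptions rather than the paper's implementation: the DTW uses absolute difference as the local cost with no warping window, the merging uses average linkage, and the load curves are made-up short sequences. The names `dtw_distance` and `agglomerative` are hypothetical.

```python
def dtw_distance(a, b):
    """Dynamic Time Warping distance between two sequences (absolute-difference cost)."""
    n, m = len(a), len(b)
    inf = float("inf")
    # cost[i][j] = best alignment cost of a[:i] with b[:j].
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            d = abs(a[i - 1] - b[j - 1])
            cost[i][j] = d + min(cost[i - 1][j], cost[i][j - 1], cost[i - 1][j - 1])
    return cost[n][m]


def agglomerative(curves, n_clusters):
    """Bottom-up clustering of curve indices with average linkage (an assumption)."""
    size = len(curves)
    dist = {
        (i, j): dtw_distance(curves[i], curves[j])
        for i in range(size)
        for j in range(i + 1, size)
    }

    def linkage(c1, c2):
        total = sum(dist[(min(i, j), max(i, j))] for i in c1 for j in c2)
        return total / (len(c1) * len(c2))

    clusters = [[i] for i in range(size)]
    while len(clusters) > n_clusters:
        # Find the closest pair of clusters and merge them.
        _, p, q = min(
            (linkage(clusters[p], clusters[q]), p, q)
            for p in range(len(clusters))
            for q in range(p + 1, len(clusters))
        )
        clusters[p] = clusters[p] + clusters[q]
        del clusters[q]
    return clusters


# Hypothetical daily load curves: two with a shifted peak, two nearly flat.
curves = [
    [0, 0, 1, 2, 1, 0],
    [0, 1, 2, 1, 0, 0],
    [5, 5, 5, 5, 5, 5],
    [5, 5, 4, 5, 5, 5],
]
groups = agglomerative(curves, n_clusters=2)
```

The first two curves have the same shape shifted by one time step, so DTW aligns them at zero cost, whereas a point-by-point distance would treat them as different; this is the shape-based behavior the abstract refers to.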

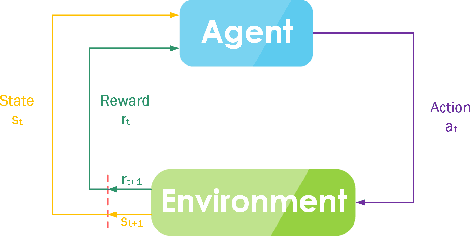

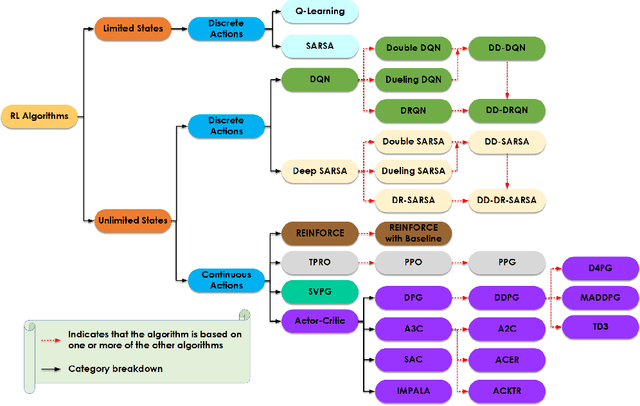

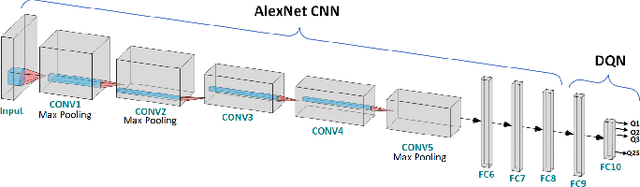

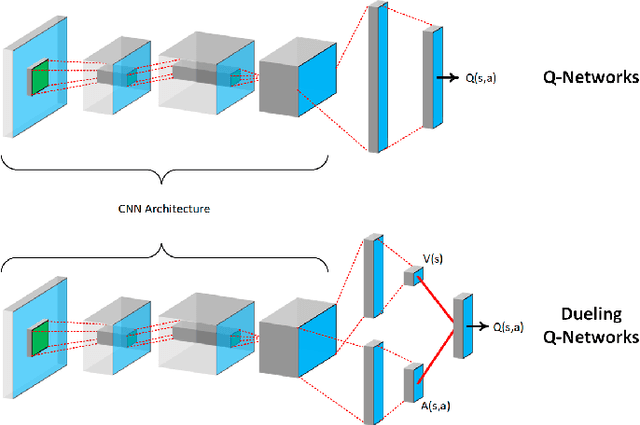

Reinforcement Learning Algorithms: An Overview and Classification

Sep 29, 2022

Abstract: The desire to make applications and machines more intelligent and the aspiration to enable their operation without human interaction have been driving innovations in neural networks, deep learning, and other machine learning techniques. Although reinforcement learning has been primarily used in video games, recent advancements and the development of diverse and powerful reinforcement algorithms have enabled the reinforcement learning community to move from playing video games to solving complex real-life problems in autonomous systems such as self-driving cars, delivery drones, and automated robotics. Understanding the environment of an application and the algorithms' limitations plays a vital role in selecting the appropriate reinforcement learning algorithm that successfully solves the problem at hand in an efficient manner. Consequently, in this study, we identify three main environment types and classify reinforcement learning algorithms according to those environment types. Moreover, within each category, we identify relationships between algorithms. The overview of each algorithm provides insight into the algorithms' foundations and reviews similarities and differences among algorithms. This study provides a perspective on the field and helps practitioners and researchers to select the appropriate algorithm for their use case.
