Fadi AlMahamid

A Multi-Step Comparative Framework for Anomaly Detection in IoT Data Streams

May 22, 2025

Abstract:The rapid expansion of Internet of Things (IoT) devices has introduced critical security challenges, underscoring the need for accurate anomaly detection. Although numerous studies have proposed machine learning (ML) methods for this purpose, limited research systematically examines how different preprocessing steps--normalization, transformation, and feature selection--interact with distinct model architectures. To address this gap, this paper presents a multi-step evaluation framework assessing the combined impact of preprocessing choices on three ML algorithms: RNN-LSTM, autoencoder neural networks (ANN), and Gradient Boosting (GBoosting). Experiments on the IoTID20 dataset show that GBoosting consistently delivers superior accuracy across preprocessing configurations, while RNN-LSTM shows notable gains with z-score normalization and autoencoders excel in recall, making them well-suited for unsupervised scenarios. By offering a structured analysis of preprocessing decisions and their interplay with various ML techniques, the proposed framework provides actionable guidance to enhance anomaly detection performance in IoT environments.
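As a rough illustration of the normalization step mentioned in the abstract, the sketch below contrasts z-score normalization with min-max scaling on a single feature column. It is not the paper's pipeline: the function names and the `packet_sizes` values are hypothetical, chosen only to show how one outlier affects each scheme.

```python
from statistics import mean, pstdev


def z_score(values):
    """Rescale values to zero mean and unit (population) standard deviation."""
    mu = mean(values)
    sigma = pstdev(values)
    if sigma == 0:
        # A constant column carries no information; map it to zeros.
        return [0.0 for _ in values]
    return [(v - mu) / sigma for v in values]


def min_max(values):
    """Rescale values linearly into the range [0, 1]."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


# Hypothetical feature column with one large outlier.
packet_sizes = [60, 64, 62, 1500, 61]

print("z-score:", [round(z, 3) for z in z_score(packet_sizes)])
print("min-max:", [round(m, 3) for m in min_max(packet_sizes)])
```

With min-max scaling the outlier pins the upper end of the range and compresses the remaining values near zero, whereas z-score scaling expresses each value in standard deviations from the mean.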

Agglomerative Hierarchical Clustering with Dynamic Time Warping for Household Load Curve Clustering

Oct 18, 2022

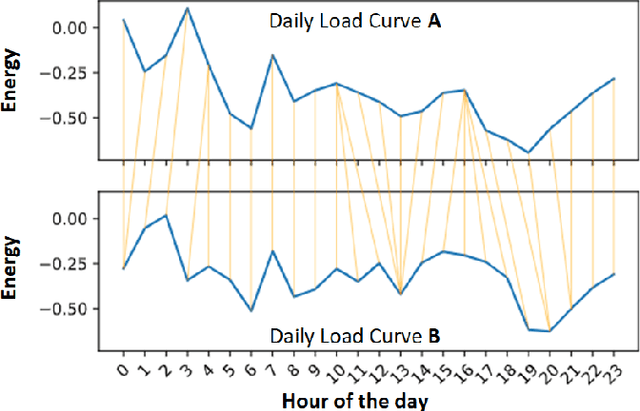

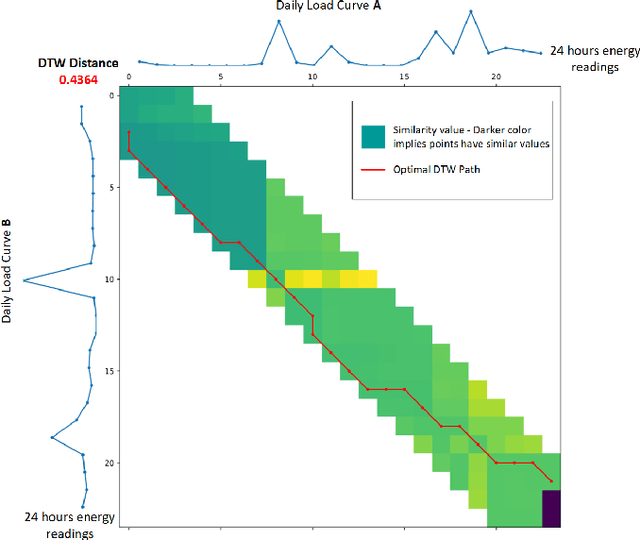

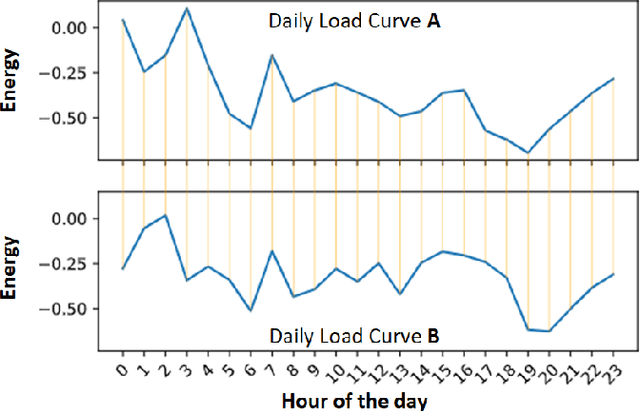

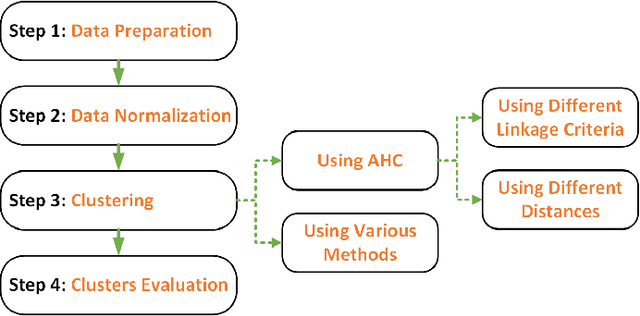

Abstract:Energy companies often implement various demand response (DR) programs to better match electricity demand and supply by offering consumers incentives to reduce their demand during critical periods. Classifying clients according to their consumption patterns enables targeting specific groups of consumers for DR. Traditional clustering algorithms use standard distance measures to find the distance between two points. The results produced by clustering algorithms such as K-means, K-medoids, and Gaussian Mixture Models (GMM) depend on the clustering parameters or initial clusters. In contrast, our methodology uses a shape-based approach that combines Agglomerative Hierarchical Clustering (AHC) with Dynamic Time Warping (DTW) to classify residential households' daily load curves based on their consumption patterns. While DTW seeks the optimal alignment between two load curves, AHC provides realistic initial cluster centers. In this paper, we compare the results with other clustering algorithms such as K-means, K-medoids, and GMM using different distance measures, and we show that AHC using DTW outperformed the other clustering algorithms and needed fewer clusters.
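The combination of DTW and AHC described in the abstract can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: it uses the textbook unconstrained DTW recurrence with an absolute-difference cost, average linkage (the abstract does not state the linkage criterion), and four made-up load curves.

```python
from itertools import combinations


def dtw_distance(a, b):
    """Unconstrained DTW with absolute-difference local cost."""
    inf = float("inf")
    n, m = len(a), len(b)
    # cost[i][j] = best cumulative cost of aligning a[:i] with b[:j].
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            local = abs(a[i - 1] - b[j - 1])
            cost[i][j] = local + min(
                cost[i - 1][j],      # repeat b[j-1]
                cost[i][j - 1],      # repeat a[i-1]
                cost[i - 1][j - 1],  # advance both
            )
    return cost[n][m]


def agglomerative(curves, n_clusters):
    """Average-linkage AHC over pairwise DTW distances.

    Returns a list of clusters, each a list of indices into `curves`.
    """
    dist = {
        (i, j): dtw_distance(curves[i], curves[j])
        for i, j in combinations(range(len(curves)), 2)
    }

    def linkage(c1, c2):
        total = sum(dist[(min(i, j), max(i, j))] for i in c1 for j in c2)
        return total / (len(c1) * len(c2))

    clusters = [[i] for i in range(len(curves))]
    while len(clusters) > n_clusters:
        # Merge the two clusters with the smallest average DTW distance.
        x, y = min(
            combinations(range(len(clusters)), 2),
            key=lambda p: linkage(clusters[p[0]], clusters[p[1]]),
        )
        clusters[x] = clusters[x] + clusters[y]
        del clusters[y]  # y > x, so index x is unaffected
    return clusters


# Hypothetical daily load curves: two peaked profiles (peak at different
# times of day) and two nearly flat profiles.
curves = [
    [0, 1, 4, 1, 0, 0, 0, 0],  # 0: peaked, early
    [2, 2, 2, 2, 2, 2, 2, 2],  # 1: flat
    [0, 0, 0, 1, 4, 1, 0, 0],  # 2: peaked, later
    [2, 2, 3, 2, 2, 2, 2, 2],  # 3: nearly flat
]

print(agglomerative(curves, n_clusters=2))
```

Because DTW may stretch either curve along the time axis, the two peaked profiles align closely even though their peaks occur at different hours, which is the shape-based behavior the abstract relies on. A pointwise Euclidean distance would treat them as far apart.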

Reinforcement Learning Algorithms: An Overview and Classification

Sep 29, 2022

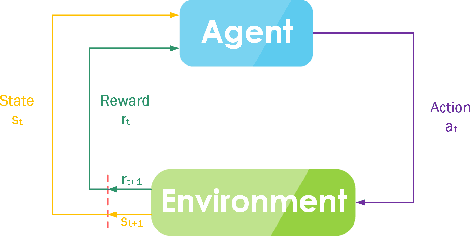

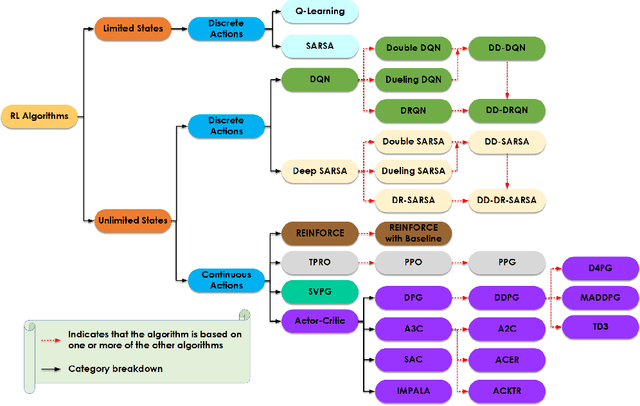

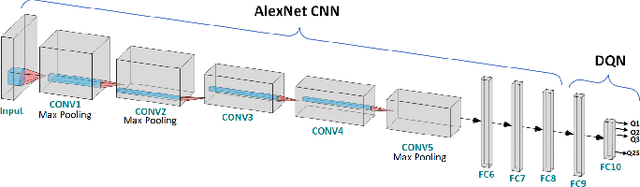

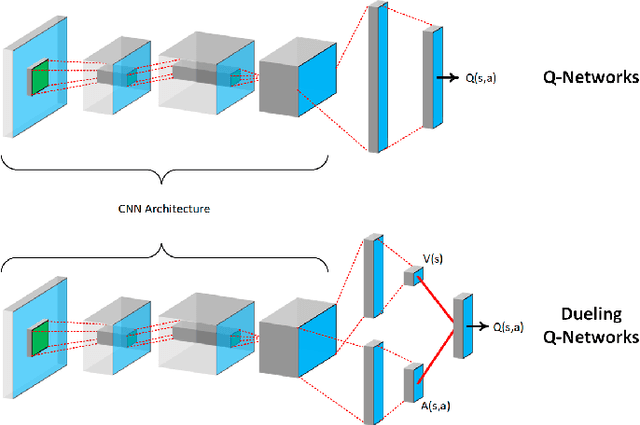

Abstract:The desire to make applications and machines more intelligent and the aspiration to enable their operation without human interaction have been driving innovations in neural networks, deep learning, and other machine learning techniques. Although reinforcement learning has been primarily used in video games, recent advancements and the development of diverse and powerful reinforcement learning algorithms have enabled the reinforcement learning community to move from playing video games to solving complex real-life problems in autonomous systems such as self-driving cars, delivery drones, and automated robotics. Understanding the environment of an application and the algorithms' limitations plays a vital role in selecting the appropriate reinforcement learning algorithm that solves the problem at hand efficiently. Consequently, in this study, we identify three main environment types and classify reinforcement learning algorithms according to those environment types. Moreover, within each category, we identify relationships between algorithms. The overview of each algorithm provides insight into the algorithms' foundations and reviews similarities and differences among algorithms. This study provides a perspective on the field and helps practitioners and researchers select the appropriate algorithm for their use case.

Autonomous Unmanned Aerial Vehicle Navigation using Reinforcement Learning: A Systematic Review

Aug 25, 2022

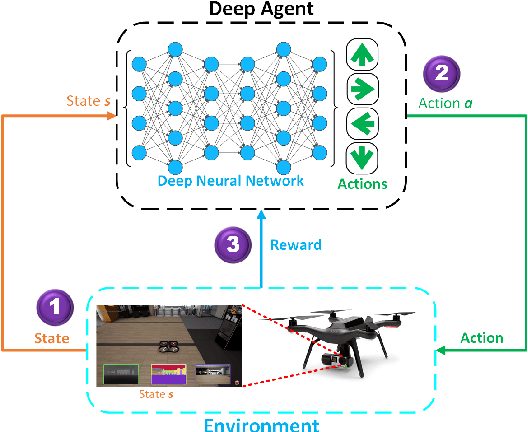

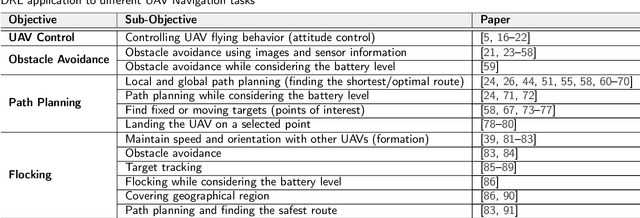

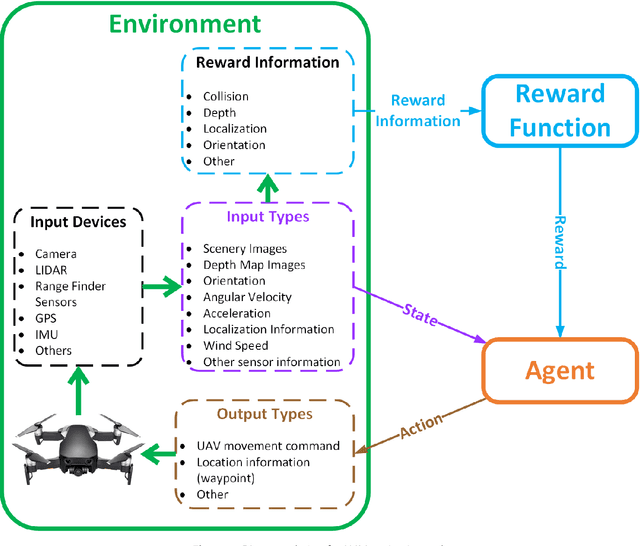

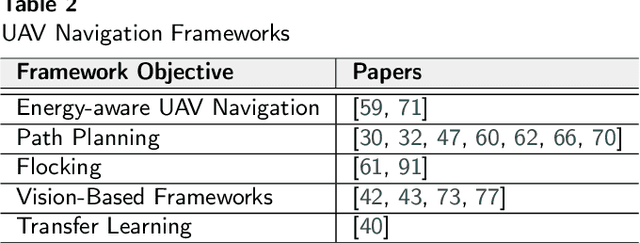

Abstract:There is an increasing demand for using Unmanned Aerial Vehicles (UAVs), commonly known as drones, in different applications such as package delivery, traffic monitoring, search and rescue operations, and military combat engagements. In all of these applications, the UAV navigates the environment autonomously, without human interaction, to perform specific tasks and avoid obstacles. Autonomous UAV navigation is commonly accomplished using Reinforcement Learning (RL), where agents act as experts in a domain to navigate the environment while avoiding obstacles. Understanding the navigation environment and algorithmic limitations plays an essential role in choosing the appropriate RL algorithm to solve the navigation problem effectively. Consequently, this study first identifies the main UAV navigation tasks and discusses navigation frameworks and simulation software. Next, RL algorithms are classified and discussed based on the environment, algorithm characteristics, abilities, and applications in different UAV navigation problems, which will help practitioners and researchers select the appropriate RL algorithms for their UAV navigation use cases. Moreover, the identified gaps and opportunities will drive UAV navigation research.
