Karolina Stosio

The Notorious Difficulty of Comparing Human and Machine Perception

Apr 20, 2020

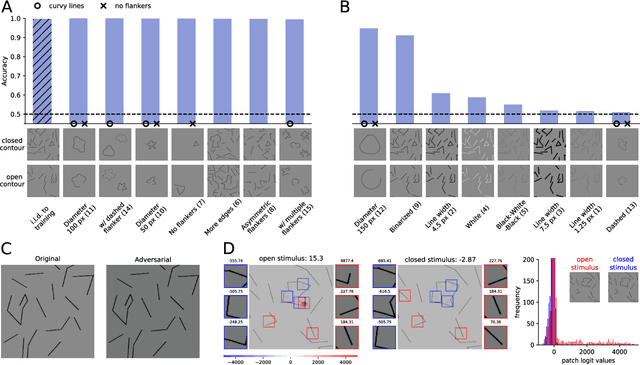

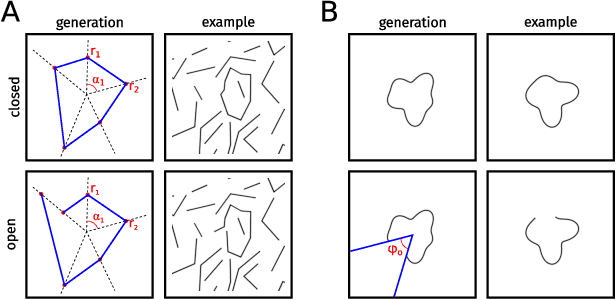

Abstract: With the rise of machines to human-level performance in complex recognition tasks, a growing amount of work is directed towards comparing information processing in humans and machines. These works have the potential to deepen our understanding of the inner mechanisms of human perception and to improve machine learning. Drawing robust conclusions from comparison studies, however, turns out to be difficult. Here, we highlight common shortcomings that can easily lead to fragile conclusions. First, if a model does achieve high performance on a task similar to humans, its decision-making process is not necessarily human-like. Moreover, further analyses can reveal differences. Second, the performance of neural networks is sensitive to training procedures and architectural details. Thus, generalizing conclusions from specific architectures is difficult. Finally, when comparing humans and machines, equivalent experimental settings are crucial in order to identify innate differences. Addressing these shortcomings alters or refines the conclusions of studies. We show that, despite their ability to solve closed-contour tasks, our neural networks use different decision-making strategies than humans. We further show that there is no fundamental difference between same-different and spatial tasks for common feed-forward neural networks and finally, that neural networks do experience a "recognition gap" on minimal recognizable images. All in all, care has to be taken to not impose our human systematic bias when comparing human and machine perception.
