Karol Grzegorczyk

Evaluation of Transfer Learning for Polish with a Text-to-Text Model

May 18, 2022

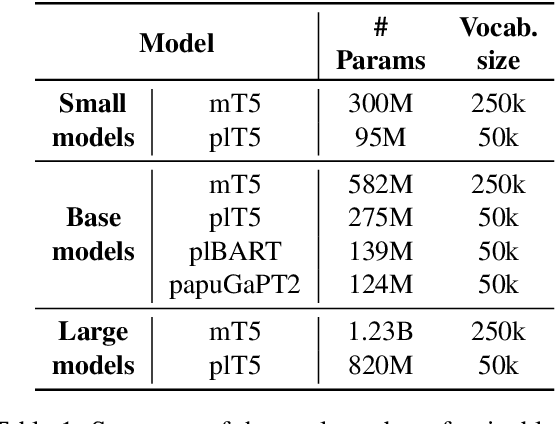

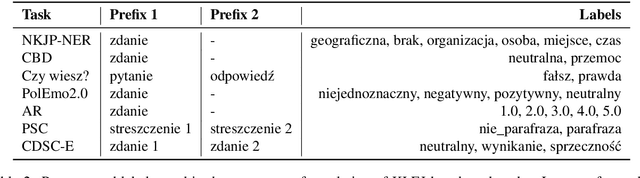

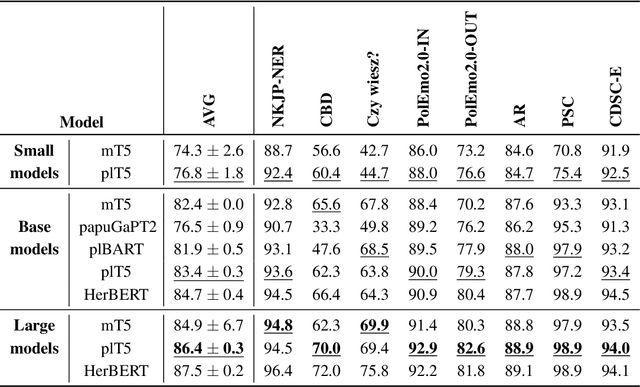

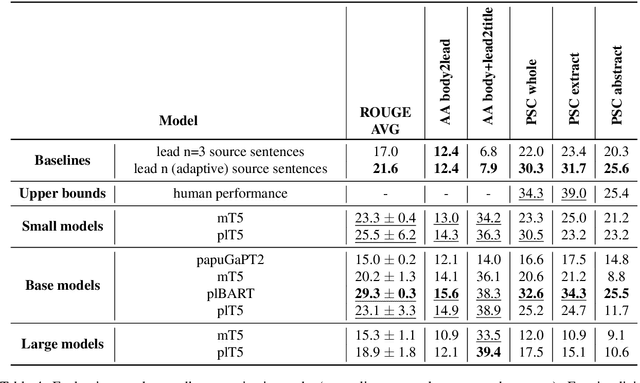

Abstract: We introduce a new benchmark for assessing the quality of text-to-text models for Polish. The benchmark consists of diverse tasks and datasets: the KLEJ benchmark adapted for text-to-text, en-pl translation, summarization, and question answering. In particular, since summarization and question answering lack benchmark datasets for the Polish language, we describe their construction and make them publicly available. Additionally, we present plT5, a general-purpose text-to-text model for Polish that can be fine-tuned on various Natural Language Processing (NLP) tasks with a single training objective. Unsupervised denoising pre-training is performed efficiently by initializing the model weights with a multi-lingual T5 (mT5) counterpart. We evaluate the performance of plT5, mT5, Polish BART (plBART), and Polish GPT-2 (papuGaPT2). plT5 scores highest on all of these tasks except summarization, where plBART is best. In general (except for summarization), the larger the model, the better the results. The encoder-decoder architectures prove to be better than the decoder-only equivalent.
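To illustrate what "adapted for text-to-text" can mean in practice, the following minimal sketch casts a labelled classification example into an input string and a target string, so that a single sequence-to-sequence objective covers it. The function name `to_text_to_text`, the task prefix, and the `field: value` layout are hypothetical and follow the general T5-style convention; the abstract does not specify the format actually used in the benchmark.

```python
from typing import Dict, Optional


def to_text_to_text(task: str, fields: Dict[str, str],
                    label: Optional[str] = None) -> Dict[str, str]:
    """Cast one classification example into a text-to-text pair.

    The prefix and the "name: value" layout are illustrative assumptions,
    not the format used by the benchmark described above.
    """
    # Prefix the input with the task name so one model can serve many tasks.
    parts = [task] + [f"{name}: {value}" for name, value in fields.items()]
    source = " ".join(parts)
    # The class label becomes the literal string the model must generate.
    target = "" if label is None else str(label)
    return {"input": source, "target": target}


example = to_text_to_text(
    "sentiment",                      # hypothetical task prefix
    {"sentence": "Dobry film"},       # Polish for "A good film"
    label="positive",
)
```

With this framing, classification, translation, summarization, and question answering all reduce to generating a target string from an input string.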

Vector representations of text data in deep learning

Jan 07, 2019

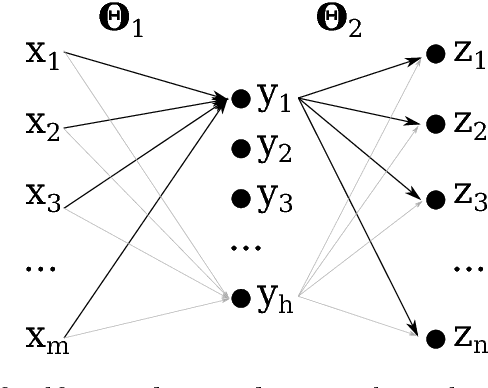

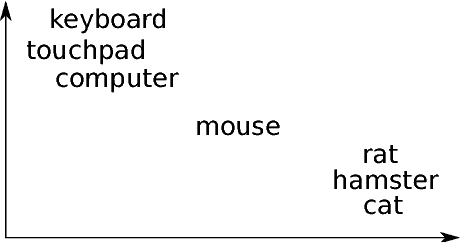

Abstract: In this dissertation we report results of our research on dense distributed representations of text data. We propose two novel neural models for learning such representations. The first model learns representations at the document level, while the second model learns word-level representations. For document-level representations we propose Binary Paragraph Vector: neural network models for learning binary representations of text documents, which can be used for fast document retrieval. We provide a thorough evaluation of these models and demonstrate that they outperform the seminal method in the field in the information retrieval task. We also report strong results in transfer learning settings, where our models are trained on a generic text corpus and then used to infer codes for documents from a domain-specific dataset. In contrast to previously proposed approaches, Binary Paragraph Vector models learn embeddings directly from raw text data. For word-level representations we propose Disambiguated Skip-gram: a neural network model for learning multi-sense word embeddings. Representations learned by this model can be used in downstream tasks, like part-of-speech tagging or identification of semantic relations. In the word sense induction task Disambiguated Skip-gram outperforms state-of-the-art models on three out of four benchmark datasets. Our model has an elegant probabilistic interpretation. Furthermore, unlike previous models of this kind, it is differentiable with respect to all its parameters and can be trained with backpropagation. In addition to quantitative results, we present a qualitative evaluation of Disambiguated Skip-gram, including two-dimensional visualisations of selected word-sense embeddings.

Binary Paragraph Vectors

Jun 09, 2017

Abstract: Recently Le & Mikolov described two log-linear models, called Paragraph Vector, that can be used to learn state-of-the-art distributed representations of documents. Inspired by this work, we present Binary Paragraph Vector models: simple neural networks that learn short binary codes for fast information retrieval. We show that binary paragraph vectors outperform autoencoder-based binary codes, despite using fewer bits. We also evaluate their precision in transfer learning settings, where binary codes are inferred for documents unrelated to the training corpus. Results from these experiments indicate that binary paragraph vectors can capture semantics relevant for various domain-specific documents. Finally, we present a model that simultaneously learns short binary codes and longer, real-valued representations. This model can be used to rapidly retrieve a short list of highly relevant documents from a large document collection.
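The two-stage use of short binary codes and longer real-valued representations can be sketched as follows: a cheap Hamming-distance pass over the binary codes produces a shortlist, which is then re-ranked by cosine similarity on the real-valued vectors. This is only an illustration under stated assumptions. The function names, the index layout, and the choice of cosine similarity for re-ranking are hypothetical, and the codes and vectors are toy values rather than model outputs.

```python
from math import sqrt
from typing import List, Sequence, Tuple

# Hypothetical index entry: (document id, binary code as int, real-valued vector)
Doc = Tuple[str, int, Sequence[float]]


def hamming(a: int, b: int) -> int:
    """Number of differing bits between two binary codes stored as ints."""
    return bin(a ^ b).count("1")


def cosine(u: Sequence[float], v: Sequence[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector has zero norm."""
    dot = sum(x * y for x, y in zip(u, v))
    norm_u = sqrt(sum(x * x for x in u))
    norm_v = sqrt(sum(y * y for y in v))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0


def retrieve(query_code: int, query_vec: Sequence[float], index: List[Doc],
             shortlist_size: int = 3, top_k: int = 2) -> List[str]:
    # Stage 1: filter by Hamming distance on the short binary codes.
    shortlist = sorted(index, key=lambda d: hamming(query_code, d[1]))
    shortlist = shortlist[:shortlist_size]
    # Stage 2: re-rank the shortlist with the real-valued vectors
    # (cosine similarity is an assumption made for this sketch).
    ranked = sorted(shortlist, key=lambda d: cosine(query_vec, d[2]),
                    reverse=True)
    return [doc_id for doc_id, _, _ in ranked[:top_k]]


# Toy index with made-up 4-bit codes and 2-dimensional vectors.
toy_index: List[Doc] = [
    ("a", 0b1010, [1.0, 0.0]),
    ("b", 0b1011, [0.0, 1.0]),
    ("c", 0b0101, [1.0, 0.0]),
    ("d", 0b1000, [0.9, 0.1]),
]
result = retrieve(0b1010, [1.0, 0.0], toy_index)
```

In the toy index, document "c" has a vector identical to the query but is discarded in the first stage because its binary code is far from the query code, which shows the trade-off between speed and recall that the shortlist size controls.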
