Karl Mason

Forecast Aware Deep Reinforcement Learning for Efficient Electricity Load Scheduling in Dairy Farms

Jan 12, 2026

Abstract:Dairy farming is an energy intensive sector that relies heavily on grid electricity. With increasing renewable energy integration, sustainable energy management has become essential for reducing grid dependence and supporting the United Nations Sustainable Development Goal 7 on affordable and clean energy. However, the intermittent nature of renewables poses challenges in balancing supply and demand in real time. Intelligent load scheduling is therefore crucial to minimize operational costs while maintaining reliability. Reinforcement Learning has shown promise in improving energy efficiency and reducing costs. However, most RL-based scheduling methods assume complete knowledge of future prices or generation, which is unrealistic in dynamic environments. Moreover, standard PPO variants rely on fixed clipping or KL divergence thresholds, often leading to unstable training under variable tariffs. To address these challenges, this study proposes a Deep Reinforcement Learning framework for efficient load scheduling in dairy farms, focusing on battery storage and water heating under realistic operational constraints. The proposed Forecast Aware PPO incorporates short term forecasts of demand and renewable generation using hour of day and month based residual calibration, while the PID KL PPO variant employs a proportional integral derivative controller to adaptively regulate KL divergence for stable policy updates. Trained on real world dairy farm data, the method achieves up to 1% lower electricity cost than PPO, 4.8% lower than DQN, and 1.5% lower than SAC. For battery scheduling, PPO reduces grid imports by 13.1%, demonstrating scalability and effectiveness for sustainable energy management in modern dairy farming.

Demonstration-Guided Continual Reinforcement Learning in Dynamic Environments

Dec 21, 2025

Abstract:Reinforcement learning (RL) excels in various applications but struggles in dynamic environments where the underlying Markov decision process evolves. Continual reinforcement learning (CRL) enables RL agents to continually learn and adapt to new tasks, but balancing stability (preserving prior knowledge) and plasticity (acquiring new knowledge) remains challenging. Existing methods primarily address the stability-plasticity dilemma through mechanisms where past knowledge influences optimization but rarely affects the agent's behavior directly, which may hinder effective knowledge reuse and efficient learning. In contrast, we propose demonstration-guided continual reinforcement learning (DGCRL), which stores prior knowledge in an external, self-evolving demonstration repository that directly guides RL exploration and adaptation. For each task, the agent dynamically selects the most relevant demonstration and follows a curriculum-based strategy to accelerate learning, gradually shifting from demonstration-guided exploration to full self-exploration. Extensive experiments on 2D navigation and MuJoCo locomotion tasks demonstrate its superior average performance, enhanced knowledge transfer, mitigation of forgetting, and training efficiency. The additional sensitivity analysis and ablation study further validate its effectiveness.

Uncertainty-Aware Knowledge Transformers for Peer-to-Peer Energy Trading with Multi-Agent Reinforcement Learning

Jul 22, 2025

Abstract:This paper presents a novel framework for Peer-to-Peer (P2P) energy trading that integrates uncertainty-aware prediction with multi-agent reinforcement learning (MARL), addressing a critical gap in current literature. In contrast to previous works relying on deterministic forecasts, the proposed approach employs a heteroscedastic probabilistic transformer-based prediction model called Knowledge Transformer with Uncertainty (KTU) to explicitly quantify prediction uncertainty, which is essential for robust decision-making in the stochastic environment of P2P energy trading. The KTU model leverages domain-specific features and is trained with a custom loss function that ensures reliable probabilistic forecasts and confidence intervals for each prediction. Integrating these uncertainty-aware forecasts into the MARL framework enables agents to optimize trading strategies with a clear understanding of risk and variability. Experimental results show that the uncertainty-aware Deep Q-Network (DQN) reduces energy purchase costs by up to 5.7% without P2P trading and 3.2% with P2P trading, while increasing electricity sales revenue by 6.4% and 44.7%, respectively. Additionally, peak hour grid demand is reduced by 38.8% without P2P and 45.6% with P2P. These improvements are even more pronounced when P2P trading is enabled, highlighting the synergy between advanced forecasting and market mechanisms for resilient, economically efficient energy communities.

Lo-MARVE: A Low Cost Autonomous Underwater Vehicle for Marine Exploration

Nov 13, 2024

Abstract:This paper presents Low-cost Marine Autonomous Robotic Vehicle Explorer (Lo-MARVE), a novel autonomous underwater vehicle (AUV) designed to provide a low cost solution for underwater exploration and environmental monitoring in shallow water environments. Lo-MARVE offers a cost-effective alternative to existing AUVs, featuring a modular design, low-cost sensors, and wireless communication capabilities. The total cost of Lo-MARVE is approximately EUR 500. Lo-MARVE is developed using the Raspberry Pi 4B microprocessor, with control software written in Python. The proposed AUV was validated through field testing outside of a laboratory setting, in the freshwater environment of the River Corrib in Galway, Ireland. This demonstrates its ability to navigate autonomously, collect data, and communicate effectively outside of a controlled laboratory setting. The successful deployment of Lo-MARVE in a real-world environment validates its proof of concept.

A Meta-Learning Approach for Multi-Objective Reinforcement Learning in Sustainable Home Environments

Jul 16, 2024

Abstract:Effective residential appliance scheduling is crucial for sustainable living. While multi-objective reinforcement learning (MORL) has proven effective in balancing user preferences in appliance scheduling, traditional MORL struggles with limited data in non-stationary residential settings characterized by renewable generation variations, where significant context shifts can invalidate previously learned policies. To address these challenges, we extend state-of-the-art MORL algorithms with the meta-learning paradigm, enabling rapid, few-shot adaptation to shifting contexts. Additionally, we employ an auto-encoder (AE)-based unsupervised method to detect environment context changes. We have also developed a residential energy environment to evaluate our method using real-world data from London residential settings. This study not only assesses the application of MORL in residential appliance scheduling but also underscores the effectiveness of meta-learning in energy management. Our top-performing method significantly surpasses the best baseline, with the trained model achieving a 3.28% saving on electricity bills, a 2.74% increase in user comfort, and a 5.9% improvement in expected utility. Additionally, it reduces the sparsity of solutions by 62.44%. Remarkably, these gains were accomplished using 96.71% less training data and 61.1% fewer training steps.

Reinforcement Learning Enabled Peer-to-Peer Energy Trading for Dairy Farms

May 21, 2024

Abstract:Farm businesses are increasingly adopting renewables to enhance energy efficiency and reduce reliance on fossil fuels and the grid. This shift aims to decrease dairy farms' dependence on traditional electricity grids by enabling the sale of surplus renewable energy in Peer-to-Peer markets. However, the dynamic nature of farm communities poses challenges, requiring specialized algorithms for P2P energy trading. To address this, the Multi-Agent Peer-to-Peer Dairy Farm Energy Simulator (MAPDES) has been developed, providing a platform to experiment with Reinforcement Learning techniques. The simulations demonstrate significant cost savings, including a 43% reduction in electricity expenses, a 42% decrease in peak demand, and a 1.91% increase in energy sales compared to baseline scenarios lacking peer-to-peer energy trading or renewable energy sources.

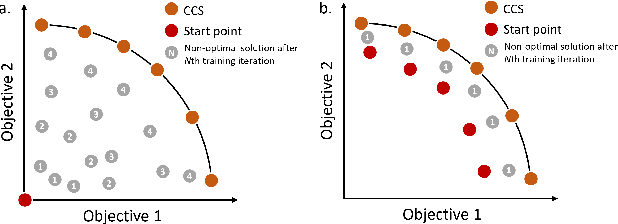

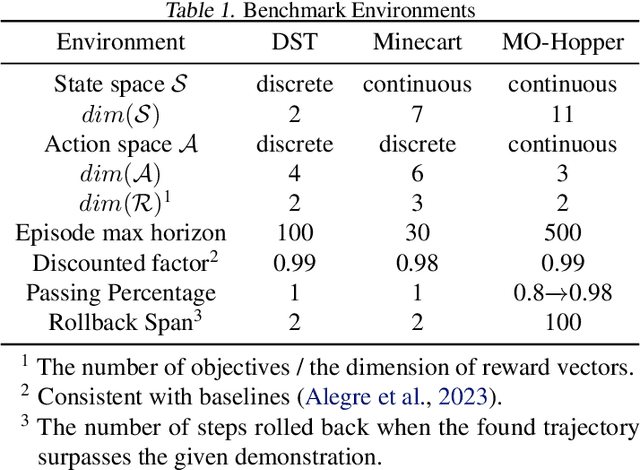

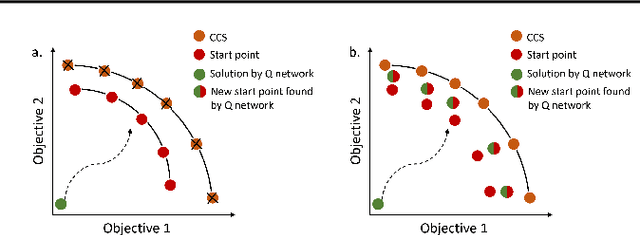

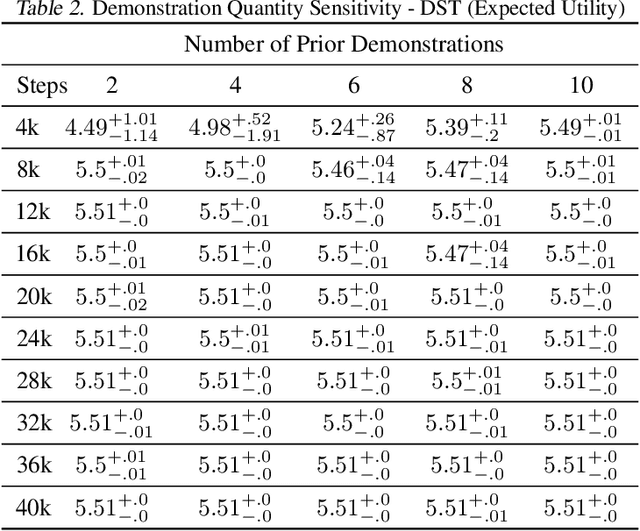

Demonstration Guided Multi-Objective Reinforcement Learning

Apr 05, 2024

Abstract:Multi-objective reinforcement learning (MORL) is increasingly relevant due to its resemblance to real-world scenarios requiring trade-offs between multiple objectives. Catering to diverse user preferences, traditional reinforcement learning faces amplified challenges in MORL. To address the difficulty of training policies from scratch in MORL, we introduce demonstration-guided multi-objective reinforcement learning (DG-MORL). This novel approach utilizes prior demonstrations, aligns them with user preferences via corner weight support, and incorporates a self-evolving mechanism to refine suboptimal demonstrations. Our empirical studies demonstrate DG-MORL's superiority over existing MORL algorithms, establishing its robustness and efficacy, particularly under challenging conditions. We also provide an upper bound of the algorithm's sample complexity.

A Reinforcement Learning Approach to Dairy Farm Battery Management using Q Learning

Mar 19, 2024

Abstract:Dairy farming consumes a significant amount of energy, making it an energy-intensive sector within agriculture. Integrating renewable energy generation into dairy farming could help address this challenge. Effective battery management is important for integrating renewable energy generation. Managing battery charging and discharging poses significant challenges because of fluctuations in electrical consumption, the intermittent nature of renewable energy generation, and fluctuations in energy prices. Artificial Intelligence (AI) has the potential to significantly improve the use of renewable energy in dairy farming; however, there is limited research conducted in this particular domain. This research considers Ireland as a case study as it works towards attaining its 2030 energy strategy centered on the utilization of renewable sources. This study proposes a Q-learning-based algorithm for scheduling battery charging and discharging in a dairy farm setting. This research also explores the effect of the proposed algorithm by adding wind generation data and considering additional case studies. The proposed algorithm reduces the cost of imported electricity from the grid by 13.41%, peak demand by 2%, and 24.49% when utilizing wind generation. These results underline how reinforcement learning is highly effective in managing batteries in the dairy farming sector.

Inferring Preferences from Demonstrations in Multi-Objective Residential Energy Management

Jan 15, 2024

Abstract:It is often challenging for a user to articulate their preferences accurately in multi-objective decision-making problems. Demonstration-based preference inference (DemoPI) is a promising approach to mitigate this problem. Understanding the behaviours and values of energy customers is an example of a scenario where preference inference can be used to gain insights into the values of energy customers with multiple objectives, e.g. cost and comfort. In this work, we applied the state-of-the-art DemoPI method, i.e., the dynamic weight-based preference inference (DWPI) algorithm, in a multi-objective residential energy consumption setting to infer preferences from energy consumption demonstrations by simulated users following a rule-based approach. According to our experimental results, the DWPI model achieves accurate demonstration-based preference inference in three scenarios. These advancements enhance the usability and effectiveness of multi-objective reinforcement learning (MORL) in energy management, enabling more intuitive and user-friendly preference specifications, and opening the door for DWPI to be applied in real-world settings.

Go-Explore for Residential Energy Management

Jan 15, 2024

Abstract:Reinforcement learning is commonly applied in residential energy management, particularly for optimizing energy costs. However, RL agents often face challenges when dealing with deceptive and sparse rewards in the energy control domain, especially with stochastic rewards. In such situations, thorough exploration becomes crucial for learning an optimal policy. Unfortunately, the exploration mechanism can be misled by deceptive reward signals, making thorough exploration difficult. Go-Explore is a family of algorithms which combines planning methods and reinforcement learning methods to achieve efficient exploration. We use the Go-Explore algorithm to solve the cost-saving task in residential energy management problems and achieve an improvement of up to 19.84% compared to well-known reinforcement learning algorithms.
