Karl Bäckström

Distribution Network Fault Prediction Utilising Protection Relay Disturbance Recordings And Machine Learning

Jun 22, 2023

Abstract: As society becomes increasingly reliant on electricity, the reliability requirements for electricity supply continue to rise. In response, transmission/distribution system operators (T/DSOs) must improve their networks and operational practices to reduce the number of interruptions, and enhance their fault localization, isolation, and supply restoration processes to minimize fault duration. This paper proposes a machine-learning-based fault prediction method that aims to predict incipient faults, allowing T/DSOs to take action before the fault occurs and prevent customer outages.

Controlled time series generation for automotive software-in-the-loop testing using GANs

Feb 18, 2020

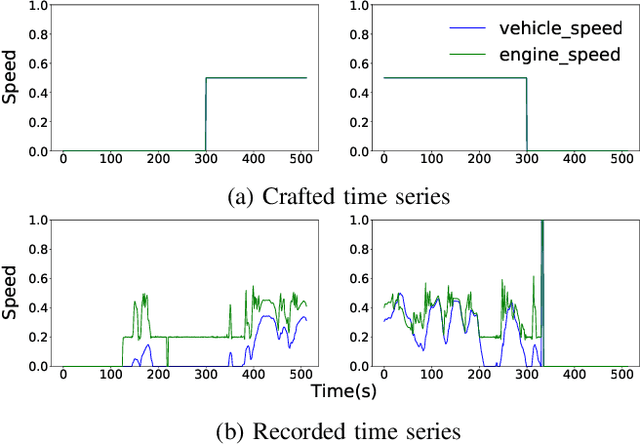

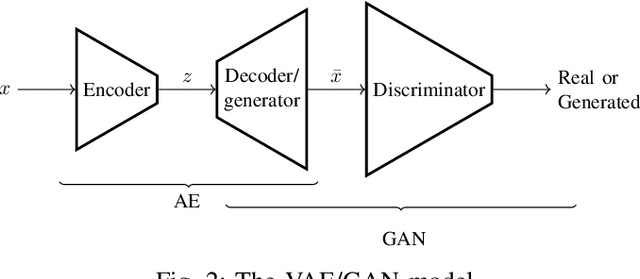

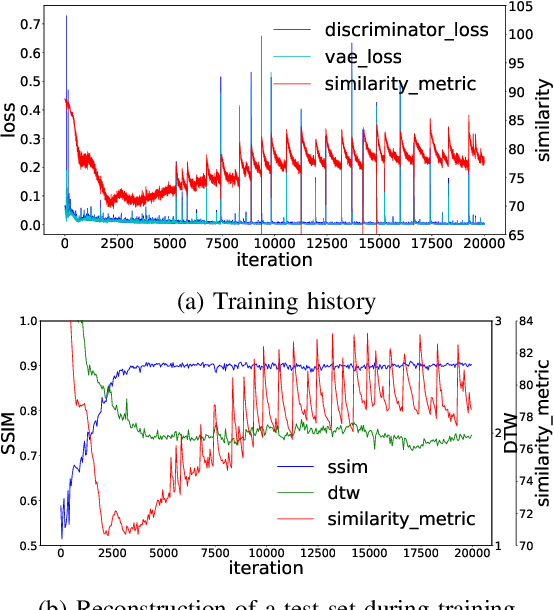

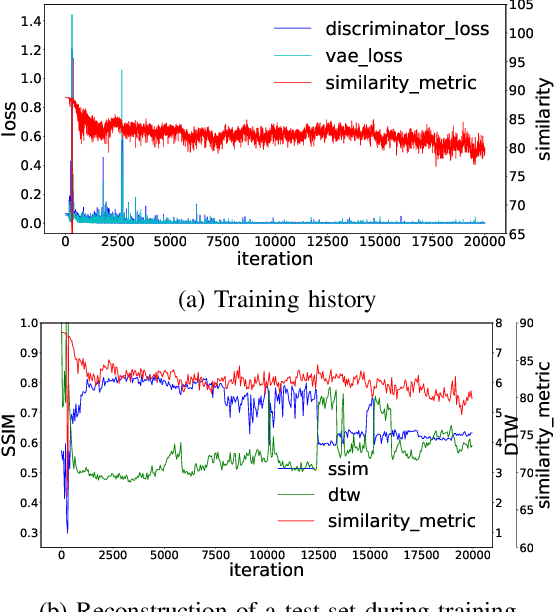

Abstract: Testing automotive mechatronic systems partly uses the software-in-the-loop approach, where systematically covering the inputs of the system under test remains a major challenge. In current practice, there are two major techniques of input stimulation. One approach is to craft input sequences, which eases control and feedback of the test process but falls short of exposing the system to realistic scenarios. The other is to replay sequences recorded from field operations, which accounts for reality but requires collecting a well-labeled dataset of sufficient capacity for widespread use, which is expensive. This work applies the well-known unsupervised learning framework of Generative Adversarial Networks (GANs) to learn from an unlabeled dataset of recorded in-vehicle signals and uses it to generate synthetic input stimuli. Additionally, a metric-based linear interpolation algorithm is demonstrated, which guarantees that generated stimuli follow a customizable similarity relationship with specified references. This combination of techniques enables controlled generation of a rich range of meaningful and realistic input patterns, improving virtual test coverage and reducing the need for expensive field tests.
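
The idea of steering generated stimuli toward a chosen similarity to a reference can be illustrated with a minimal sketch. This is not the paper's algorithm: it assumes a hypothetical toy linear `toy_generator` in place of a trained GAN generator, Euclidean distance as the metric, and a distance that grows monotonically along the interpolation path, so that a simple bisection (`find_alpha`, also a hypothetical name) can pick the interpolation weight.

```python
import numpy as np

def lerp(z_a, z_b, alpha):
    """Linear interpolation between two latent vectors."""
    return (1.0 - alpha) * z_a + alpha * z_b

def find_alpha(generator, z_a, z_b, target, tol=1e-6, iters=60):
    """Bisect for the alpha whose output lies at distance `target` from G(z_a).

    Assumption: the distance to the reference output increases
    monotonically with alpha on [0, 1].
    """
    ref = generator(z_a)
    lo, hi = 0.0, 1.0
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        dist = np.linalg.norm(generator(lerp(z_a, z_b, mid)) - ref)
        if abs(dist - target) < tol:
            return mid
        if dist < target:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

# Toy stand-in for a trained generator (assumption): a fixed linear map.
rng = np.random.default_rng(0)
W = rng.normal(size=(16, 4))

def toy_generator(z):
    return W @ z

z_a, z_b = rng.normal(size=4), rng.normal(size=4)
full = np.linalg.norm(toy_generator(z_b) - toy_generator(z_a))

# Ask for a stimulus a quarter of the way (in output distance) from the reference.
alpha = find_alpha(toy_generator, z_a, z_b, target=0.25 * full)
```

With a real, nonlinear generator the monotonicity assumption may not hold, in which case a search over a grid of `alpha` values would be a safer starting point.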

MindTheStep-AsyncPSGD: Adaptive Asynchronous Parallel Stochastic Gradient Descent

Nov 08, 2019

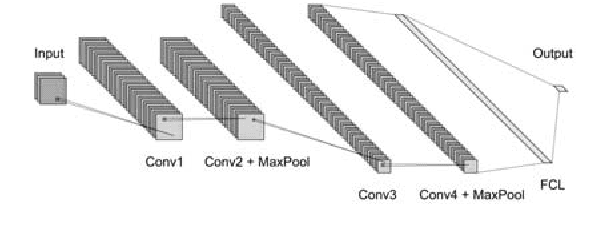

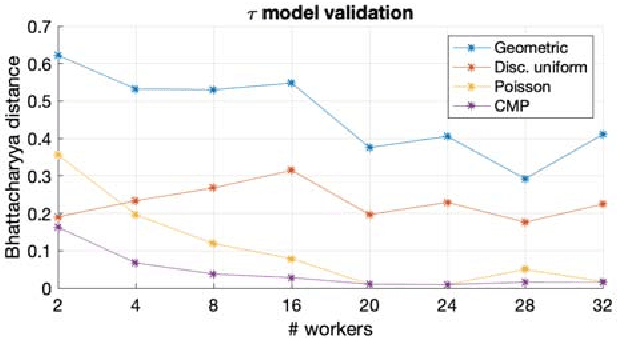

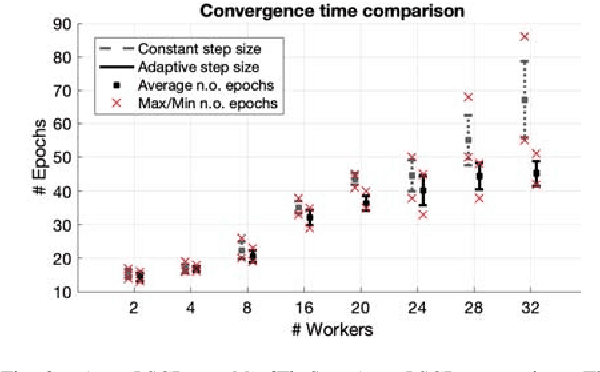

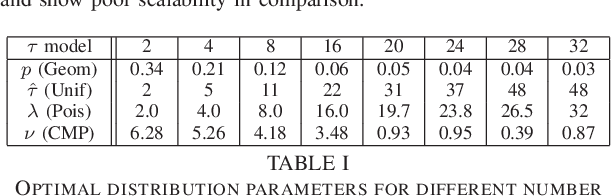

Abstract: Stochastic Gradient Descent (SGD) is very useful in optimization problems with high-dimensional non-convex target functions, and hence constitutes an important component of several Machine Learning and Data Analytics methods. Recently there has been significant work on understanding the parallelism inherent to SGD and its convergence properties. Asynchronous parallel SGD (AsyncPSGD) has received particular attention, due to observed performance benefits. On the other hand, asynchrony implies inherent challenges in understanding the execution of the algorithm and its convergence, stemming from the fact that the contribution of a thread might be based on an old (stale) view of the state. In this work we aim to deepen the understanding of AsyncPSGD in order to increase the statistical efficiency in the presence of stale gradients. We propose new models for capturing the nature of the staleness distribution in a practical setting. Using the proposed models, we derive a staleness-adaptive SGD framework, MindTheStep-AsyncPSGD, for adapting the step size in an online fashion, which provably reduces the negative impact of asynchrony. Moreover, we provide general convergence time bounds for a wide class of staleness-adaptive step size strategies for convex target functions. We also provide a detailed empirical study, showing how our approach yields faster convergence for deep learning applications.
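
To make the notion of a staleness-adaptive step size concrete, here is a minimal single-threaded sketch. It does not reproduce the MindTheStep-AsyncPSGD step-size rule: the `1 / (1 + staleness)` damping, the uniform staleness model, and the names `adaptive_step` and `simulate` are all assumptions chosen for illustration.

```python
import math
import random

def adaptive_step(base_step: float, staleness: int) -> float:
    """Shrink the step as the gradient gets staler.

    The 1 / (1 + staleness) damping is an illustrative assumption,
    not the rule derived in the paper.
    """
    return base_step / (1.0 + staleness)

def simulate(steps=200, base_step=0.4, max_staleness=8, adaptive=True, seed=0):
    """Minimise f(x) = x^2 / 2 using gradients computed on stale iterates.

    Staleness is drawn uniformly at random (an assumption) to mimic
    threads that read an old view of the shared state.
    """
    rng = random.Random(seed)
    history = [5.0]  # all iterates so far; history[-1] is the current state
    for _ in range(steps):
        tau = rng.randint(0, min(max_staleness, len(history) - 1))
        stale_x = history[-1 - tau]   # the view the "thread" actually read
        grad = stale_x                # gradient of x^2 / 2 at the stale view
        step = adaptive_step(base_step, tau) if adaptive else base_step
        history.append(history[-1] - step * grad)
    return abs(history[-1])

final_adaptive = simulate(adaptive=True)
final_constant = simulate(adaptive=False)
```

Comparing `final_adaptive` with `final_constant` for different values of `base_step` and `max_staleness` gives a rough feel for how damping stale updates affects convergence on this toy problem.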
