Karen Eguiazarian

Geometry-Aware Video Inpainting for Joint Headset Occlusion Removal and Face Reconstruction in Social XR

Aug 17, 2025Abstract:Head-mounted displays (HMDs) are essential for experiencing extended reality (XR) environments and observing virtual content. However, they obscure the upper part of the user's face, complicating external video recording and significantly impacting social XR applications such as teleconferencing, where facial expressions and eye gaze details are crucial for creating an immersive experience. This study introduces a geometry-aware learning-based framework to jointly remove HMD occlusions and reconstruct complete 3D facial geometry from RGB frames captured from a single viewpoint. The method integrates a GAN-based video inpainting network, guided by dense facial landmarks and a single occlusion-free reference frame, to restore missing facial regions while preserving identity. Subsequently, a SynergyNet-based module regresses 3D Morphable Model (3DMM) parameters from the inpainted frames, enabling accurate 3D face reconstruction. Dense landmark optimization is incorporated throughout the pipeline to improve both the inpainting quality and the fidelity of the recovered geometry. Experimental results demonstrate that the proposed framework can successfully remove HMDs from RGB facial videos while maintaining facial identity and realism, producing photorealistic 3D face geometry outputs. Ablation studies further show that the framework remains robust across different landmark densities, with only minor quality degradation under sparse landmark configurations.

Headset: Human emotion awareness under partial occlusions multimodal dataset

Feb 14, 2024

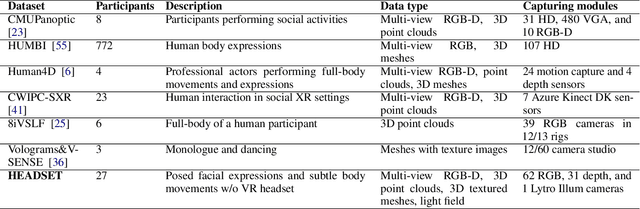

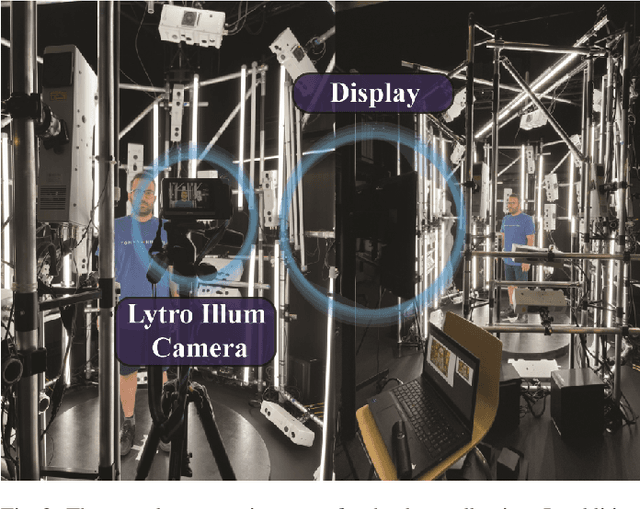

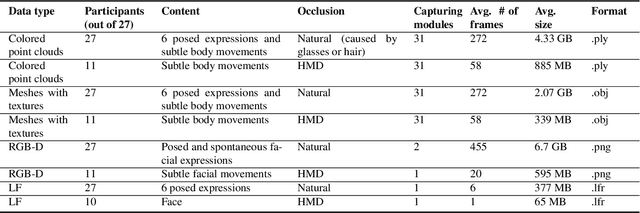

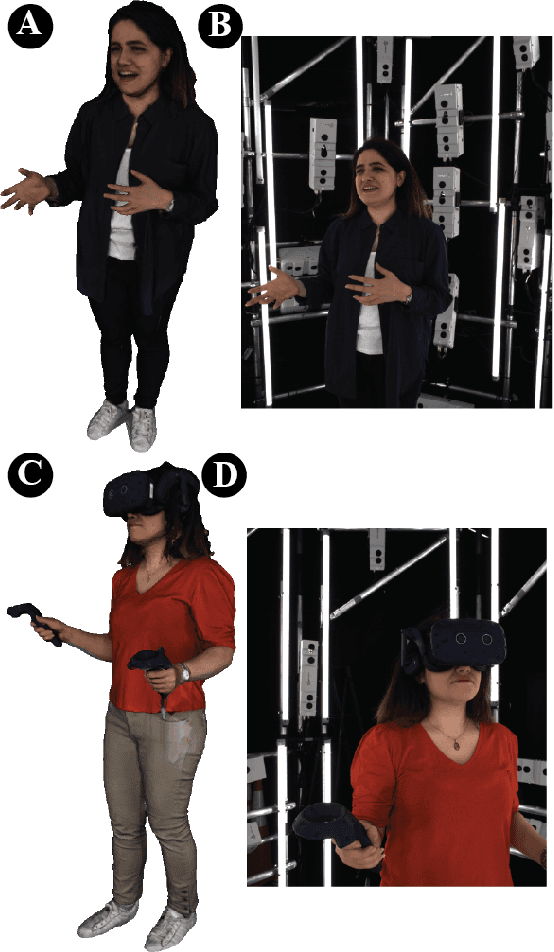

Abstract:The volumetric representation of human interactions is one of the fundamental domains in the development of immersive media productions and telecommunication applications. Particularly in the context of the rapid advancement of Extended Reality (XR) applications, this volumetric data has proven to be an essential technology for future XR elaboration. In this work, we present a new multimodal database to help advance the development of immersive technologies. Our proposed database provides ethically compliant and diverse volumetric data, in particular 27 participants displaying posed facial expressions and subtle body movements while speaking, plus 11 participants wearing head-mounted displays (HMDs). The recording system consists of a volumetric capture (VoCap) studio, including 31 synchronized modules with 62 RGB cameras and 31 depth cameras. In addition to textured meshes, point clouds, and multi-view RGB-D data, we use one Lytro Illum camera for providing light field (LF) data simultaneously. Finally, we also provide an evaluation of our dataset employment with regard to the tasks of facial expression classification, HMDs removal, and point cloud reconstruction. The dataset can be helpful in the evaluation and performance testing of various XR algorithms, including but not limited to facial expression recognition and reconstruction, facial reenactment, and volumetric video. HEADSET and its all associated raw data and license agreement will be publicly available for research purposes.

Hybrid Diffractive Optics Design via Hardware-in-the-Loop Methodology for Achromatic Extended-Depth-of-Field Imaging

Mar 30, 2022

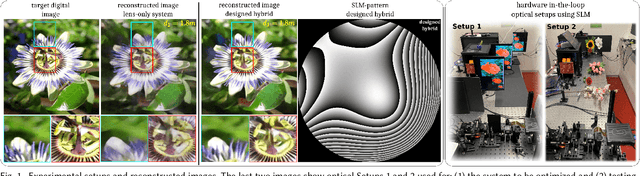

Abstract:End-to-end optimization of diffractive optical elements (DOEs) profile through a digital differentiable model combined with computational imaging have gained an increasing attention in emerging applications due to the compactness of resultant physical setups. Despite recent works have shown the potential of this methodology to design optics, its performance in physical setups is still limited and affected by manufacturing artifacts of DOE, mismatch between simulated and resultant experimental point spread functions, and calibration errors. Additionally, the computational burden of the digital differentiable model to effectively design the DOE is increasing, thus limiting the size of the DOE that can be designed. To overcome the above mentioned limitations, the broadband imaging system with phase-only spatial light modulator (SLM) as an encoded DOE is proposed and developed in this paper. A co-design of the SLM phase pattern and image reconstruction algorithm is produced following the end-to-end strategy, using for optimization a convolutional neural network equipped with quantitative and qualitative loss functions. The optics of the imaging system is hybrid consisting of SLM as DOE and refractive lens. SLM phase-pattern is optimized by applying the Hardware-in-the-loop technique, which helps to eliminate the mismatch between numerical modeling and physical reality of image formation as light propagation is not numerically modeled but is physically done. In our experiments, the hybrid optics is implemented by the optical projection of the SLM phase-pattern on a lens plane for a depth range 0.4-1.9m. Comparison with compound multi-lens optics such as Sony A7 III and iPhone Xs Max cameras show that the proposed system is advanced in all-in-focus sharp imaging.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge