Karan Grover

Privado: Practical and Secure DNN Inference

Oct 01, 2018

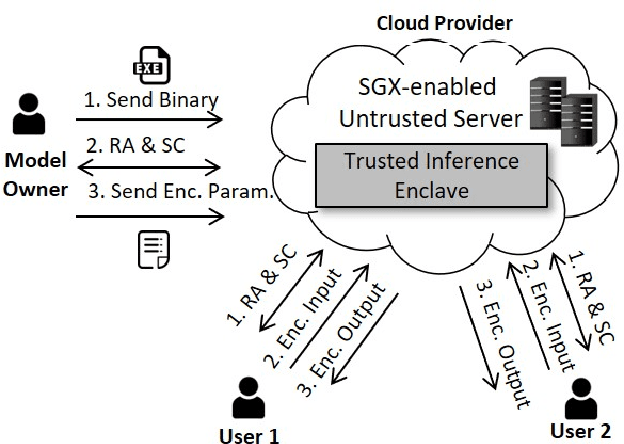

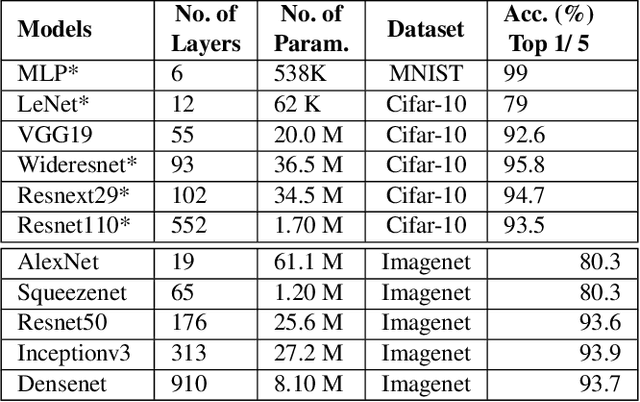

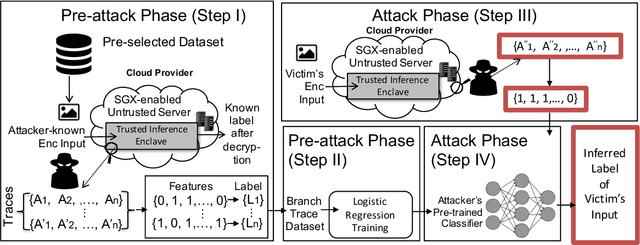

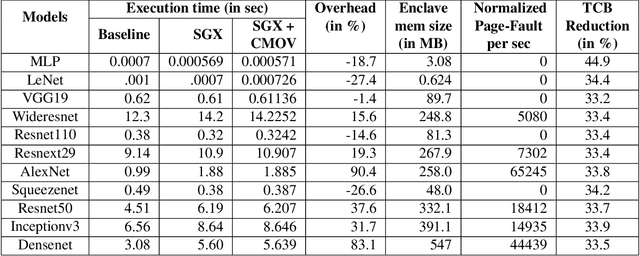

Abstract:Recently, cloud providers have extended support for trusted hardware primitives such as Intel SGX. Simultaneously, the field of deep learning is seeing enormous innovation and increase in adoption. In this paper, we therefore ask the question: "Can third-party cloud services use SGX to provide practical, yet secure DNN Inference-as-a-service? " Our work addresses the three main challenges that SGX-based DNN inferencing faces, namely, security, ease-of-use, and performance. We first demonstrate that side-channel based attacks on DNN models are indeed possible. We show that, by observing access patterns, we can recover inputs to the DNN model. This motivates the need for Privado, a system we have designed for secure inference-as-a-service. Privado is input-oblivious: it transforms any deep learning framework written in C/C++ to be free of input-dependent access patterns. Privado is fully-automated and has a low TCB: with zero developer effort, given an ONNX description, it generates compact C code for the model which can run within SGX-enclaves. Privado has low performance overhead: we have used Privado with Torch, and have shown its overhead to be 20.77\% on average on 10 contemporary networks.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge