Kaoru Amano

MAME: Multidimensional Adaptive Metamer Exploration with Human Perceptual Feedback

Mar 17, 2025

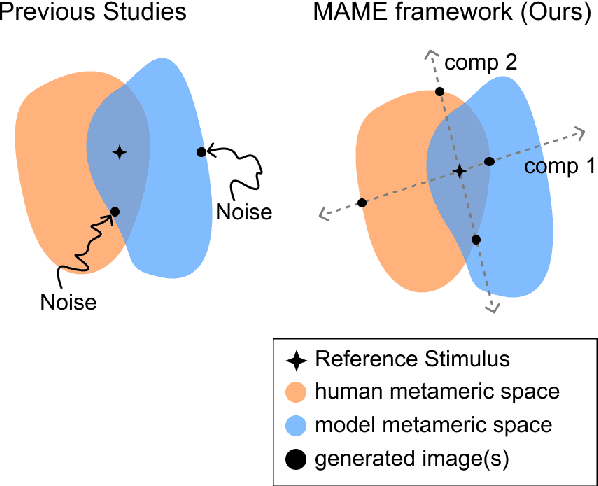

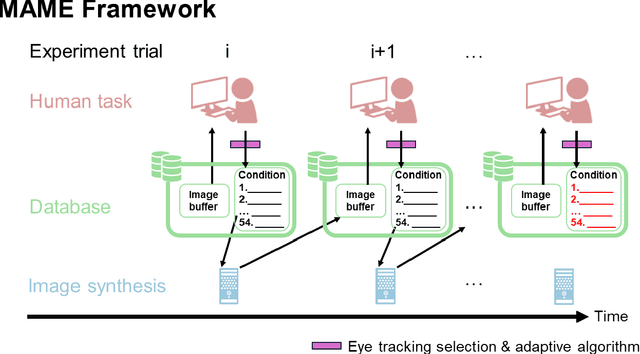

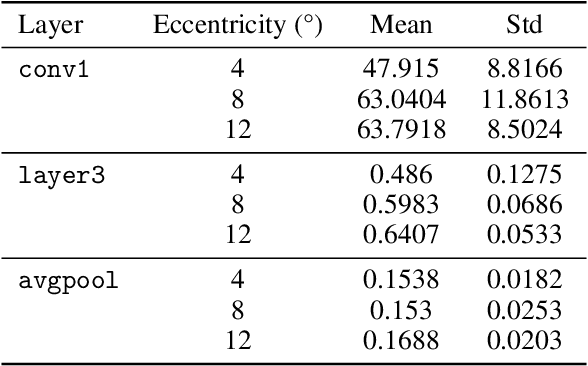

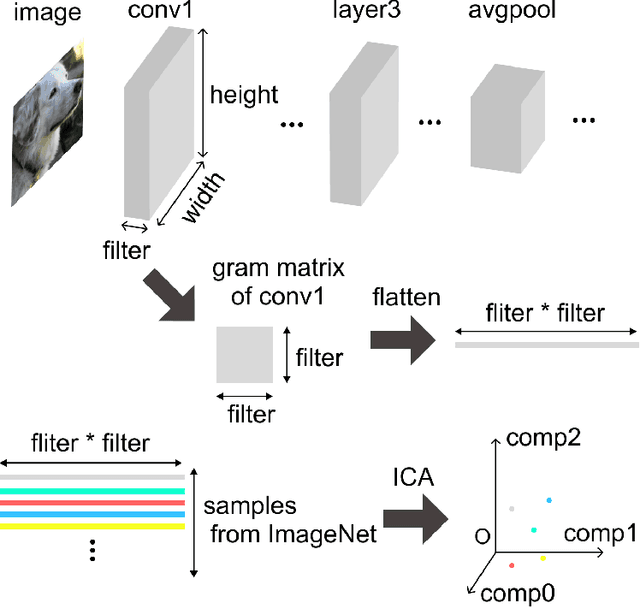

Abstract: Alignment between human brain networks and artificial models is actively studied in machine learning and neuroscience. A widely adopted approach to explore their functional alignment is to identify metamers for both humans and models. Metamers are input stimuli that are physically different but equivalent within a given system. If a model's metameric space completely matched the human metameric space, the model would achieve functional alignment with humans. However, conventional methods lack direct ways to search for human metamers. Instead, researchers first develop biologically inspired models and then infer human metamers indirectly by testing whether model metamers also appear as metamers to humans. Here, we propose the Multidimensional Adaptive Metamer Exploration (MAME) framework, which enables direct high-dimensional exploration of human metameric space. MAME leverages online image generation guided by human perceptual feedback. Specifically, it modulates reference images across multiple dimensions by leveraging hierarchical responses from convolutional neural networks (CNNs). Generated images are presented to participants, whose perceptual discriminability is assessed in a behavioral task. Based on participants' responses, subsequent image generation parameters are adaptively updated online. Using our MAME framework, we successfully measured a human metameric space of over fifty dimensions within a single experiment. Experimental results showed that human discrimination sensitivity was lower for metameric images based on low-level features than for those based on high-level features, a difference that image contrast metrics could not explain. This finding suggests that the model computes low-level information that is not essential for human perception. Our framework has the potential to contribute to developing interpretable AI and to understanding brain function in neuroscience.
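
The adaptive loop described in the abstract (present a modulated image, collect a discrimination response, update the generation parameter) can be illustrated with a toy simulation. The sketch below is not the MAME implementation: it assumes an interleaved 1-up/2-down staircase per dimension, replaces the CNN-based image generation with a single scalar modulation level, and replaces the participant with a simulated observer. The function name `run_staircases` and all parameter values are hypothetical.

```python
import numpy as np

def run_staircases(true_thresholds, n_trials=2000, start=1.0, step=0.05, seed=0):
    """Interleaved 1-up/2-down staircases, one per dimension (illustrative only).

    true_thresholds: hypothetical per-dimension thresholds of a simulated observer.
    Returns the mean of the last 20 visited levels for each dimension.
    """
    rng = np.random.default_rng(seed)
    n_dims = len(true_thresholds)
    level = np.full(n_dims, start, dtype=float)   # current modulation magnitude
    streak = np.zeros(n_dims, dtype=int)          # consecutive correct responses
    history = [[] for _ in range(n_dims)]

    for _ in range(n_trials):
        d = rng.integers(n_dims)                  # dimension probed on this trial
        # Simulated observer (assumption): accuracy rises from chance (0.5)
        # toward 1.0 as the modulation level exceeds the threshold.
        z = (level[d] - true_thresholds[d]) / 0.05
        p_correct = 0.5 + 0.5 / (1.0 + np.exp(-z))
        correct = rng.random() < p_correct

        if correct:
            streak[d] += 1
            if streak[d] == 2:                    # two correct in a row: make it harder
                level[d] = max(level[d] - step, 0.0)
                streak[d] = 0
        else:                                     # one error: make it easier
            level[d] += step
            streak[d] = 0
        history[d].append(level[d])

    return np.array([np.mean(h[-20:]) for h in history])

if __name__ == "__main__":
    print(run_staircases(np.array([0.2, 0.8])))
```

Each dimension's level settles near the point where the simulated observer can just discriminate the modulated image from the reference, which is the kind of per-dimension boundary a metameric-space measurement would aim to estimate.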

BrainCodec: Neural fMRI codec for the decoding of cognitive brain states

Oct 06, 2024

Abstract: Recently, leveraging big data in deep learning has led to significant performance improvements, as confirmed in applications like mental state decoding using fMRI data. However, fMRI datasets remain relatively small in scale, and the inherent issue of low signal-to-noise ratios (SNR) in fMRI data further exacerbates these challenges. To address this, we apply compression techniques as a preprocessing step for fMRI data. We propose BrainCodec, a novel fMRI codec inspired by the neural audio codec. We evaluated BrainCodec's compression capability in mental state decoding, demonstrating further improvements over previous methods. Furthermore, we analyzed the latent representations obtained through BrainCodec, elucidating the similarities and differences between task and resting state fMRI and highlighting the interpretability of BrainCodec. Additionally, we demonstrated that fMRI reconstructions using BrainCodec can enhance the visibility of brain activity by achieving higher SNR, suggesting its potential as a novel denoising method. Our study shows that BrainCodec not only enhances performance over previous methods but also offers new analytical possibilities for neuroscience. Our code, dataset, and model weights are available at https://github.com/amano-k-lab/BrainCodec.
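
For readers unfamiliar with neural audio codecs, the sketch below shows residual vector quantization (RVQ), a compression scheme commonly used in such codecs. Whether BrainCodec uses exactly this scheme is an assumption here, not something the abstract states. The codebooks are random rather than learned, and the names `make_codebooks`, `rvq_encode`, and `rvq_decode` are hypothetical.

```python
import numpy as np

def make_codebooks(n_stages, n_codes, dim, seed=0):
    """Random stand-in codebooks; a real codec would learn these."""
    rng = np.random.default_rng(seed)
    books = []
    for s in range(n_stages):
        cb = rng.normal(scale=0.5 ** s, size=(n_codes, dim))
        cb[0] = 0.0  # zero entry, so a stage can never increase the residual
        books.append(cb)
    return books

def rvq_encode(x, codebooks):
    """Quantize each row of x stage by stage, always coding the current residual."""
    residual = x.astype(float).copy()
    codes = []
    for cb in codebooks:
        # Squared distance from every residual vector to every codebook entry.
        dists = ((residual[:, None, :] - cb[None, :, :]) ** 2).sum(axis=-1)
        idx = dists.argmin(axis=1)
        codes.append(idx)
        residual = residual - cb[idx]
    return np.stack(codes, axis=1)  # shape: (n_vectors, n_stages)

def rvq_decode(codes, codebooks, n_stages=None):
    """Reconstruct by summing the selected entries of the first n_stages codebooks."""
    n_stages = codes.shape[1] if n_stages is None else n_stages
    out = np.zeros((codes.shape[0], codebooks[0].shape[1]))
    for s in range(n_stages):
        out += codebooks[s][codes[:, s]]
    return out

if __name__ == "__main__":
    x = np.random.default_rng(1).normal(size=(32, 8))
    books = make_codebooks(n_stages=3, n_codes=16, dim=8)
    codes = rvq_encode(x, books)
    for k in (1, 2, 3):
        err = np.mean((x - rvq_decode(codes, books, k)) ** 2)
        print(k, err)
```

Each input vector is stored as a few small integer indices, and decoding with more stages gives a reconstruction that is at least as close to the input as decoding with fewer.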
