Kannan A

Hybrid Convolution Neural Network Integrated with Pseudo-Newton Boosting for Lumbar Spine Degeneration Detection

Nov 17, 2025

Abstract: This paper proposes an enhanced model architecture for classifying lumbar spine degeneration from DICOM images. The hybrid approach integrates EfficientNet and VGG19 with custom-designed components. Unlike traditional transfer learning methods, the proposed model incorporates a Pseudo-Newton Boosting layer and a Sparsity-Induced Feature Reduction Layer, which together form a multi-tiered framework that improves feature selection and representation. The Pseudo-Newton Boosting layer adaptively adjusts feature weights to emphasize the finer anatomical features that are mostly left out in a transfer learning setup. The Sparsity-Induced Layer removes redundancy among the learned features, producing lean yet robust representations of lumbar spine pathology. The architecture is novel in that it overcomes the constraints of traditional transfer learning, especially in the high-dimensional context of medical images, and it achieves a significant performance boost over the baseline model, EfficientNet, reaching a precision of 0.9, recall of 0.861, F1 score of 0.88, loss of 0.18, and accuracy of 88.1%. This work presents the architectures, preprocessing pipeline, and experimental results. The results contribute to the development of automated diagnostic tools for medical images.
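The reported F1 score can be checked against the reported precision and recall, since F1 is their harmonic mean. The short sketch below does that arithmetic; the helper name `f1_score` is illustrative and is not taken from the paper.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (0.0 when both are zero)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Precision and recall as reported in the abstract above.
f1 = f1_score(0.9, 0.861)
print(round(f1, 2))  # 0.88
```

The result agrees with the F1 score of 0.88 stated in the abstract.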

A Novel Adaptive Hybrid Focal-Entropy Loss for Enhancing Diabetic Retinopathy Detection Using Convolutional Neural Networks

Nov 16, 2024

Abstract: Diabetic retinopathy is a leading cause of blindness around the world and demands precise AI-based diagnostic tools. Traditional loss functions in multi-class classification, such as Categorical Cross-Entropy (CCE), are very common but break down under class imbalance, especially when classes are inherently challenging or overlapping, which leads to biased and less sensitive models. Because higher-severity classes, such as stage 4 diabetic retinopathy, have far fewer examples than very early stages like class 0, achieving class balance is key. For this purpose, we propose the Adaptive Hybrid Focal-Entropy (AHFE) Loss, which combines the ideas of focal loss and entropy loss with adaptive weighting in order to focus on minority classes and highlight the challenging samples. State-of-the-art models applied to diabetic retinopathy detection with AHFE showed good performance improvements, with ResNet50 reaching 99.79%, DenseNet121 98.86%, Xception 98.92%, MobileNetV2 97.84%, and InceptionV3 93.62% accuracy. This sheds light on how AHFE enhances AI-driven diagnostics for complex and imbalanced medical datasets.
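The abstract does not give the formula for the proposed loss, so the following is only a sketch of the standard class-weighted focal loss that it names as one ingredient, not the authors' method. The function name, the `gamma` default, and the sample probabilities are assumptions chosen for illustration.

```python
import math


def focal_loss(probs, target, gamma=2.0, class_weights=None):
    """Standard focal loss for a single sample (not the paper's loss).

    probs: predicted class probabilities
    target: index of the true class
    gamma: focusing parameter; gamma=0 recovers weighted cross-entropy
    class_weights: optional per-class weights (hypothetical values)
    """
    p_t = max(probs[target], 1e-12)  # clamp to avoid log(0)
    weight = 1.0 if class_weights is None else class_weights[target]
    # (1 - p_t)^gamma shrinks the loss of confidently correct samples.
    return -weight * (1.0 - p_t) ** gamma * math.log(p_t)


easy = [0.9, 0.05, 0.05]  # true class 0 predicted confidently
hard = [0.3, 0.4, 0.3]    # true class 0 predicted poorly

ce_easy = focal_loss(easy, 0, gamma=0.0)  # plain cross-entropy
fl_easy = focal_loss(easy, 0, gamma=2.0)  # strongly down-weighted
fl_hard = focal_loss(hard, 0, gamma=2.0)  # stays comparatively large
print(ce_easy > fl_easy, fl_hard > fl_easy)  # True True
```

Raising the weight of a minority class in `class_weights` scales its loss linearly, which is one common way to counter class imbalance.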

A Channel Attention-Driven Hybrid CNN Framework for Paddy Leaf Disease Detection

Jul 16, 2024

Abstract: Farmers face various challenges in identifying diseases in rice leaves during the early stages of growth, which is a major cause of poor yields. Early and accurate disease identification is therefore important in agriculture to avoid crop loss and improve cultivation. In this research, we propose a novel hybrid deep learning (DL) classifier designed by extending the Squeeze-and-Excitation network architecture with a channel attention mechanism and the Swish ReLU activation function. The channel attention mechanism in our proposed model identifies the most important feature channels required for classification during feature extraction and selection. The dying ReLU problem is mitigated by the Swish ReLU activation function, and the Squeeze-and-Excitation blocks improve information propagation and cross-channel interaction. Upon evaluation, our model achieved a high F1-score of 99.76% and an accuracy of 99.74%, surpassing the performance of existing models. These outcomes demonstrate the potential of state-of-the-art DL techniques in agriculture, contributing to the advancement of more efficient and reliable disease detection systems.
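As a rough illustration of the two building blocks named above, the sketch below implements a generic Squeeze-and-Excitation gate with a Swish activation in plain Python. It is not the authors' architecture: the layer sizes, the weights, and the use of the standard Swish, x * sigmoid(x), in the bottleneck are assumptions, and all function names are hypothetical.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def swish(x):
    """Swish: x * sigmoid(x). Negative inputs still give a nonzero output."""
    return x * sigmoid(x)


def dense(vec, weights):
    """Bias-free fully connected layer; weights is a list of rows."""
    return [sum(w * v for w, v in zip(row, vec)) for row in weights]


def se_block(feature_map, w_reduce, w_expand):
    """feature_map: list of C channels, each an H x W list of lists."""
    # Squeeze: global average pooling gives one number per channel.
    squeezed = [sum(sum(row) for row in ch) / (len(ch) * len(ch[0]))
                for ch in feature_map]
    # Excitation: bottleneck with Swish, then one sigmoid gate per channel.
    hidden = [swish(h) for h in dense(squeezed, w_reduce)]
    gates = [sigmoid(g) for g in dense(hidden, w_expand)]
    # Scale: multiply every value in a channel by that channel's gate.
    scaled = [[[g * v for v in row] for row in ch]
              for ch, g in zip(feature_map, gates)]
    return scaled, gates


# Toy input: two 2x2 channels, reduced to one hidden unit and back.
fmap = [[[1.0, 2.0], [3.0, 4.0]], [[0.0, 0.0], [0.0, 0.0]]]
w_reduce = [[0.5, -0.5]]    # 2 channels -> 1 hidden unit (made-up weights)
w_expand = [[1.0], [-1.0]]  # 1 hidden unit -> 2 channel gates
scaled, gates = se_block(fmap, w_reduce, w_expand)
```

Each gate lies strictly between 0 and 1, so the block reweights channels without changing the shape of the feature map.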

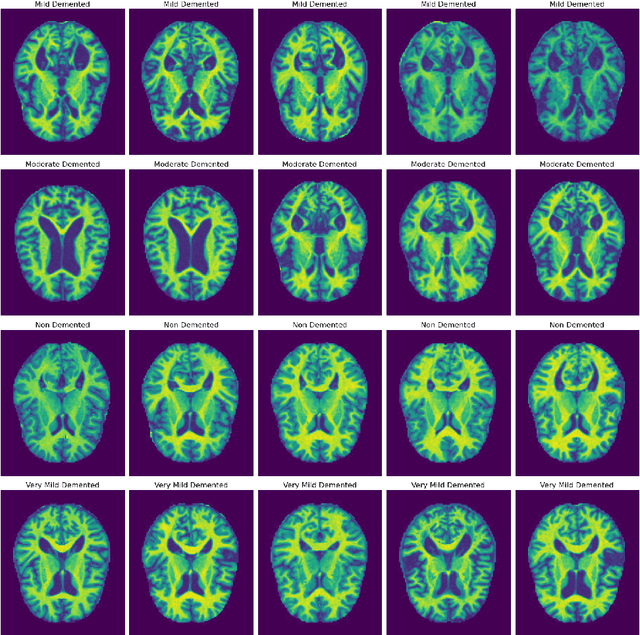

A Dual-Attention Aware Deep Convolutional Neural Network for Early Alzheimer's Detection

Jul 15, 2024

Abstract: Alzheimer's disease (AD) represents the primary form of neurodegeneration, impacting millions of individuals each year and causing progressive cognitive decline. Accurately diagnosing and classifying AD using neuroimaging data presents ongoing challenges in medicine, necessitating advanced interventions that will enhance treatment measures. In this research, we introduce a dual-attention-enhanced deep learning (DL) framework for classifying AD from neuroimaging data. Combined spatial and self-attention mechanisms play a vital role in focusing on neurofibrillary tangles and amyloid plaques in the MRI images, which are difficult to discern with regular imaging techniques. Results demonstrate that our model yielded remarkable performance in comparison to existing state-of-the-art (SOTA) convolutional neural networks (CNNs), with an accuracy of 99.1%. Moreover, it recorded an F1-Score of 99.31%, a precision of 99.24%, and a recall of 99.5%. These results highlight the promise of cutting-edge DL methods in medical diagnostics, contributing to more reliable and efficient healthcare solutions.

Multi-Attention Integrated Deep Learning Frameworks for Enhanced Breast Cancer Segmentation and Identification

Jul 03, 2024

Abstract: Breast cancer poses a profound threat globally, claiming numerous lives each year. Therefore, timely detection is crucial for early intervention and improved chances of survival. Accurately diagnosing and classifying breast tumors using ultrasound images is a persistent challenge in medicine, demanding cutting-edge solutions for improved treatment strategies. This research introduces multi-attention-enhanced deep learning (DL) frameworks designed for the classification and segmentation of breast cancer tumors from ultrasound images. A spatial channel attention mechanism is proposed for segmenting tumors from ultrasound images, utilizing a novel LinkNet DL framework with an InceptionResNet backbone. Following this, the paper proposes a deep convolutional neural network with an integrated multi-attention framework (DCNNIMAF) to classify the segmented tumor as benign, malignant, or normal. In the experiments, the segmentation model recorded an accuracy of 98.1%, with a minimal loss of 0.6%. It also achieved high Intersection over Union (IoU) and Dice Coefficient scores of 96.9% and 97.2%, respectively. Similarly, the classification model attained an accuracy of 99.2%, with a low loss of 0.31%. Furthermore, the classification framework achieved F1-Score, precision, and recall values of 99.1%, 99.3%, and 99.1%, respectively. By offering a robust framework for early detection and accurate classification of breast cancer, this proposed work significantly advances the field of medical image analysis, potentially improving diagnostic precision and patient outcomes.
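For readers unfamiliar with the two segmentation metrics cited above, the sketch below computes Intersection over Union and the Dice coefficient for a pair of binary masks. The function name and the toy masks are illustrative and are not drawn from the paper's data or code.

```python
def iou_and_dice(pred, truth):
    """pred, truth: flat sequences of 0/1 pixel labels of equal length."""
    if len(pred) != len(truth):
        raise ValueError("masks must have the same number of pixels")
    inter = sum(1 for p, t in zip(pred, truth) if p and t)
    pred_area = sum(1 for p in pred if p)
    truth_area = sum(1 for t in truth if t)
    union = pred_area + truth_area - inter
    if union == 0:
        return 1.0, 1.0  # both masks empty: treated as perfect agreement
    iou = inter / union
    dice = 2 * inter / (pred_area + truth_area)
    return iou, dice


# Toy 6-pixel masks: 2 pixels overlap, each mask covers 3 pixels.
pred = [1, 1, 1, 0, 0, 0]
truth = [0, 1, 1, 1, 0, 0]
iou, dice = iou_and_dice(pred, truth)
print(iou, round(dice, 3))  # 0.5 0.667
```

Dice counts the overlap twice in the numerator, so for a single mask pair it is never lower than IoU.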
