Kan Huang

GreenEyes: An Air Quality Evaluating Model based on WaveNet

Dec 08, 2022

Abstract: With rapid industrialization, people are suffering from serious air pollution. Air quality prediction is becoming increasingly important to government policy-making and to people's daily lives. In this paper, we propose GreenEyes, a deep neural network model that consists of a WaveNet-based backbone block for learning representations of sequences and an LSTM with a Temporal Attention module for capturing the hidden interactions between features of multi-channel inputs. To evaluate the effectiveness of our proposed method, we carry out several experiments, including an ablation study, on air quality data that we collected and preprocessed near HKUST. The experimental results show that our model can effectively predict the air quality level of the next timestamp given any segment of the air quality data from the dataset. Our standalone dataset is released at https://github.com/AI-Huang/IAQI_Dataset, and the model and code for this paper are publicly available at https://github.com/AI-Huang/AirEvaluation.
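The abstract names a Temporal Attention module but does not describe its form. As a rough illustration only, the sketch below shows one common way attention over time steps can work: each hidden state is scored against a query vector, the scores are turned into softmax weights, and the states are summed with those weights. The function name `temporal_attention`, the dot-product scoring, and the `query` vector are assumptions made for this example and are not taken from the GreenEyes code.

```python
import math


def temporal_attention(hidden_states, query):
    """Collapse a sequence of hidden vectors into one context vector.

    hidden_states: list of T vectors (e.g. LSTM outputs), each of length D.
    query: length-D scoring vector (hypothetical; learned in a real model).
    """
    # Score each time step by its dot product with the query.
    scores = [sum(h_i * q_i for h_i, q_i in zip(h, query)) for h in hidden_states]

    # Softmax over time, shifted by the max score for numerical stability.
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    weights = [e / total for e in exps]

    # Context vector: attention-weighted sum of the hidden states.
    dim = len(hidden_states[0])
    context = [
        sum(w * h[d] for w, h in zip(weights, hidden_states)) for d in range(dim)
    ]
    return weights, context


# Three time steps with two features each (made-up numbers).
states = [[0.1, 0.0], [0.5, 0.2], [0.9, 0.4]]
weights, context = temporal_attention(states, query=[1.0, 1.0])
print(weights, context)
```

In this toy input the later time steps score higher against the query, so they receive larger weights and dominate the context vector.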

PDNet: Prior-model Guided Depth-enhanced Network for Salient Object Detection

Oct 13, 2018

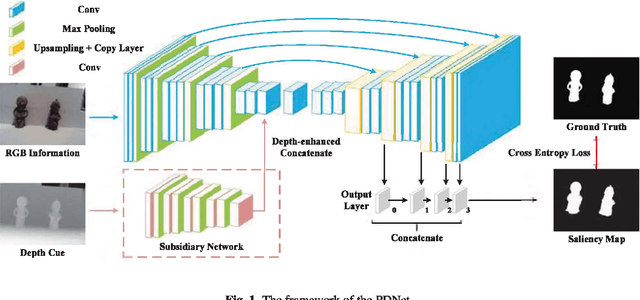

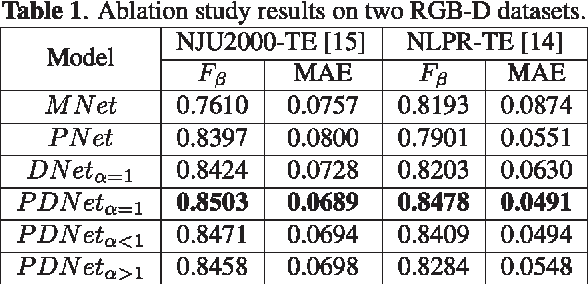

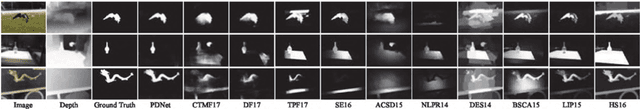

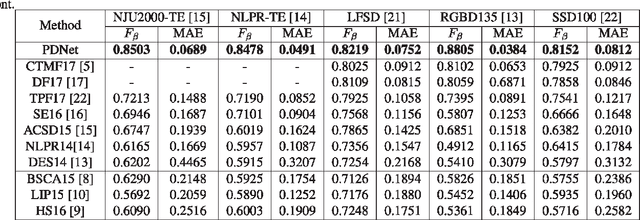

Abstract: Fully convolutional neural networks (FCNs) have shown outstanding performance in many computer vision tasks, including salient object detection. However, two issues still need to be addressed in deep-learning-based saliency detection. One is the lack of a large amount of annotated data for training a network. The other is the lack of robustness when extracting salient objects from images containing complex scenes. In this paper, we present a new architecture, PDNet, a robust prior-model guided depth-enhanced network for RGB-D salient object detection. In contrast to existing works, in which the RGB-D values of image pixels are fed directly to a network, the proposed architecture is composed of a master network for processing RGB values and a sub-network that makes full use of depth cues and incorporates depth-based features into the master network. To overcome the limited size of the labeled RGB-D dataset for training, we employ a large conventional RGB dataset to pre-train the master network, which proves to contribute substantially to the final accuracy. Extensive evaluations over five benchmark datasets demonstrate that our proposed method performs favorably against state-of-the-art approaches.

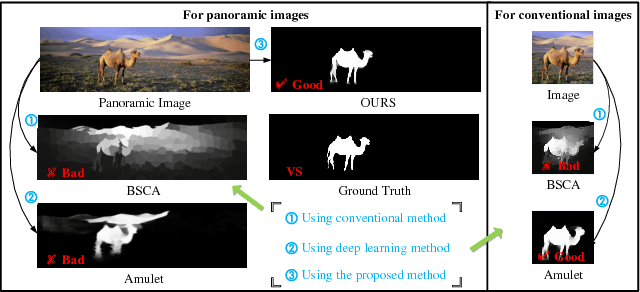

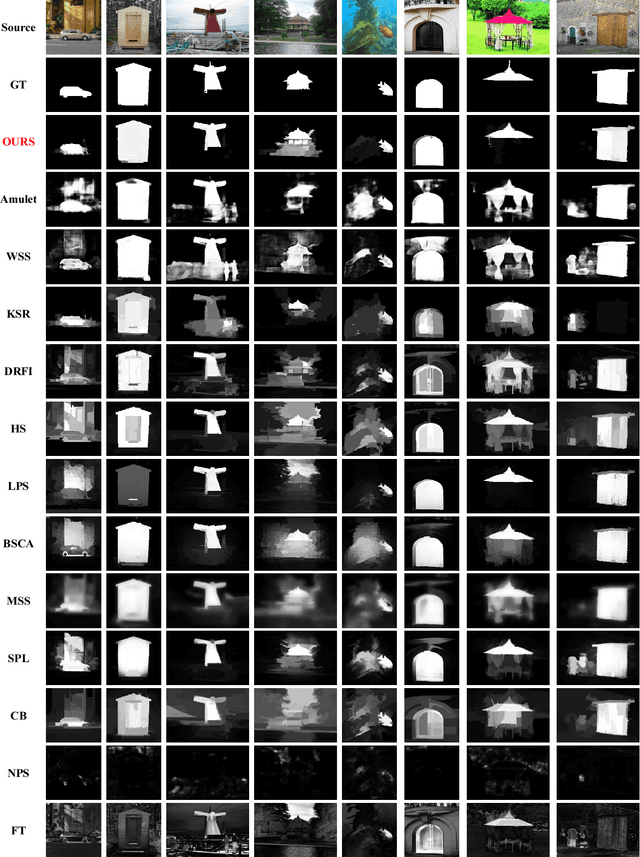

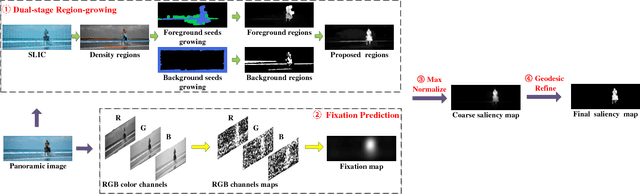

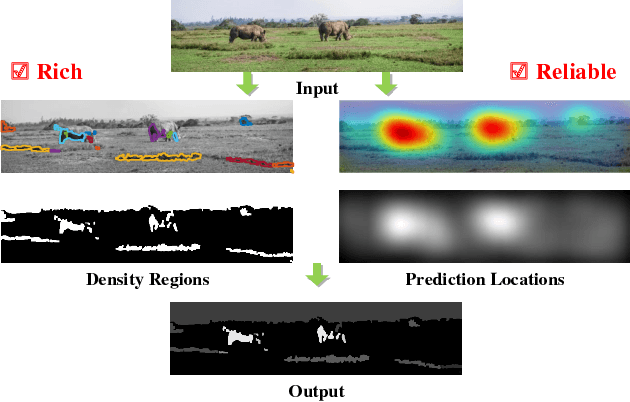

Automatic Salient Object Detection for Panoramic Images Using Region Growing and Fixation Prediction Model

Apr 10, 2018

Abstract: Almost all previous works on saliency detection have been dedicated to conventional images. However, with the surge of panoramic images driven by the rapid development of VR and AR technology, extracting salient content from panoramic images is becoming both more challenging and more valuable. In this paper, we propose a novel bottom-up salient object detection framework for panoramic images. First, we employ a spatial density estimation method, with the help of a region growing algorithm, to roughly extract object proposal regions. Meanwhile, an eye fixation model is used to predict visually attractive parts of the image from the perspective of the human visual search mechanism. Then, the two results are combined by maxima normalization to obtain a coarse saliency map. Finally, a refinement step based on geodesic distance is applied as post-processing to derive the final saliency map. To fairly evaluate the performance of the proposed approach, we introduce a high-quality dataset of panoramic images (SalPan). Extensive evaluations demonstrate the effectiveness of our proposed method on panoramic images and its superiority over other methods.
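The abstract does not spell out the combination step. One plausible reading, sketched below purely as an assumption, is that each map is first scaled by its own maximum so both lie in [0, 1], and the scaled maps are then averaged. The helper names `max_normalize` and `fuse`, the averaging rule, and the toy values are hypothetical and may differ from the operator used in the paper.

```python
def max_normalize(saliency):
    """Scale a 2-D map by its maximum so the peak value becomes 1."""
    peak = max(max(row) for row in saliency)
    if peak <= 0:
        # An all-zero map carries no information; keep it at zero.
        return [[0.0 for _ in row] for row in saliency]
    return [[value / peak for value in row] for row in saliency]


def fuse(map_a, map_b):
    """Average two max-normalized maps (assumed fusion rule)."""
    a, b = max_normalize(map_a), max_normalize(map_b)
    return [
        [(x + y) / 2 for x, y in zip(row_a, row_b)]
        for row_a, row_b in zip(a, b)
    ]


# Toy 2x2 maps on different scales (made-up values).
region_map = [[0, 2], [4, 8]]
fixation_map = [[0.0, 0.5], [0.25, 0.5]]
coarse = fuse(region_map, fixation_map)
print(coarse)
```

Normalizing before fusing keeps the map with the larger raw range from dominating the result.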

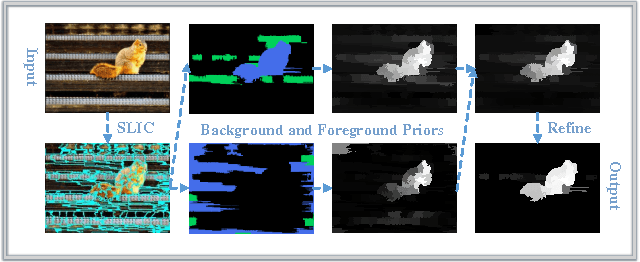

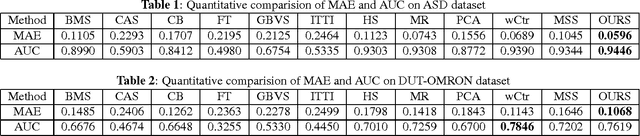

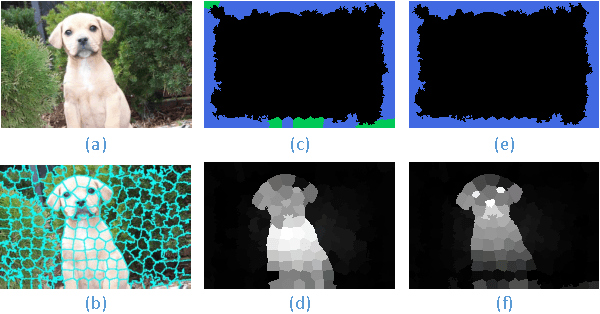

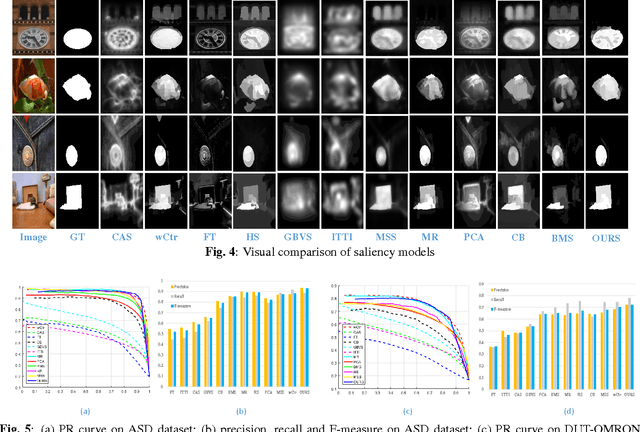

Robust Saliency Detection via Fusing Foreground and Background Priors

Nov 01, 2017

Abstract: Automatic salient object detection has received tremendous attention from the research community and has become an increasingly important tool in many computer vision tasks. This paper proposes a novel bottom-up salient object detection framework that considers both foreground and background cues. First, a series of background and foreground seeds are reliably selected from an image and then used to compute separate saliency maps. Next, the foreground and background saliency maps are combined. Last, a refinement step based on geodesic distance is used to enhance salient regions, yielding the final saliency map. In particular, we provide a robust scheme for seed selection, which contributes substantially to the accuracy of saliency detection. Extensive experimental evaluations demonstrate the effectiveness of our proposed method against other outstanding methods.
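The abstract does not say how the geodesic distance is computed. A common formulation, used here only as an assumed stand-in, treats the image as a 4-connected grid graph whose edge weights are absolute intensity differences and runs Dijkstra's algorithm from a set of seed pixels. The function name `geodesic_distance`, the pixel-level grid, and the toy image are illustrative choices; the paper may operate on superpixels or use a different edge weight.

```python
import heapq


def geodesic_distance(image, seeds):
    """Shortest-path cost from any seed pixel to every pixel.

    image: 2-D list of intensities.
    seeds: list of (row, col) starting pixels with distance zero.
    """
    rows, cols = len(image), len(image[0])
    dist = [[float("inf")] * cols for _ in range(rows)]
    heap = []
    for r, c in seeds:
        dist[r][c] = 0.0
        heapq.heappush(heap, (0.0, r, c))

    while heap:
        d, r, c = heapq.heappop(heap)
        if d > dist[r][c]:
            continue  # Stale queue entry; a shorter path was already found.
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            if 0 <= nr < rows and 0 <= nc < cols:
                # Edge cost: intensity change between neighboring pixels.
                nd = d + abs(image[nr][nc] - image[r][c])
                if nd < dist[nr][nc]:
                    dist[nr][nc] = nd
                    heapq.heappush(heap, (nd, nr, nc))
    return dist


# Toy image: a dark region (0) next to a bright region (5).
image = [
    [0, 0, 5],
    [0, 5, 5],
    [0, 0, 5],
]
dist = geodesic_distance(image, seeds=[(0, 0)])
print(dist)
```

Pixels in the same homogeneous region as the seed stay at distance zero, while pixels across an intensity boundary pay the cost of crossing it, which is what makes this kind of distance useful for sharpening region-level saliency.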
