Kamal Z. Zamli

Study of keyword extraction techniques for Electric Double Layer Capacitor domain using text similarity indexes: An experimental analysis

Nov 13, 2021

Abstract: Keywords play a significant role in retrieving topic-related documents. Keywords assigned by human experts are accurate, but assigning them manually is expensive in both time and resources, which makes automated keyword extraction attractive. Before adopting an automated process, however, it is necessary to check how closely algorithm-generated keywords match expert-provided ones. This paper presents an experimental analysis of similarity scores between expert-provided keywords from the Electric Double Layer Capacitor (EDLC) domain and keywords generated by supervised and unsupervised keyword extraction algorithms. The paper also analyses which text yields better keywords: only the positive sentences or all sentences of the document. The unsupervised algorithms employed are YAKE, TopicRank, MultipartiteRank, and KPMiner; the supervised algorithms are KEA and WINGNUS. Similarity between extracted and expert-provided keywords is assessed with three indexes: Jaccard, cosine, and cosine with word vectors. The experiment shows that MultipartiteRank, measured with the cosine with word vector similarity index, produces the best result, with 92% similarity to the expert-provided keywords. This study can help NLP researchers working in the EDLC domain or on recommender systems select more suitable keyword extraction and similarity index techniques.
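As a rough illustration of two of the similarity indexes named in the abstract, the sketch below computes Jaccard and bag-of-words cosine similarity between two keyword lists. The tokenization (lowercasing and splitting on whitespace), the helper names, and the sample keywords are assumptions made for this example; the paper's own preprocessing and its word-vector variant are not described in the abstract and are not reproduced here.

```python
import math
from collections import Counter


def tokens(keywords):
    """Flatten keyword phrases into lowercase word tokens (assumed preprocessing)."""
    return [word for phrase in keywords for word in phrase.lower().split()]


def jaccard(a, b):
    """Jaccard index: size of the intersection over size of the union of token sets."""
    set_a, set_b = set(a), set(b)
    if not set_a and not set_b:
        return 1.0  # two empty sets are treated as identical
    return len(set_a & set_b) / len(set_a | set_b)


def cosine(a, b):
    """Cosine similarity between term-frequency vectors of two token lists."""
    count_a, count_b = Counter(a), Counter(b)
    dot = sum(count_a[t] * count_b[t] for t in count_a.keys() & count_b.keys())
    norm_a = math.sqrt(sum(v * v for v in count_a.values()))
    norm_b = math.sqrt(sum(v * v for v in count_b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


# Hypothetical keyword lists, invented purely for illustration.
expert = ["activated carbon", "specific capacitance", "electrolyte"]
extracted = ["activated carbon", "capacitance", "electrode"]

print(jaccard(tokens(expert), tokens(extracted)))
print(cosine(tokens(expert), tokens(extracted)))
```

Both measures here reward only exact token overlap, so "electrolyte" and "electrode" count as unrelated. A word-vector-based cosine would instead compare embeddings and could score such near-synonyms as similar.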

Hybrid Henry Gas Solubility Optimization Algorithm with Dynamic Cluster-to-Algorithm Mapping for Search-based Software Engineering Problems

May 31, 2021

Abstract: This paper presents a new variant of the Henry Gas Solubility Optimization (HGSO) algorithm, called Hybrid HGSO (HHGSO). Unlike its predecessor, HHGSO allows multiple clusters, each serving a different meta-heuristic algorithm (i.e., with its own defined parameters and local best), to coexist within the same population. By exploiting dynamic cluster-to-algorithm mapping via a penalty and reward model with an adaptive switching factor, HHGSO offers a novel approach to meta-heuristic hybridization that combines the Jaya Algorithm, Sooty Tern Optimization Algorithm, Butterfly Optimization Algorithm, and Owl Search Algorithm. Results from two case studies (the team formation problem and combinatorial test suite generation) indicate that the hybridization notably improves the performance of HGSO and outperforms competing meta-heuristic and hyper-heuristic algorithms.

* 31 pages

A Hybrid Q-Learning Sine-Cosine-based Strategy for Addressing the Combinatorial Test Suite Minimization Problem

Apr 27, 2018

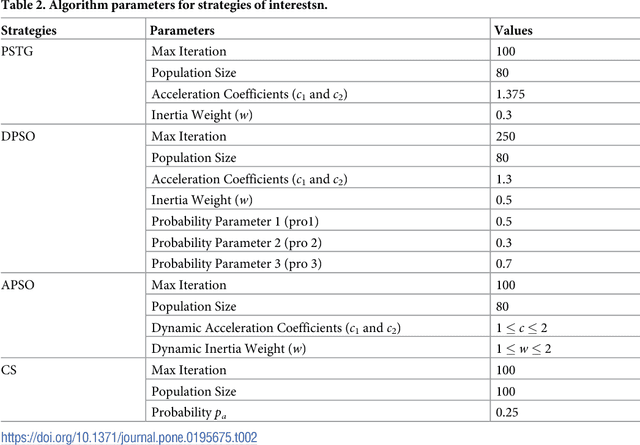

Abstract: The sine-cosine algorithm (SCA) is a new population-based meta-heuristic algorithm. In addition to exploiting sine and cosine functions to perform local and global searches (hence the name sine-cosine), the SCA introduces several random and adaptive parameters to facilitate the search process. Although it shows promising results, the search process of the SCA is vulnerable to local minima/maxima due to the adoption of a fixed switch probability and the bounded magnitude of the sine and cosine functions (from -1 to 1). In this paper, we propose a new hybrid Q-learning sine-cosine-based strategy, called the Q-learning sine-cosine algorithm (QLSCA). Within the QLSCA, we eliminate the switching probability. Instead, we rely on the Q-learning algorithm (based on the penalty and reward mechanism) to dynamically identify the best operation during runtime. Additionally, we integrate two new operations (Lévy flight motion and crossover) into the QLSCA to facilitate jumping out of local minima/maxima and enhance the solution diversity. To assess its performance, we adopt the QLSCA for the combinatorial test suite minimization problem. Experimental results reveal that the QLSCA is statistically superior with regard to test suite size reduction compared to recent state-of-the-art strategies, including the original SCA, the particle swarm test generator (PSTG), adaptive particle swarm optimization (APSO) and the cuckoo search strategy (CS) at the 95% confidence level. However, concerning the comparison with discrete particle swarm optimization (DPSO), there is no significant difference in performance at the 95% confidence level. On a positive note, the QLSCA statistically outperforms the DPSO in certain configurations at the 90% confidence level.
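The idea of replacing a fixed switch probability with Q-learning can be sketched as a small Q-table over the four operations the abstract names. Everything beyond those operation names is an assumption made for this illustration: treating the previously executed operation as the state, the +1/-1 reward values, the learning rate `alpha`, the discount factor `gamma`, and the epsilon-greedy selection are generic Q-learning choices, not details taken from the paper.

```python
import random

# Operation names come from the abstract; all other details are assumed.
OPS = ["sine", "cosine", "levy", "crossover"]


def make_q_table():
    """Q[state][action], where the state is the previously executed operation."""
    return {state: {action: 0.0 for action in OPS} for state in OPS}


def choose_op(q, state, epsilon, rng):
    """Epsilon-greedy choice: explore with probability epsilon, else take the best-valued operation."""
    if rng.random() < epsilon:
        return rng.choice(OPS)
    row = q[state]
    return max(OPS, key=lambda action: row[action])


def update_q(q, state, action, reward, alpha=0.5, gamma=0.9):
    """Standard Q-learning update; the executed action becomes the next state."""
    best_next = max(q[action].values())
    q[state][action] += alpha * (reward + gamma * best_next - q[state][action])


q = make_q_table()
rng = random.Random(0)

# Reward an operation that improved the solution (assumed reward of +1).
update_q(q, "sine", "levy", reward=1.0)
print(choose_op(q, "sine", epsilon=0.0, rng=rng))

# Penalize the same operation when it fails to improve (assumed reward of -1).
update_q(q, "sine", "levy", reward=-1.0)
print(q["sine"]["levy"])
```

In a full search loop, the reward would be derived from whether the chosen operation improved the candidate's fitness, so operations that keep helping from a given state are selected more often over time.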

* 41 pages
