Kaike Ping

Human-Human-AI Triadic Programming: Uncovering the Role of AI Agent and the Value of Human Partner in Collaborative Learning

Jan 17, 2026

Abstract: As AI assistance becomes embedded in programming practice, researchers have increasingly examined how these systems help learners generate code and work more efficiently. However, these studies often position AI as a replacement for human collaboration and overlook the social and learning-oriented aspects that emerge in collaborative programming. Our work introduces human-human-AI (HHAI) triadic programming, where an AI agent serves as an additional collaborator rather than a substitute for a human partner. Through a within-subjects study with 20 participants, we show that triadic collaboration enhances collaborative learning and social presence compared to the dyadic human-AI (HAI) baseline. In the triadic HHAI conditions, participants relied significantly less on AI-generated code in their work. This effect was strongest in the HHAI-shared condition, where participants had an increased sense of responsibility to understand AI suggestions before applying them. These findings demonstrate how triadic settings activate socially shared regulation of learning by making AI use visible and accountable to a human peer, suggesting that AI systems that augment rather than automate peer collaboration can better preserve the learning processes that collaborative programming relies on.

CounterQuill: Investigating the Potential of Human-AI Collaboration in Online Counterspeech Writing

Oct 03, 2024

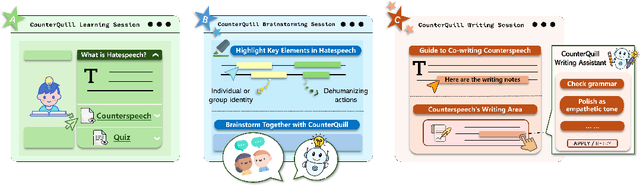

Abstract: Online hate speech has become increasingly prevalent on social media platforms, causing harm to individuals and society. While efforts have been made to combat this issue through content moderation, the potential of user-driven counterspeech as an alternative solution remains underexplored. Existing counterspeech methods often face challenges such as fear of retaliation and skill-related barriers. To address these challenges, we introduce CounterQuill, an AI-mediated system that assists users in composing effective and empathetic counterspeech. CounterQuill provides a three-step process: (1) a learning session to help users understand hate speech and counterspeech; (2) a brainstorming session that guides users in identifying key elements of hate speech and exploring counterspeech strategies; and (3) a co-writing session that enables users to draft and refine their counterspeech with CounterQuill. We conducted a within-subjects user study with 20 participants to evaluate CounterQuill in comparison to ChatGPT. Results show that CounterQuill's guidance and collaborative writing process provided users a stronger sense of ownership over their co-authored counterspeech. Users perceived CounterQuill as a writing partner and thus were more willing to post the co-written counterspeech online compared to the one written with ChatGPT.
