Kaie Kubjas

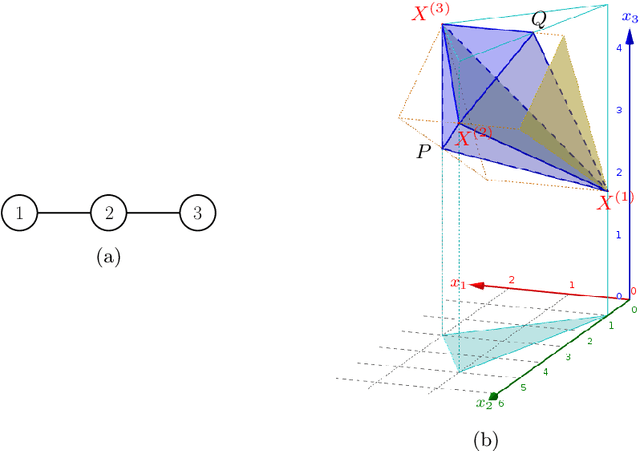

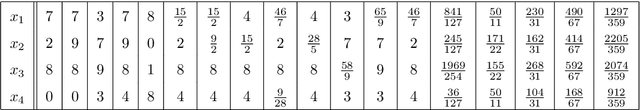

Geometry of Polynomial Neural Networks

Feb 01, 2024

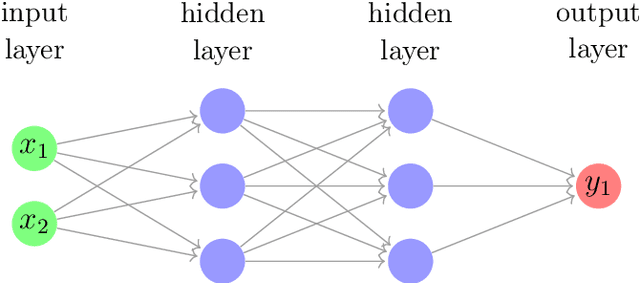

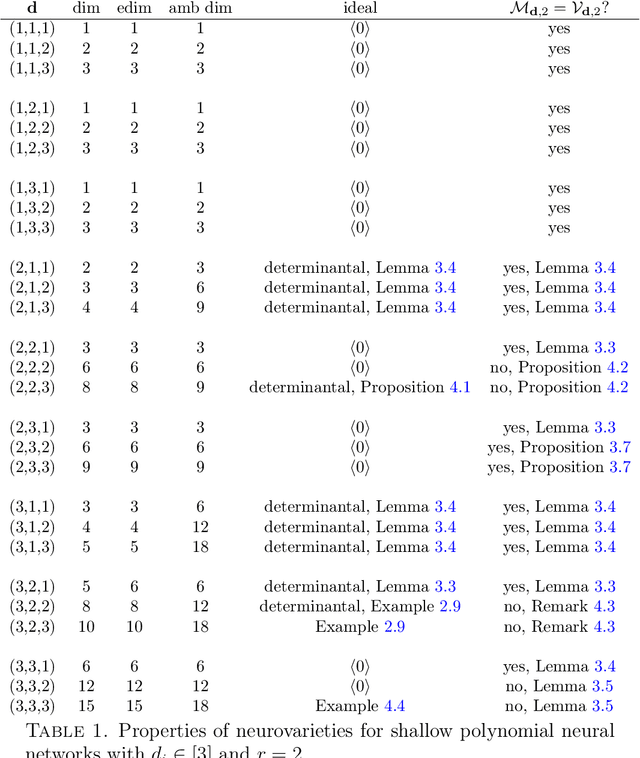

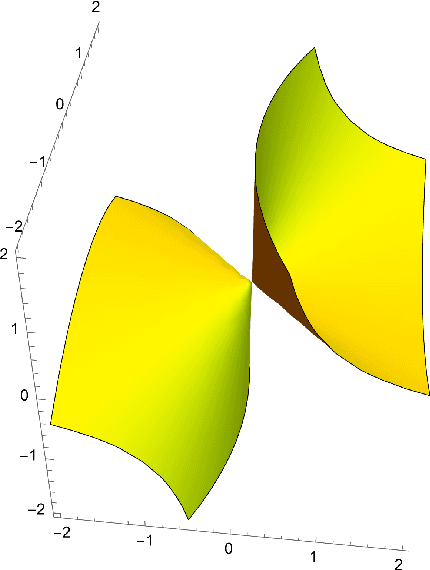

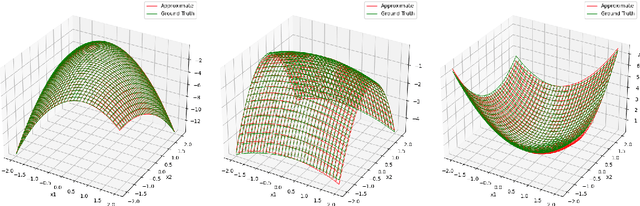

Abstract: We study the expressivity and learning process for polynomial neural networks (PNNs) with monomial activation functions. The weights of the network parametrize the neuromanifold. In this paper, we study certain neuromanifolds using tools from algebraic geometry: we give explicit descriptions as semialgebraic sets and characterize their Zariski closures, called neurovarieties. We study their dimension and associate an algebraic degree, the learning degree, to the neurovariety. The dimension serves as a geometric measure of the expressivity of the network, while the learning degree measures the complexity of training the network and provides upper bounds on the number of learnable functions. These theoretical results are accompanied by experiments.
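To make the setup concrete, here is a minimal sketch of a PNN forward pass, not the authors' code. It assumes the common convention that the monomial activation $x \mapsto x^r$ is applied elementwise after every layer except the last, which the abstract does not spell out. The names `pnn_forward` and `random_weights`, the widths, and the exponent are all hypothetical choices for illustration. Under that convention the output is a homogeneous polynomial of degree $r^{L-1}$ in the input, where $L$ is the number of layers, and the sketch checks this numerically.

```python
import random


def pnn_forward(weights, x, r):
    """Evaluate a PNN: each layer is a linear map followed by the elementwise
    power r, except the last layer, which is linear (assumed convention)."""
    h = list(x)
    for i, W in enumerate(weights):
        # Linear map: one output coordinate per row of W.
        h = [sum(w * v for w, v in zip(row, h)) for row in W]
        if i < len(weights) - 1:
            # Monomial activation on hidden layers only.
            h = [v ** r for v in h]
    return h


def random_weights(widths, seed=0):
    """Draw one weight matrix per layer with entries uniform in [-1, 1]."""
    rng = random.Random(seed)
    return [
        [[rng.uniform(-1, 1) for _ in range(widths[k])] for _ in range(widths[k + 1])]
        for k in range(len(widths) - 1)
    ]


widths = (2, 3, 1)  # hypothetical architecture: 2 inputs, 3 hidden units, 1 output
r = 2               # hypothetical activation exponent
weights = random_weights(widths)

x = [0.5, -1.25]
t = 3.0
degree = r ** (len(weights) - 1)

y = pnn_forward(weights, x, r)
y_scaled = pnn_forward(weights, [t * v for v in x], r)

# Homogeneity: scaling the input by t scales the output by t**degree.
print(abs(y_scaled[0] - t ** degree * y[0]) < 1e-9)
```

The weights here play the role of the parameters that trace out the neuromanifold: each choice of `weights` yields one polynomial in the family.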

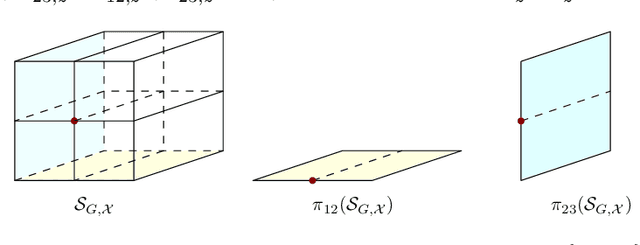

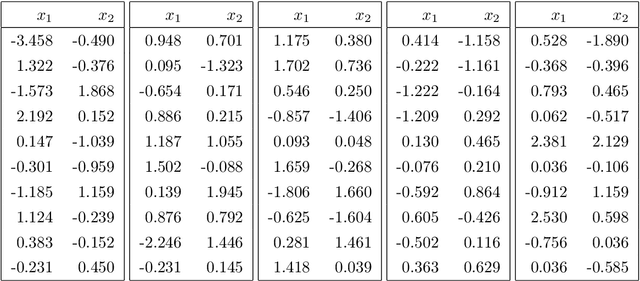

Log-concave density estimation in undirected graphical models

Jun 10, 2022

Abstract: We study the problem of maximum likelihood estimation of densities that are log-concave and lie in the graphical model corresponding to a given undirected graph $G$. We show that the maximum likelihood estimate (MLE) is the product of the exponentials of several tent functions, one for each maximal clique of $G$. While the set of log-concave densities in a graphical model is infinite-dimensional, our results imply that the MLE can be found by solving a finite-dimensional convex optimization problem. We provide an implementation and a few examples. Furthermore, we show that when $G$ is chordal, the MLE exists and is unique with probability 1 as long as the number of sample points is larger than the size of the largest clique of $G$. We show that the MLE is consistent when the graph $G$ is a disjoint union of cliques. Finally, we discuss the conditions under which a log-concave density in the graphical model of $G$ has a log-concave factorization according to $G$.
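As an illustration of the building block named above, the following sketch evaluates a tent function in one dimension. It relies on the standard definition, the least concave function $h$ with $h(x_i) \ge y_i$ at the given points, and computes it from the upper convex hull of the points $(x_i, y_i)$. This is not the paper's implementation and does not solve the MLE problem: the heights $y_i$ are made-up inputs, and `upper_hull` and `tent` are hypothetical helper names.

```python
import math


def upper_hull(points):
    """Upper convex hull of planar points, returned left to right."""
    # Keep only the highest point at each x-coordinate.
    best = {}
    for x, y in points:
        best[x] = max(y, best.get(x, -math.inf))
    hull = []
    for p in sorted(best.items()):
        # Drop the previous vertex while it lies on or below the chord
        # from the vertex before it to the new point p.
        while len(hull) >= 2:
            (x1, y1), (x2, y2) = hull[-2], hull[-1]
            cross = (x2 - x1) * (p[1] - y1) - (y2 - y1) * (p[0] - x1)
            if cross >= 0:
                hull.pop()
            else:
                break
        hull.append(p)
    return hull


def tent(points, x):
    """Least concave function lying above the points, evaluated at x.
    It equals -inf outside the range spanned by the x-coordinates."""
    hull = upper_hull(points)
    if x < hull[0][0] or x > hull[-1][0]:
        return -math.inf
    for (x1, y1), (x2, y2) in zip(hull, hull[1:]):
        if x1 <= x <= x2:
            # Linear interpolation along the hull edge containing x.
            return y1 + (y2 - y1) * (x - x1) / (x2 - x1)
    return hull[0][1]  # single-vertex hull: x coincides with that vertex


# Made-up sample locations and heights, for illustration only.
pts = [(0.0, 0.0), (1.0, 2.0), (2.0, 1.0), (3.0, 3.0)]
print(tent(pts, 2.0))   # the point (2, 1) lies below the hull edge
print(tent(pts, -1.0))  # outside the convex hull of the sample locations
```

In the setting of the abstract, one such function would be attached to each maximal clique of $G$, with its heights determined by the finite-dimensional convex optimization problem.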
