Junying Zhang

BeniFul: Backdoor Defense via Middle Feature Analysis for Deep Neural Networks

Oct 15, 2024

Abstract: Backdoor defenses have recently become important for resisting backdoor attacks on deep neural networks (DNNs), in which attackers implant backdoors into a DNN model by injecting backdoor samples into the training dataset. Although many defense methods achieve backdoor detection for DNN inputs and backdoor elimination for DNN models, they have not clearly explained the relationship between these two tasks. In this paper, we use features from the middle layer of the DNN model to analyze the difference between backdoor and benign samples, and we propose Backdoor Consistency, which states that at least one backdoor exists in the DNN model if a backdoor trigger is correctly detected in an input. By analyzing the middle features, we design an effective and comprehensive backdoor defense method named BeniFul, which consists of two parts: gray-box backdoor input detection and white-box backdoor elimination. Specifically, we use the reconstruction distance from a Variational Auto-Encoder together with model inference results to implement backdoor input detection, and a feature distance loss to achieve backdoor elimination. Experimental results on CIFAR-10 and Tiny ImageNet against five state-of-the-art attacks demonstrate that BeniFul achieves strong defense capability in both backdoor input detection and backdoor elimination.

Adaptive Affinity Propagation Clustering

May 08, 2008

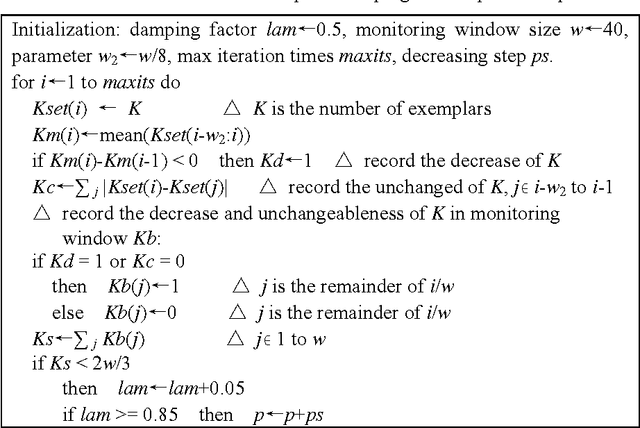

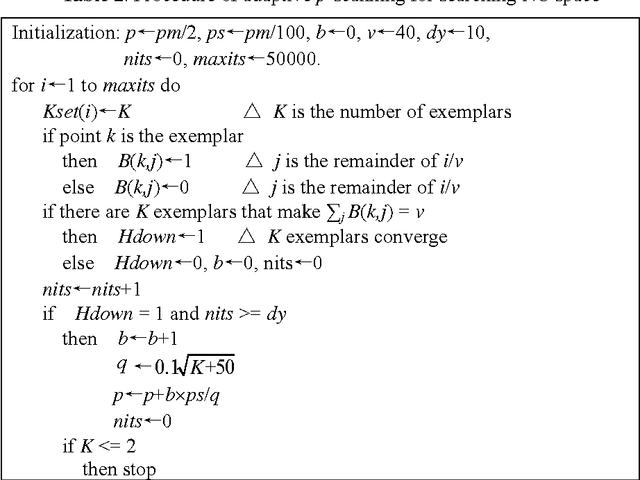

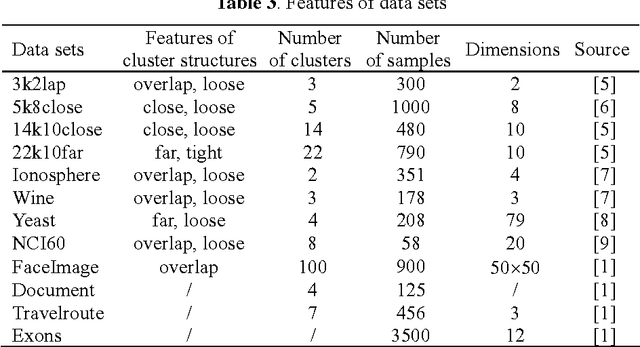

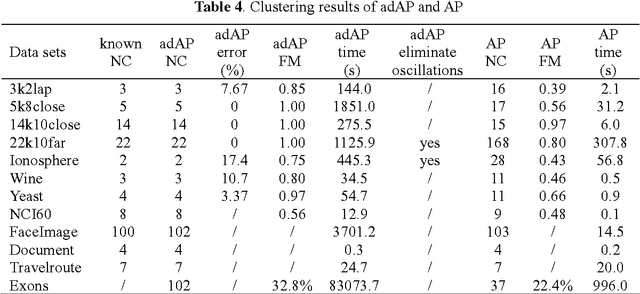

Abstract: Affinity propagation clustering (AP) has two limitations: it is hard to know what value of the parameter 'preference' yields an optimal clustering solution, and oscillations cannot be eliminated automatically if they occur. The adaptive AP method is proposed to overcome these limitations. It includes adaptive scanning of preferences to search the space of the number of clusters for the optimal clustering solution, adaptive adjustment of damping factors to eliminate oscillations, and adaptive escaping from oscillations when the damping adjustment technique fails. Experimental results on simulated and real data sets show that adaptive AP is effective and can outperform AP in the quality of clustering results.

* An English version of the original paper
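
The two ideas named in the abstract above, scanning the preference and raising the damping factor when message passing oscillates, can be illustrated with a small pure-Python sketch. This is not the authors' implementation: the function names (`affinity_propagation`, `scan_preferences`), the preference grid (multiples of the median similarity), the damping schedule (0.5, 0.7, 0.9), and the convergence test are all assumptions chosen for illustration. The escape step is not modeled beyond moving on to the next preference, and choosing the best candidate would still need a cluster-validity index, which the abstract does not specify.

```python
def affinity_propagation(S, damping, max_iter=200, stable_iter=15):
    """Standard AP message passing on similarity matrix S (diagonal = preference).

    Returns (exemplar indices, converged flag). Convergence here means the
    exemplar set stayed unchanged for `stable_iter` iterations (an assumption).
    """
    n = len(S)
    R = [[0.0] * n for _ in range(n)]  # responsibilities
    A = [[0.0] * n for _ in range(n)]  # availabilities
    exemplars, last, stable = [], None, 0
    for _ in range(max_iter):
        # r(i,k) = s(i,k) - max over k' != k of (a(i,k') + s(i,k')), damped
        for i in range(n):
            vals = [A[i][k] + S[i][k] for k in range(n)]
            first = max(range(n), key=vals.__getitem__)
            second = max(v for k, v in enumerate(vals) if k != first)
            for k in range(n):
                best_other = second if k == first else vals[first]
                R[i][k] = damping * R[i][k] + (1 - damping) * (S[i][k] - best_other)
        # a(i,k) = min(0, r(k,k) + sum of positive r(i',k), i' not in {i,k}), damped
        # a(k,k) = sum of positive r(i',k), i' != k
        for k in range(n):
            pos = [max(0.0, R[i][k]) for i in range(n)]
            total = sum(pos) - pos[k]
            for i in range(n):
                new = total if i == k else min(0.0, R[k][k] + total - pos[i])
                A[i][k] = damping * A[i][k] + (1 - damping) * new
        exemplars = [k for k in range(n) if R[k][k] + A[k][k] > 0]
        if exemplars and exemplars == last:
            stable += 1
            if stable >= stable_iter:
                return exemplars, True
        else:
            last, stable = exemplars, 0
    return exemplars, False


def scan_preferences(points, steps=5, dampings=(0.5, 0.7, 0.9)):
    """Run AP over a grid of preferences, raising damping when a run fails to settle.

    Returns a list of (preference, damping, converged, exemplars, labels).
    """
    n = len(points)
    # Similarity = negative squared Euclidean distance.
    base = [[-sum((a - b) ** 2 for a, b in zip(p, q)) for q in points] for p in points]
    off_diag = sorted(base[i][k] for i in range(n) for k in range(n) if i != k)
    median = off_diag[len(off_diag) // 2]
    results = []
    for step in range(1, steps + 1):
        pref = median * step  # hypothetical grid: increasingly negative preferences
        S = [row[:] for row in base]
        for k in range(n):
            S[k][k] = pref
        for damping in dampings:  # try heavier damping only if needed
            exemplars, converged = affinity_propagation(S, damping)
            if converged:
                break
        if exemplars:
            # Exemplars label themselves; other points join the most similar exemplar.
            labels = [i if i in exemplars else max(exemplars, key=lambda k: S[i][k])
                      for i in range(n)]
            results.append((pref, damping, converged, exemplars, labels))
    return results


if __name__ == "__main__":
    data = [(0.0, 0.0), (0.0, 1.0), (1.0, 0.0), (10.0, 10.0), (10.0, 11.0), (11.0, 10.0)]
    for pref, damping, converged, exemplars, labels in scan_preferences(data):
        print(pref, damping, converged, len(exemplars), labels)
```

Each returned tuple is one candidate clustering; lower (more negative) preferences generally favor fewer exemplars, which is why scanning the preference explores different numbers of clusters.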
