Junya Sato

Large Batch and Patch Size Training for Medical Image Segmentation

Oct 24, 2022

Abstract: Multi-organ segmentation enables organ evaluation, accounts for the relationships between multiple organs, and facilitates accurate diagnosis and treatment decisions. However, only a few models can perform segmentation accurately because of the lack of datasets and computational resources. On the AMOS2022 challenge, a large-scale, clinical, and diverse abdominal multi-organ segmentation benchmark, we trained a 3D-UNet model with large batch and patch sizes using multi-GPU distributed training. Segmentation performance tended to increase for models with large batch and patch sizes compared with the baseline settings. The accuracy was further improved by using ensemble models that were trained with different settings. These results provide a reference for parameter selection in organ segmentation.
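The abstract does not say how the ensemble combines its member models. One common choice, shown here only as a minimal sketch and not as the author's method, is to average the per-voxel class probabilities of each model and take the arg-max. The function name `ensemble_segmentation` and the `(num_classes, D, H, W)` array layout are assumptions made for illustration.

```python
import numpy as np


def ensemble_segmentation(prob_maps):
    """Combine several models by averaging their class probabilities.

    prob_maps: list of arrays, each shaped (num_classes, D, H, W), holding
    the per-voxel class probabilities predicted by one model (assumed layout).
    Returns an integer label volume shaped (D, H, W).
    """
    stacked = np.stack(prob_maps, axis=0)   # (num_models, C, D, H, W)
    mean_probs = stacked.mean(axis=0)       # average over models
    return mean_probs.argmax(axis=0)        # most probable class per voxel


# Toy example: two models, two classes, a 1 x 1 x 2 volume.
# The models disagree on the second voxel; the average decides.
model_a = np.array([[[[0.9, 0.55]]], [[[0.1, 0.45]]]])
model_b = np.array([[[[0.6, 0.20]]], [[[0.4, 0.80]]]])
labels = ensemble_segmentation([model_a, model_b])
```

Averaging probabilities rather than voting on hard labels lets a confident model outweigh an uncertain one, which is why the second voxel above ends up in class 1.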

Anatomy-aware Self-supervised Learning for Anomaly Detection in Chest Radiographs

May 09, 2022

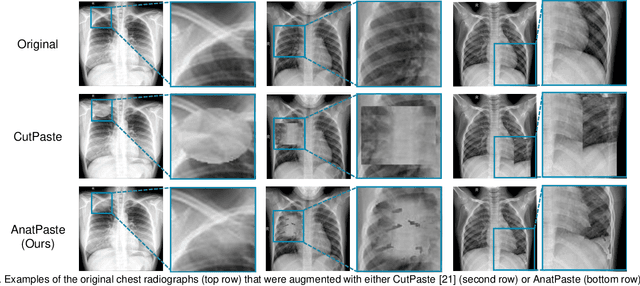

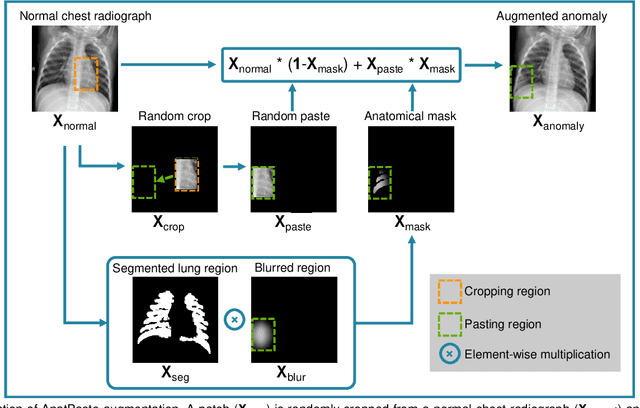

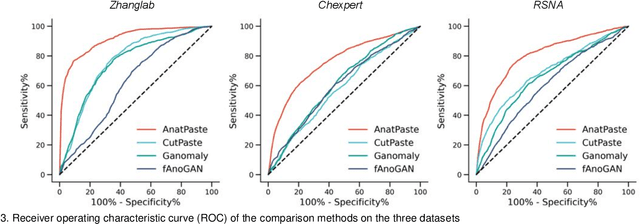

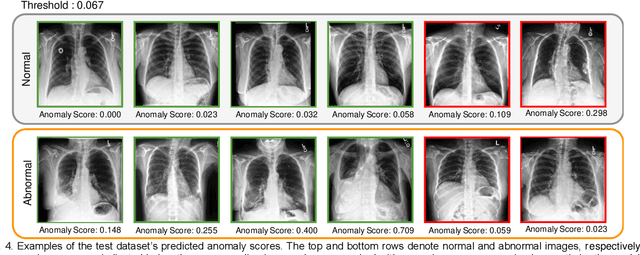

Abstract: Large numbers of labeled medical images are essential for the accurate detection of anomalies, but manual annotation is labor-intensive and time-consuming. Self-supervised learning (SSL) is a training method to learn data-specific features without manual annotation. Several SSL-based models have been employed in medical image anomaly detection. These SSL methods effectively learn representations in several field-specific images, such as natural and industrial product images. However, owing to the requirement of medical expertise, typical SSL-based models are inefficient in medical image anomaly detection. We present an SSL-based model that enables anatomical structure-based unsupervised anomaly detection (UAD). The model employs the anatomy-aware pasting (AnatPaste) augmentation tool. AnatPaste employs a threshold-based lung segmentation pretext task to create anomalies in normal chest radiographs, which are used for model pretraining. These anomalies are similar to real anomalies and help the model recognize them. We evaluate our model on three open-source chest radiograph datasets. Our model achieves areas under the curve (AUCs) of 92.1%, 78.7%, and 81.9%, which are the highest among existing UAD models. This is the first SSL model to employ anatomical information as a pretext task. AnatPaste can be applied in various deep learning models and downstream tasks. It can be employed for other modalities by choosing an appropriate segmentation method. Our code is publicly available at: https://github.com/jun-sato/AnatPaste.
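The general idea of pasting a synthetic anomaly inside a thresholded lung region can be sketched as follows. This is a simplified illustration under stated assumptions, not the AnatPaste implementation from the linked repository: the function names, the threshold value, the patch size, and the assumption that lung fields are darker than surrounding tissue in a radiograph normalized to [0, 1] are all hypothetical.

```python
import numpy as np


def threshold_lung_mask(image, threshold=0.4):
    """Rough lung mask: pixels darker than `threshold` (assumed heuristic)."""
    return image < threshold


def anatomy_aware_paste(image, mask, patch_size=8, rng=None):
    """Copy a random patch of `image` into a location inside `mask`.

    Only pixels that fall inside the mask are overwritten, so the synthetic
    anomaly stays within the segmented region. Returns the augmented image
    and a boolean map of the pixels that were pasted over.
    """
    rng = np.random.default_rng() if rng is None else rng
    h, w = image.shape
    out = image.copy()
    pasted = np.zeros_like(mask, dtype=bool)

    ys, xs = np.nonzero(mask)
    if ys.size == 0:                       # nothing segmented: no change
        return out, pasted

    # Source patch: anywhere in the original image.
    sy = int(rng.integers(0, h - patch_size + 1))
    sx = int(rng.integers(0, w - patch_size + 1))
    patch = image[sy:sy + patch_size, sx:sx + patch_size]

    # Destination: roughly centred on a random pixel inside the mask.
    i = int(rng.integers(0, ys.size))
    ty = int(np.clip(ys[i] - patch_size // 2, 0, h - patch_size))
    tx = int(np.clip(xs[i] - patch_size // 2, 0, w - patch_size))

    region = mask[ty:ty + patch_size, tx:tx + patch_size]
    target = out[ty:ty + patch_size, tx:tx + patch_size]  # view into `out`
    target[region] = patch[region]         # overwrite masked pixels only
    pasted[ty:ty + patch_size, tx:tx + patch_size] = region
    return out, pasted
```

In a pretext task of this kind, a classifier would then be trained to tell the augmented images from the untouched ones; that training step is omitted here.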
