Junxing Zhang

DEPT: Deep Extreme Point Tracing for Ultrasound Image Segmentation

Mar 19, 2025

Abstract: Automatic medical image segmentation plays a crucial role in computer-aided diagnosis. However, fully supervised learning approaches often require extensive and labor-intensive annotation efforts. To address this challenge, weakly supervised learning methods, particularly those using extreme points as supervisory signals, have the potential to offer an effective solution. In this paper, we introduce Deep Extreme Point Tracing (DEPT), integrated with the Feature-Guided Extreme Point Masking (FGEPM) algorithm, for ultrasound image segmentation. Notably, our method generates pseudo labels by identifying the lowest-cost path that connects all extreme points on a cost matrix derived from the feature map. Additionally, an iterative training strategy is proposed to refine the pseudo labels progressively, enabling continuous network improvement. Experimental results on two public datasets demonstrate the effectiveness of our proposed method. Its performance approaches that of the fully supervised method and exceeds that of several existing weakly supervised methods.
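The abstract does not spell out how the lowest-cost path is computed, so the following is only a minimal sketch of the general idea, assuming a 4-connected grid, non-negative cell costs, and Dijkstra's algorithm. The names `lowest_cost_path` and `trace_contour` are hypothetical and are not taken from the paper.

```python
import heapq

def lowest_cost_path(cost, start, goal):
    """Dijkstra on a 4-connected grid; a path's cost is the sum of the cells it enters.

    Assumes all cell costs are non-negative.
    """
    rows, cols = len(cost), len(cost[0])
    dist = {start: 0.0}
    prev = {}
    heap = [(0.0, start)]
    while heap:
        d, node = heapq.heappop(heap)
        if node == goal:
            break
        if d > dist.get(node, float("inf")):
            continue  # stale heap entry
        r, c = node
        for nr, nc in ((r - 1, c), (r + 1, c), (r, c - 1), (r, c + 1)):
            if 0 <= nr < rows and 0 <= nc < cols:
                nd = d + cost[nr][nc]
                if nd < dist.get((nr, nc), float("inf")):
                    dist[(nr, nc)] = nd
                    prev[(nr, nc)] = node
                    heapq.heappush(heap, (nd, (nr, nc)))
    # Walk back from the goal to recover the path.
    path = [goal]
    while path[-1] != start:
        path.append(prev[path[-1]])
    return path[::-1]

def trace_contour(cost, extreme_points):
    """Join consecutive extreme points (given in order) into one closed contour."""
    contour = []
    n = len(extreme_points)
    for i in range(n):
        segment = lowest_cost_path(cost, extreme_points[i], extreme_points[(i + 1) % n])
        contour.extend(segment[:-1])  # drop the endpoint; the next segment starts there
    return contour
```

In this sketch the cost matrix is a plain list of lists. In the setting described above it would be derived from network feature maps, so that cheap cells follow the object boundary; filling the closed contour would then give a pseudo label.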

STM-UNet: An Efficient U-shaped Architecture Based on Swin Transformer and Multi-scale MLP for Medical Image Segmentation

Apr 25, 2023

Abstract: Automated medical image segmentation can help doctors diagnose faster and more accurately. Deep learning based models for medical image segmentation have made great progress in recent years. However, existing models fail to leverage Transformers and MLPs effectively to improve the U-shaped architecture efficiently. In addition, the multi-scale features of the MLP have not been fully extracted in the bottleneck of the U-shaped architecture. In this paper, we propose an efficient U-shaped architecture based on Swin Transformer and multi-scale MLP, namely STM-UNet. Specifically, the Swin Transformer block is added to the skip connections of STM-UNet in the form of a residual connection, which enhances the modeling of global features and long-range dependencies. Meanwhile, a novel PCAS-MLP with a parallel convolution module is designed and placed in the bottleneck of our architecture to improve segmentation performance. The experimental results on ISIC 2016 and ISIC 2018 demonstrate the effectiveness of our proposed method. Our method also outperforms several state-of-the-art methods in terms of IoU and Dice, and achieves a better trade-off between high segmentation accuracy and low model complexity.
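For readers unfamiliar with the two metrics named above, here is a minimal sketch of IoU and Dice for binary masks. It assumes masks are flattened sequences of 0/1 values, and the convention of returning 1.0 when both masks are empty is an assumption of this sketch. The function name `iou_and_dice` is hypothetical.

```python
def iou_and_dice(pred, target):
    """Compute IoU and Dice for two flattened binary masks of equal length."""
    if len(pred) != len(target):
        raise ValueError("masks must have the same number of pixels")
    intersection = sum(1 for p, t in zip(pred, target) if p and t)
    pred_area = sum(1 for p in pred if p)
    target_area = sum(1 for t in target if t)
    union = pred_area + target_area - intersection
    if union == 0:
        # Both masks are empty: treated as perfect agreement (an assumed convention).
        return 1.0, 1.0
    iou = intersection / union
    dice = 2 * intersection / (pred_area + target_area)
    return iou, dice
```

The two scores are monotonically related (Dice = 2·IoU / (1 + IoU)), so they rank a pair of predictions on the same image identically, although averages over a dataset can still differ.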

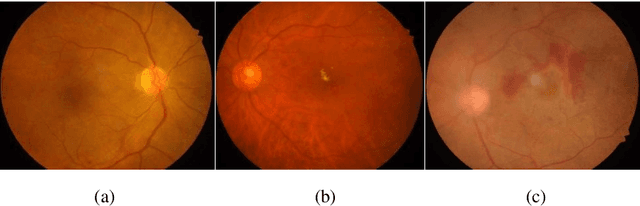

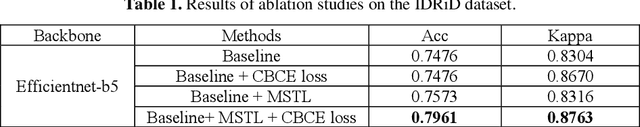

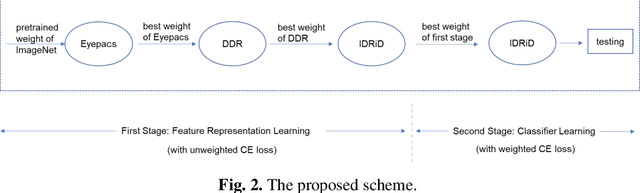

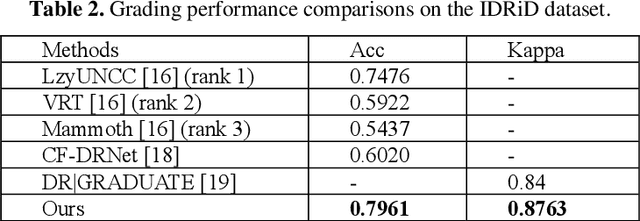

Few-shot Learning Based on Multi-stage Transfer and Class-Balanced Loss for Diabetic Retinopathy Grading

Sep 24, 2021

Abstract: Diabetic retinopathy (DR) is one of the major blindness-causing diseases currently known. Automatic grading of DR using deep learning methods not only speeds up the diagnosis of the disease but also reduces the rate of misdiagnosis. However, problems such as insufficient samples and imbalanced class distribution in DR datasets have constrained the improvement of grading performance. In this paper, we introduce the idea of multi-stage transfer into the grading task of DR. The new transfer learning technique leverages multiple datasets with different scales to enable the model to learn more feature representation information. Meanwhile, to cope with imbalanced DR datasets, we present a class-balanced loss function that performs well in natural image classification tasks, and adopt a simple and easy-to-implement training method for it. The experimental results show that the application of multi-stage transfer and class-balanced loss function can effectively improve the grading performance metrics such as accuracy and quadratic weighted kappa. In fact, our method has outperformed two state-of-the-art methods and achieved the best result on the DR grading task of IDRiD Sub-Challenge 2.
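Quadratic weighted kappa, one of the metrics mentioned above, penalizes a grading error by the squared distance between the predicted and true grade. The following is a minimal sketch of the standard definition, assuming integer grades in `0..num_classes-1`; the function name is hypothetical and this is not the authors' evaluation code.

```python
def quadratic_weighted_kappa(y_true, y_pred, num_classes):
    """Standard quadratic weighted kappa for integer labels in 0..num_classes-1.

    Undefined (division by zero) when the expected disagreement is zero,
    e.g. when every label in both lists is the same class.
    """
    k = num_classes
    n = len(y_true)
    # Confusion matrix: observed[i][j] counts items with true grade i, predicted grade j.
    observed = [[0] * k for _ in range(k)]
    for t, p in zip(y_true, y_pred):
        observed[t][p] += 1
    hist_true = [sum(row) for row in observed]
    hist_pred = [sum(observed[i][j] for i in range(k)) for j in range(k)]
    numerator = 0.0
    denominator = 0.0
    for i in range(k):
        for j in range(k):
            weight = (i - j) ** 2 / (k - 1) ** 2
            numerator += weight * observed[i][j]
            # Expected count under independence of the two marginal distributions.
            denominator += weight * hist_true[i] * hist_pred[j] / n
    return 1.0 - numerator / denominator
```

A score of 1 means perfect agreement and 0 means agreement no better than chance. Because the weight grows quadratically, predicting grade 4 for a grade 0 image costs far more than predicting grade 1.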
