Juncheng Shen

Towards Efficient and Secure Delivery of Data for Deep Learning with Privacy-Preserving

Sep 17, 2019

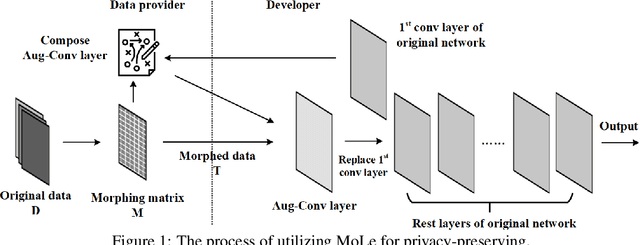

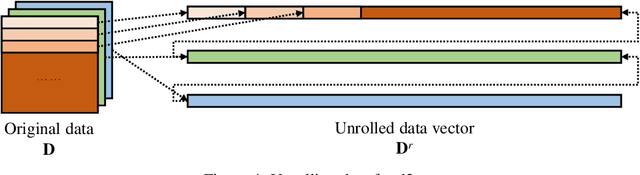

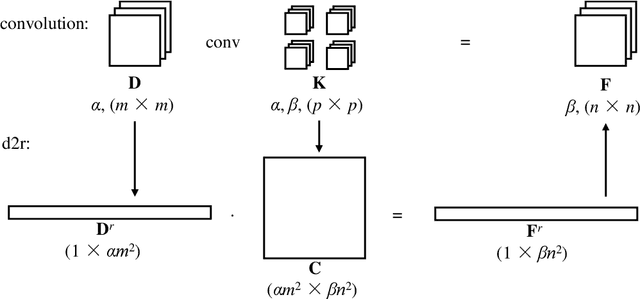

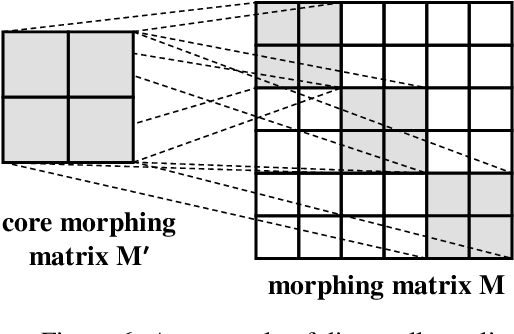

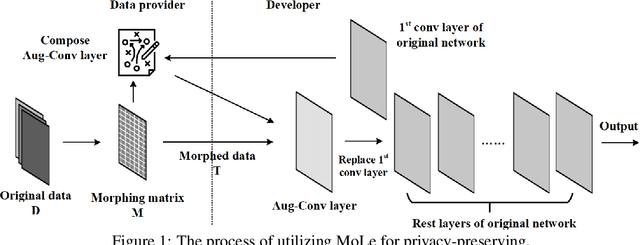

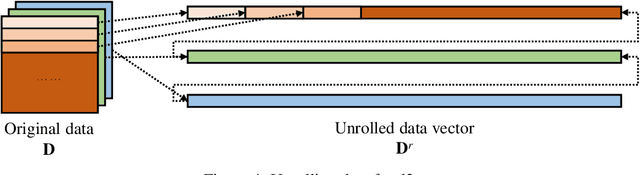

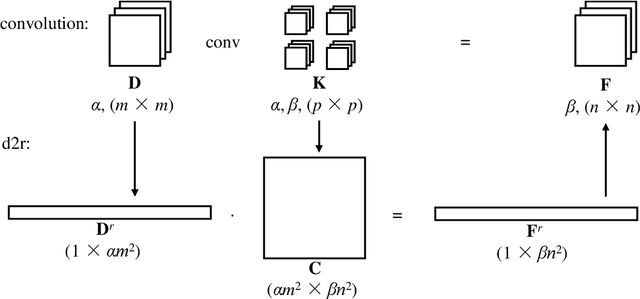

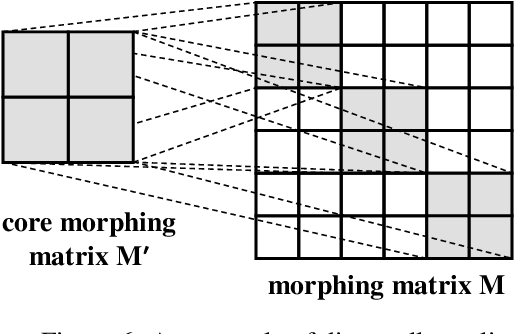

Abstract: Privacy has recently emerged as a serious concern in deep learning: sensitive data must not be shared with third parties during deep neural network development. In this paper, we propose Morphed Learning (MoLe), an efficient and secure scheme for delivering deep learning data. MoLe has two main components: data morphing and the Augmented Convolutional (Aug-Conv) layer. Data morphing allows data providers to send morphed data that carries no private information, while the Aug-Conv layer lets deep learning developers apply their networks to the morphed data without a performance penalty. MoLe provides stronger security with lower overhead than GAZELLE (USENIX Security 2018), another method that imposes no performance penalty on the neural network. When MoLe is used with the VGG-16 network on the CIFAR dataset, the computational overhead is only 9% and the data transmission overhead is 5.12%. By comparison, GAZELLE incurs a computational overhead of 10,000 times and a data transmission overhead of 421,000 times. In this setting, the adversary's attack success rate is 7.9 × 10^{-90} for MoLe and 2.9 × 10^{-30} for GAZELLE.
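The abstract does not spell out how morphing works, so the following is only a minimal sketch of the general idea under stated assumptions: morphing is modeled as multiplication of the flattened input by a secret invertible matrix `M`, and the augmented layer is modeled as the first linear layer `W` with `M^{-1}` folded in. The helpers `matmul`, `inverse`, and `random_invertible`, the tiny dimensions, and the integer value ranges are all hypothetical choices for illustration, not the paper's construction. The point is only that a layer of this kind yields the same output on morphed data as the original layer does on raw data.

```python
from fractions import Fraction
import random


def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def inverse(m):
    """Invert a square matrix exactly with Gauss-Jordan elimination.

    Raises ValueError if the matrix is singular.
    """
    n = len(m)
    # Augment m with the identity matrix: [m | I].
    aug = [[Fraction(v) for v in row] + [Fraction(int(i == j)) for j in range(n)]
           for i, row in enumerate(m)]
    for col in range(n):
        pivot = next((r for r in range(col, n) if aug[r][col] != 0), None)
        if pivot is None:
            raise ValueError("matrix is singular")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        scale = aug[col][col]
        aug[col] = [v / scale for v in aug[col]]
        for r in range(n):
            if r != col and aug[r][col] != 0:
                factor = aug[r][col]
                aug[r] = [v - factor * p for v, p in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]


def random_invertible(n, rng):
    """Draw random integer matrices until one is invertible (hypothetical key generation)."""
    while True:
        m = [[rng.randint(-5, 5) for _ in range(n)] for _ in range(n)]
        try:
            inverse(m)
            return m
        except ValueError:
            continue


rng = random.Random(0)
n_in, n_out = 4, 3  # toy sizes, chosen only for illustration

# Data provider side: secret morphing matrix M and two flattened samples X (one per row).
M = random_invertible(n_in, rng)
X = [[Fraction(rng.randint(0, 255)) for _ in range(n_in)] for _ in range(2)]
X_morphed = matmul(X, M)  # this is what would leave the provider

# Developer side: first linear layer W (a convolution is also linear in the flattened input).
W = [[Fraction(rng.randint(-3, 3)) for _ in range(n_out)] for _ in range(n_in)]

# Augmented layer (assumed form): M^{-1} folded into W by the party that holds M.
W_aug = matmul(inverse(M), W)

original_out = matmul(X, W)
morphed_out = matmul(X_morphed, W_aug)
outputs_match = original_out == morphed_out  # exact, because Fractions avoid rounding
```

Exact rational arithmetic is used here so that the equality check is not disturbed by floating-point rounding; a practical implementation would work with floating-point tensors and compare outputs within a tolerance.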

Morphed Learning: Towards Privacy-Preserving for Deep Learning Based Applications

Sep 20, 2018

Abstract: Concern over potential privacy violations has prevented the efficient use of big data for improving deep learning based applications. In this paper, we propose Morphed Learning, a privacy-preserving technique for deep learning based on data morphing that allows data owners to share their data without leaking sensitive private information. Morphed Learning allows data owners to send securely morphed data and provides the server with an Augmented Convolutional layer so that the network can be trained on morphed data without performance loss. Morphed Learning has three features: (1) strong protection against reverse-engineering of the morphed data; (2) acceptable computational and data transmission overhead that does not depend on the depth of the neural network; (3) no degradation of neural network performance. Theoretical analyses on the CIFAR-10 dataset and the VGG-16 network show that our method can provide 10^89 morphing possibilities with only 5% computational overhead and 10% transmission overhead under a limited-knowledge attack scenario. Further analyses also proved that our method can offer the same resilience against a full-knowledge attack if more resources are provided.
