Julia Kimbell

$\texttt{LucidAtlas}$: Learning Uncertainty-Aware, Covariate-Disentangled, Individualized Atlas Representations

Feb 12, 2025

Abstract:The goal of this work is to develop principled techniques to extract information from high dimensional data sets with complex dependencies in areas such as medicine that can provide insight into individual as well as population level variation. We develop $\texttt{LucidAtlas}$, an approach that can represent spatially varying information, and can capture the influence of covariates as well as population uncertainty. As a versatile atlas representation, $\texttt{LucidAtlas}$ offers robust capabilities for covariate interpretation, individualized prediction, population trend analysis, and uncertainty estimation, with the flexibility to incorporate prior knowledge. Additionally, we discuss the trustworthiness and potential risks of neural additive models for analyzing dependent covariates and then introduce a marginalization approach to explain the dependence of an individual predictor on the models' response (the atlas). To validate our method, we demonstrate its generalizability on two medical datasets. Our findings underscore the critical role of by-construction interpretable models in advancing scientific discovery. Our code will be publicly available upon acceptance.

NeuralOCT: Airway OCT Analysis via Neural Fields

Mar 15, 2024

Abstract:Optical coherence tomography (OCT) is a popular modality in ophthalmology and is also used intravascularly. Our interest in this work is OCT in the context of airway abnormalities in infants and children, where the high resolution of OCT and the fact that it is radiation-free are important. The goal of airway OCT is to provide accurate estimates of airway geometry (in 2D and 3D) to assess airway abnormalities such as subglottic stenosis. We propose $\texttt{NeuralOCT}$, a learning-based approach to process airway OCT images. Specifically, $\texttt{NeuralOCT}$ extracts 3D geometries from OCT scans by robustly bridging two steps: point cloud extraction via 2D segmentation and 3D reconstruction from point clouds via neural fields. Our experiments show that $\texttt{NeuralOCT}$ produces accurate and robust 3D airway reconstructions with an average A-line error smaller than 70 micrometers. Our code will be available on GitHub.

NAISR: A 3D Neural Additive Model for Interpretable Shape Representation

Mar 28, 2023

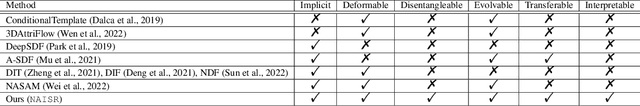

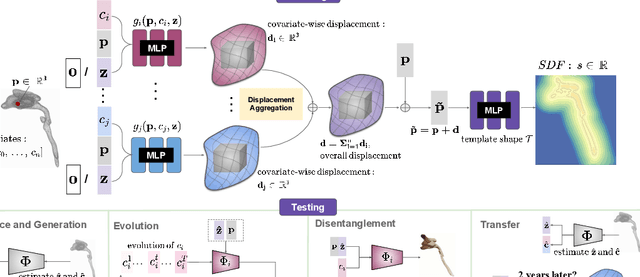

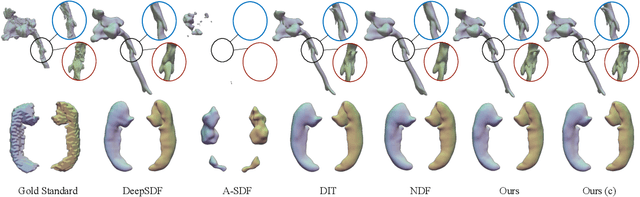

Abstract:Deep implicit functions (DIFs) have emerged as a powerful paradigm for many computer vision tasks such as 3D shape reconstruction, generation, registration, completion, editing, and understanding. However, given a set of 3D shapes with associated covariates, there is at present no shape representation method that can represent the shapes precisely while capturing the individual dependencies on each covariate. Such a method would be of high utility to researchers seeking to discover knowledge hidden in a population of shapes. We propose a 3D Neural Additive Model for Interpretable Shape Representation (NAISR), which describes individual shapes by deforming a shape atlas in accordance with the effect of disentangled covariates. Our approach captures shape population trends and allows for patient-specific predictions through shape transfer. NAISR is the first approach to combine the benefits of deep implicit shape representations with an atlas deforming according to specified covariates. Although our driving problem is the construction of an airway atlas, NAISR is a general approach for modeling, representing, and investigating shape populations. We evaluate NAISR with respect to shape reconstruction, shape disentanglement, shape evolution, and shape transfer for the pediatric upper airway. Our experiments demonstrate that NAISR achieves competitive shape reconstruction performance while retaining interpretability.
