Joshua Peeples

LGDWT-GS: Local and Global Discrete Wavelet-Regularized 3D Gaussian Splatting for Sparse-View Scene Reconstruction

Jan 23, 2026

Abstract: We propose a new method for few-shot 3D reconstruction that integrates global and local frequency regularization to stabilize geometry and preserve fine details under sparse-view conditions, addressing a key limitation of existing 3D Gaussian Splatting (3DGS) models. We also introduce a new multispectral greenhouse dataset containing four spectral bands captured from diverse plant species under controlled conditions. Alongside the dataset, we release an open-source benchmarking package that defines standardized few-shot reconstruction protocols for evaluating 3DGS-based methods. Experiments on our multispectral dataset, as well as standard benchmarks, demonstrate that the proposed method achieves sharper, more stable, and spectrally consistent reconstructions than existing baselines. The dataset and code for this work are publicly available.
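The abstract does not spell out how the frequency regularization is computed, so the following is only a minimal sketch of the general idea of penalizing differences between wavelet subbands of a rendered view and a reference view. The single-level Haar transform, the function names `haar_dwt2` and `wavelet_l1`, and the per-band weights are all illustrative assumptions, not the authors' implementation. Subband naming conventions (LH versus HL) also vary between libraries.

```python
def haar_dwt2(img):
    """Single-level 2D Haar transform of a list-of-lists image.

    Assumes even height and width. Returns four subbands, each half the
    size of the input in both directions.
    """
    bands = {"LL": [], "LH": [], "HL": [], "HH": []}
    for r in range(0, len(img), 2):
        rows = {name: [] for name in bands}
        for c in range(0, len(img[0]), 2):
            a, b = img[r][c], img[r][c + 1]
            d, e = img[r + 1][c], img[r + 1][c + 1]
            rows["LL"].append((a + b + d + e) / 2)  # local average (low frequency)
            rows["LH"].append((a - b + d - e) / 2)  # difference between columns
            rows["HL"].append((a + b - d - e) / 2)  # difference between rows
            rows["HH"].append((a - b - d + e) / 2)  # diagonal difference
        for name in bands:
            bands[name].append(rows[name])
    return bands


def wavelet_l1(rendered, target, weights):
    """Weighted mean absolute difference between matching Haar subbands.

    `weights` maps a subband name to its (hypothetical) loss weight.
    """
    rendered_bands = haar_dwt2(rendered)
    target_bands = haar_dwt2(target)
    total = 0.0
    for name, weight in weights.items():
        diffs = [
            abs(x - y)
            for row_r, row_t in zip(rendered_bands[name], target_bands[name])
            for x, y in zip(row_r, row_t)
        ]
        total += weight * sum(diffs) / len(diffs)
    return total
```

Under these assumptions, a "global" term would apply `wavelet_l1` to the full image and a "local" term would apply it to cropped patches; how the paper actually combines the two is not stated in the abstract.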

Evaluating GAN-LSTM for Smart Meter Anomaly Detection in Power Systems

Jan 14, 2026

Abstract: Advanced metering infrastructure (AMI) provides high-resolution electricity consumption data that can enhance monitoring, diagnosis, and decision making in modern power distribution systems. Detecting anomalies in these time-series measurements is challenging due to nonlinear, nonstationary, and multi-scale temporal behavior across diverse building types and operating conditions. This work presents a systematic, power-system-oriented evaluation of a GAN-LSTM framework for smart meter anomaly detection using the Large-scale Energy Anomaly Detection (LEAD) dataset, which contains one year of hourly measurements from 406 buildings. The proposed pipeline applies consistent preprocessing, temporal windowing, and threshold selection across all methods, and compares the GAN-LSTM approach against six widely used baselines, including statistical, kernel-based, reconstruction-based, and GAN-based models. Experimental results demonstrate that the GAN-LSTM significantly improves detection performance, achieving an F1-score of 0.89. These findings highlight the potential of adversarial temporal modeling as a practical tool for supporting asset monitoring, non-technical loss detection, and situational awareness in real-world power distribution networks. The code for this work is publicly available.
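The temporal windowing and threshold selection steps mentioned in the abstract can be illustrated with a small sketch. The window length, stride, percentile, and the scoring function below are assumptions chosen for illustration; in particular, `deviation_score` is a simple stand-in for whatever anomaly score a trained GAN-LSTM would produce, and none of the names come from the paper's code.

```python
import math


def sliding_windows(series, window, stride=1):
    """Split a 1D series into overlapping fixed-length windows."""
    return [
        series[i:i + window]
        for i in range(0, len(series) - window + 1, stride)
    ]


def deviation_score(window):
    """Stand-in anomaly score: largest absolute deviation from the window mean."""
    mean = sum(window) / len(window)
    return max(abs(value - mean) for value in window)


def percentile_threshold(scores, q):
    """Nearest-rank q-th percentile of the scores (q in 0..100)."""
    ordered = sorted(scores)
    rank = max(1, math.ceil(q / 100 * len(ordered)))
    return ordered[rank - 1]


def flag_anomalies(series, window, q):
    """Score every window and flag those strictly above the percentile threshold."""
    scores = [deviation_score(w) for w in sliding_windows(series, window)]
    threshold = percentile_threshold(scores, q)
    return [score > threshold for score in scores]
```

Applying the same windowing and the same threshold rule to every detector, as the abstract describes, keeps the comparison between methods from being driven by preprocessing differences rather than by the models themselves.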

Histogram-based Parameter-efficient Tuning for Passive Sonar Classification

Apr 22, 2025

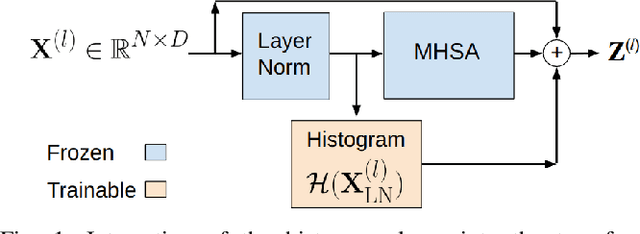

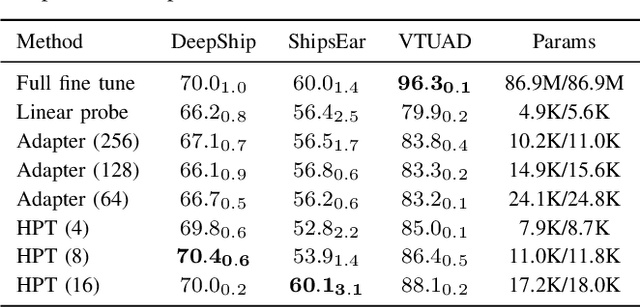

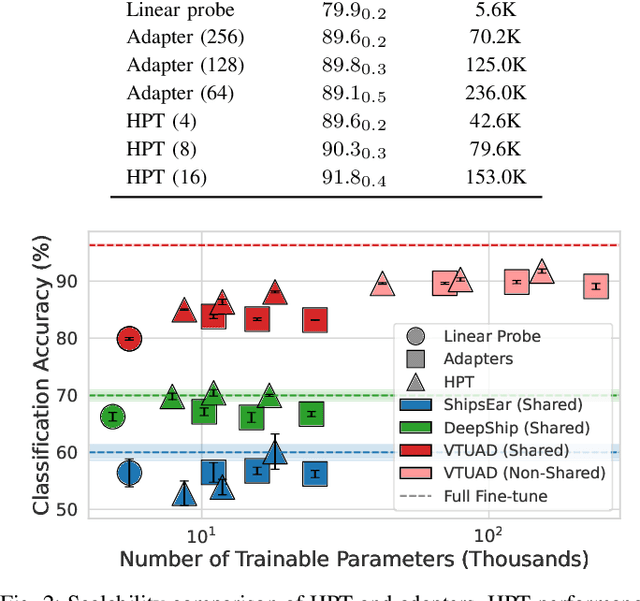

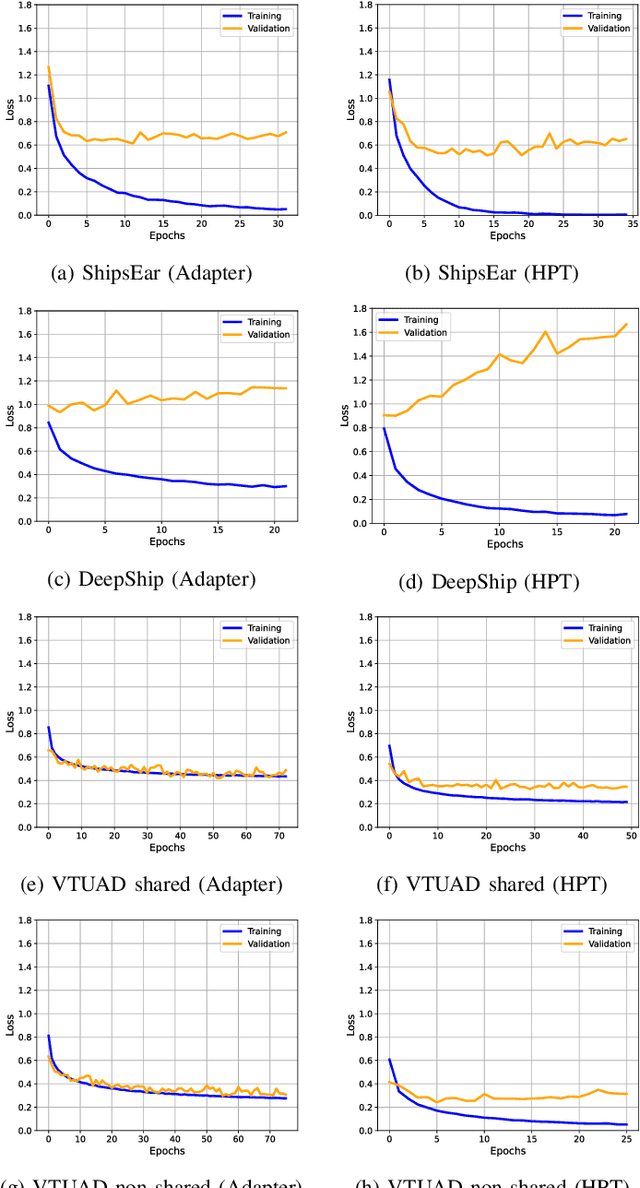

Abstract: Parameter-efficient transfer learning (PETL) methods adapt large artificial neural networks to downstream tasks without fine-tuning the entire model. However, existing additive methods, such as adapters, sometimes struggle to capture distributional shifts in intermediate feature embeddings. We propose a novel histogram-based parameter-efficient tuning (HPT) technique that captures the statistics of the target domain and modulates the embeddings. Experimental results on three downstream passive sonar datasets (ShipsEar, DeepShip, VTUAD) demonstrate that HPT outperforms conventional adapters. Notably, HPT achieves 91.8% vs. 89.8% accuracy on VTUAD. Furthermore, HPT trains faster and yields feature representations closer to those of fully fine-tuned models. Overall, HPT balances parameter savings and performance, provides a distribution-aware alternative to existing adapters, and shows a promising direction for scalable transfer learning in resource-constrained environments. The code is publicly available: https://github.com/Advanced-Vision-and-Learning-Lab/HLAST_DeepShip_ParameterEfficient.
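To make "captures the statistics of the target domain" more concrete, here is a minimal sketch of soft histogram binning over a set of feature values using radial basis function bins. The bin form, the function name `soft_histogram`, and the example centers and widths are assumptions for illustration; the abstract does not specify how HPT computes its histograms or how it modulates the embeddings, so this is not the authors' implementation.

```python
import math


def soft_histogram(values, centers, widths):
    """Average RBF response of `values` to each bin.

    Each bin has a center and a width parameter; a value contributes
    exp(-(width * (value - center))**2) to that bin, so the histogram is
    smooth in the bin parameters rather than a hard count.
    """
    histogram = []
    for center, width in zip(centers, widths):
        responses = [math.exp(-(width * (v - center)) ** 2) for v in values]
        histogram.append(sum(responses) / len(values))
    return histogram


# Two feature sets with different distributions give different histograms.
source_like = soft_histogram([0.0, 0.0, 1.0, 1.0], centers=[0.0, 1.0], widths=[10.0, 10.0])
shifted = soft_histogram([1.0, 1.0, 1.0, 1.0], centers=[0.0, 1.0], widths=[10.0, 10.0])
```

Because every operation is differentiable, the centers and widths in a sketch like this could in principle be learned, which is what lets a histogram summary react to a shift in the feature distribution.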

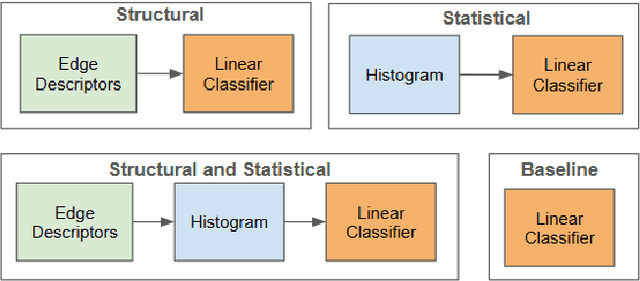

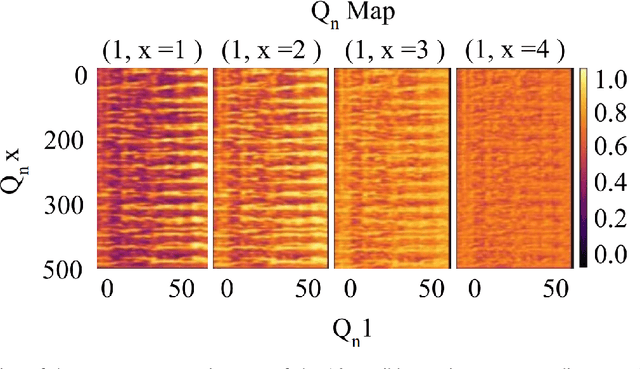

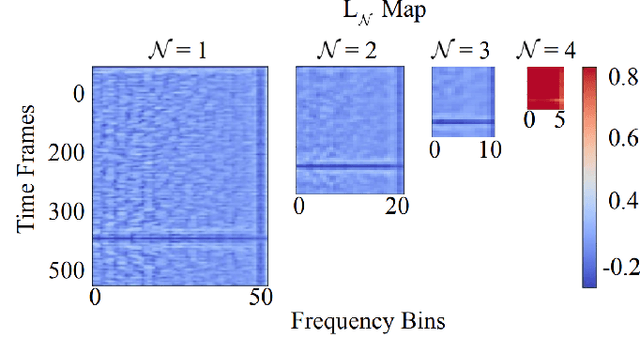

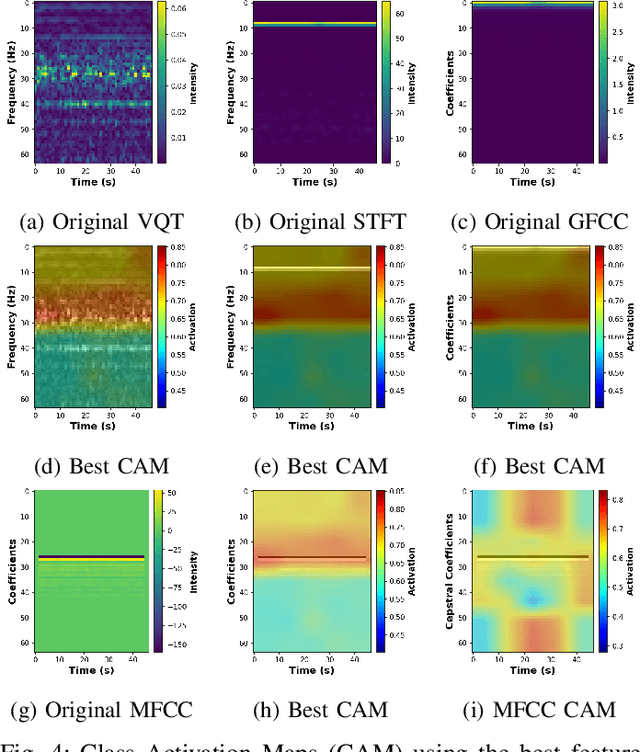

Quantitative Measures for Passive Sonar Texture Analysis

Apr 21, 2025

Abstract: Passive sonar signals contain complex characteristics often arising from environmental noise, vessel machinery, and propagation effects. While convolutional neural networks (CNNs) perform well on passive sonar classification tasks, they can struggle with statistical variations that occur in the data. To investigate this limitation, synthetic underwater acoustic datasets are generated that center on amplitude and period variations. Two metrics are proposed to quantify and validate these characteristics in the context of statistical and structural texture for passive sonar. These measures are applied to real-world passive sonar datasets to assess texture information in the signals and relate it to model performance. Results show that CNNs underperform on statistically textured signals, but incorporating explicit statistical texture modeling yields consistent improvements. These findings highlight the importance of quantifying texture information for passive sonar classification.

Benchmarking Suite for Synthetic Aperture Radar Imagery Anomaly Detection (SARIAD) Algorithms

Apr 10, 2025

Abstract: Anomaly detection is a key research challenge in computer vision and machine learning with applications in many fields from quality control to radar imaging. In radar imaging, specifically synthetic aperture radar (SAR), anomaly detection can be used for the classification, detection, and segmentation of objects of interest. However, there is no method for developing and benchmarking these methods on SAR imagery. To address this issue, we introduce SAR imagery anomaly detection (SARIAD). In conjunction with Anomalib, a deep-learning library for anomaly detection, SARIAD provides a comprehensive suite of algorithms and datasets for assessing and developing anomaly detection approaches on SAR imagery. SARIAD specifically integrates multiple SAR datasets along with tools to effectively apply various anomaly detection algorithms to SAR imagery. Several anomaly detection metrics and visualizations are available. Overall, SARIAD acts as a central package for benchmarking SAR models and datasets to allow for reproducible research in the field of anomaly detection in SAR imagery. This package is publicly available: https://github.com/Advanced-Vision-and-Learning-Lab/SARIAD.

Patch distribution modeling framework adaptive cosine estimator (PaDiM-ACE) for anomaly detection and localization in synthetic aperture radar imagery

Apr 10, 2025

Abstract: This work presents a new approach to anomaly detection and localization in synthetic aperture radar (SAR) imagery, expanding upon the existing patch distribution modeling framework (PaDiM). We introduce the adaptive cosine estimator (ACE) detection statistic. PaDiM uses the Mahalanobis distance at inference, an unbounded metric. ACE instead uses the cosine similarity metric, providing bounded anomaly detection scores. The proposed method is evaluated across multiple SAR datasets, with performance metrics including the area under the receiver operating characteristic curve (AUROC) at the image and pixel level, aiming for increased performance in anomaly detection and localization of SAR imagery. The code is publicly available: https://github.com/Advanced-Vision-and-Learning-Lab/PaDiM-LACE.
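The contrast between an unbounded Mahalanobis distance and a bounded cosine-style score can be seen in a small numerical sketch. This is a simplified illustration under stated assumptions: the covariance is taken to be diagonal so that whitening is a per-dimension scaling, and the reference `direction` is a hypothetical vector chosen for the example. It is not the PaDiM-ACE implementation, which may define the statistic and its reference differently.

```python
import math


def whiten(vector, variances):
    """Scale each dimension by its standard deviation (diagonal covariance assumed)."""
    return [v / math.sqrt(var) for v, var in zip(vector, variances)]


def mahalanobis(x, mean, variances):
    """Mahalanobis distance of x from the mean; grows without bound as x moves away."""
    centered = [xi - mi for xi, mi in zip(x, mean)]
    w = whiten(centered, variances)
    return math.sqrt(sum(wi * wi for wi in w))


def whitened_cosine(x, direction, mean, variances):
    """Cosine similarity between whitened (x - mean) and a whitened reference direction.

    By the Cauchy-Schwarz inequality the result always lies in [-1, 1].
    """
    centered = [xi - mi for xi, mi in zip(x, mean)]
    wx = whiten(centered, variances)
    wd = whiten(direction, variances)
    dot = sum(a * b for a, b in zip(wx, wd))
    norm = math.sqrt(sum(a * a for a in wx)) * math.sqrt(sum(b * b for b in wd))
    return dot / norm if norm > 0 else 0.0


mean, variances = [0.0, 0.0], [1.0, 4.0]
direction = [1.0, 2.0]  # hypothetical reference direction
near, far = [1.0, 2.0], [10.0, 20.0]

d_near, d_far = mahalanobis(near, mean, variances), mahalanobis(far, mean, variances)
c_near = whitened_cosine(near, direction, mean, variances)
c_far = whitened_cosine(far, direction, mean, variances)
```

Scaling the embedding by ten scales the Mahalanobis distance by ten, while the cosine score depends only on direction and stays within a fixed range, which is the property the abstract refers to as bounded anomaly detection scores.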

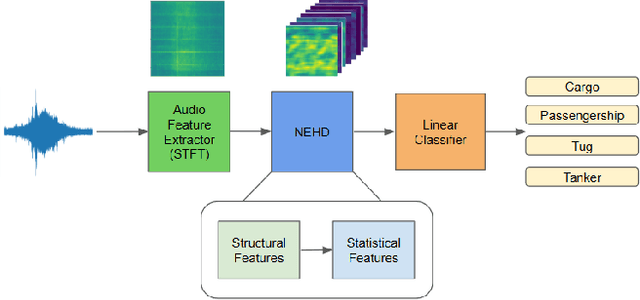

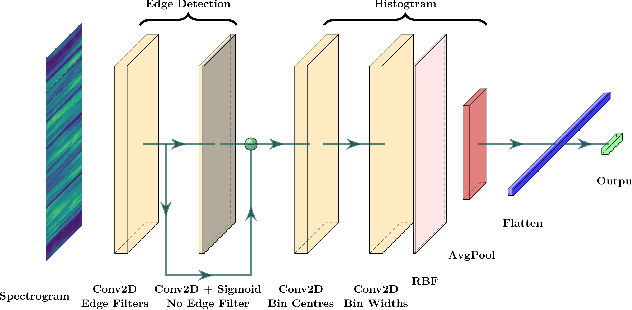

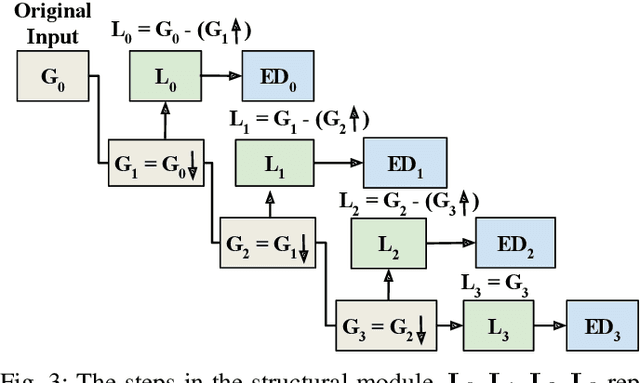

Neural Edge Histogram Descriptors for Underwater Acoustic Target Recognition

Mar 17, 2025

Abstract: Numerous maritime applications rely on the ability to recognize acoustic targets using passive sonar. While there is a growing reliance on pre-trained models for classification tasks, these models often require extensive computational resources and may not perform optimally when transferred to new domains due to dataset variations. To address these challenges, this work adapts the neural edge histogram descriptors (NEHD) method, originally developed for image classification, to classify passive sonar signals. We conduct a comprehensive evaluation of statistical and structural texture features, demonstrating that their combination achieves competitive performance with large pre-trained models. The proposed NEHD-based approach offers a lightweight and efficient solution for underwater target recognition, significantly reducing computational costs while maintaining accuracy.

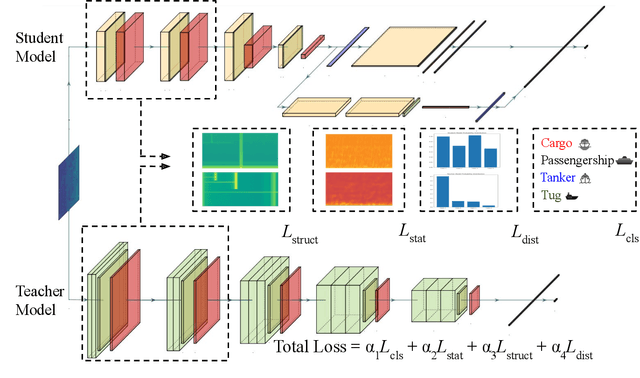

Structural and Statistical Audio Texture Knowledge Distillation (SSATKD) for Passive Sonar Classification

Jan 03, 2025

Abstract: Knowledge distillation has been successfully applied to various audio tasks, but its potential in underwater passive sonar target classification remains relatively unexplored. Existing methods often focus on high-level contextual information while overlooking essential low-level audio texture features needed to capture local patterns in sonar data. To address this gap, the Structural and Statistical Audio Texture Knowledge Distillation (SSATKD) framework is proposed for passive sonar target classification. SSATKD combines high-level contextual information with low-level audio textures by utilizing an Edge Detection Module for structural texture extraction and a Statistical Knowledge Extractor Module to capture signal variability and distribution. Experimental results confirm that SSATKD improves classification accuracy while optimizing memory and computational resources, making it well-suited for resource-constrained environments.

Transfer Learning for Passive Sonar Classification using Pre-trained Audio and ImageNet Models

Sep 20, 2024

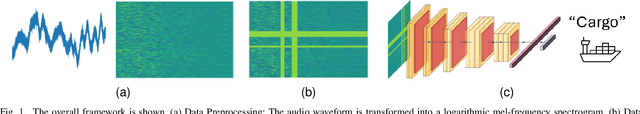

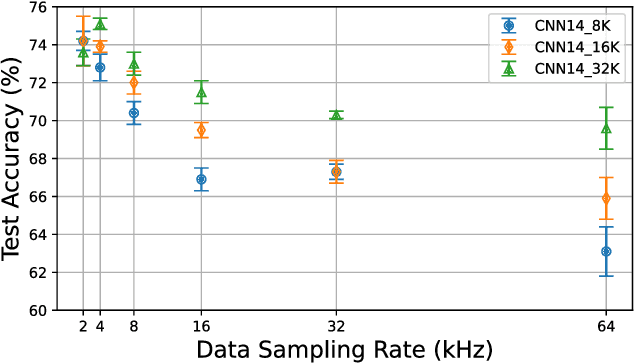

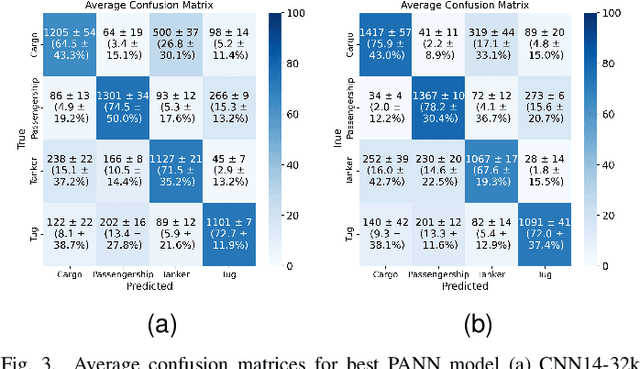

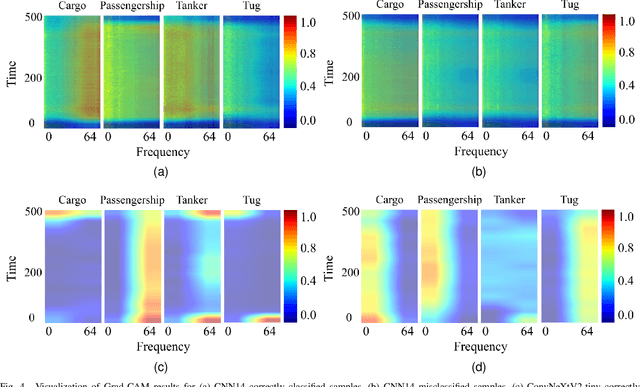

Abstract: Transfer learning is commonly employed to leverage large, pre-trained models and perform fine-tuning for downstream tasks. The most prevalent pre-trained models are initially trained using ImageNet. However, their ability to generalize can vary across different data modalities. This study compares pre-trained Audio Neural Networks (PANNs) and ImageNet pre-trained models within the context of underwater acoustic target recognition (UATR). It was observed that the ImageNet pre-trained models slightly outperform pre-trained audio models in passive sonar classification. We also analyzed the impact of audio sampling rates for model pre-training and fine-tuning. This study contributes to transfer learning applications of UATR, illustrating the potential of pre-trained models to address limitations caused by scarce, labeled data in the UATR domain.

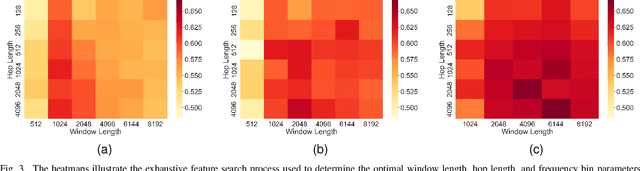

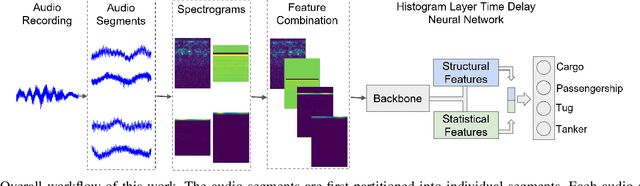

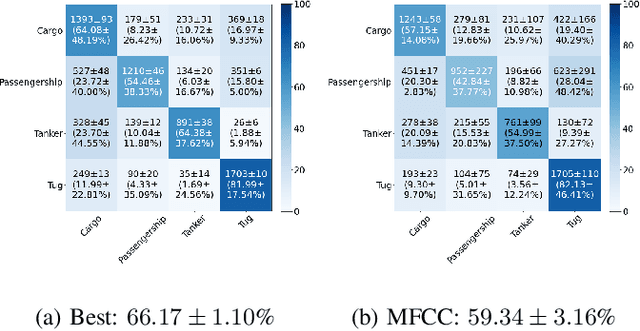

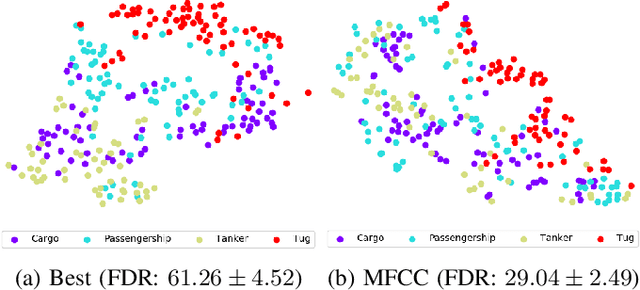

Investigation of Time-Frequency Feature Combinations with Histogram Layer Time Delay Neural Networks

Sep 20, 2024

Abstract: While deep learning has reduced the prevalence of manual feature extraction, transformation of data via feature engineering remains essential for improving model performance, particularly for underwater acoustic signals. The methods by which audio signals are converted into time-frequency representations and the subsequent handling of these spectrograms can significantly impact performance. This work demonstrates the performance impact of using different combinations of time-frequency features in a histogram layer time delay neural network. An optimal set of features is identified with results indicating that specific feature combinations outperform single data features.
