Tejashri Kelhe

Transfer Learning for Passive Sonar Classification using Pre-trained Audio and ImageNet Models

Sep 20, 2024

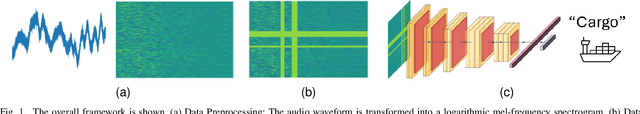

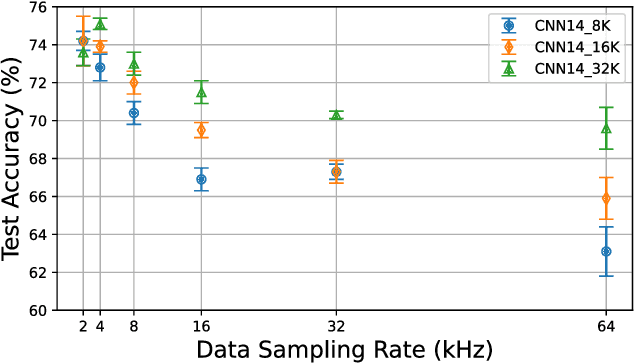

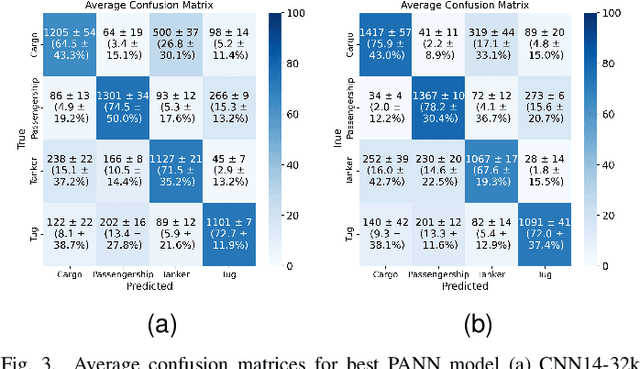

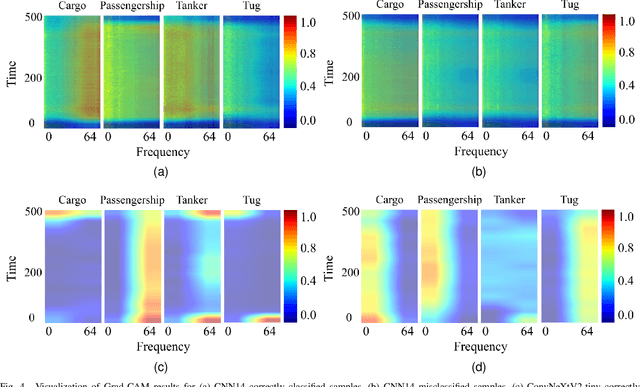

Abstract:Transfer learning is commonly employed to leverage large, pre-trained models and perform fine-tuning for downstream tasks. The most prevalent pre-trained models are initially trained using ImageNet. However, their ability to generalize can vary across different data modalities. This study compares pre-trained Audio Neural Networks (PANNs) and ImageNet pre-trained models within the context of underwater acoustic target recognition (UATR). It was observed that the ImageNet pre-trained models slightly out-perform pre-trained audio models in passive sonar classification. We also analyzed the impact of audio sampling rates for model pre-training and fine-tuning. This study contributes to transfer learning applications of UATR, illustrating the potential of pre-trained models to address limitations caused by scarce, labeled data in the UATR domain.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge