Joshua Karns

Continuous Ant-Based Neural Topology Search

Nov 21, 2020

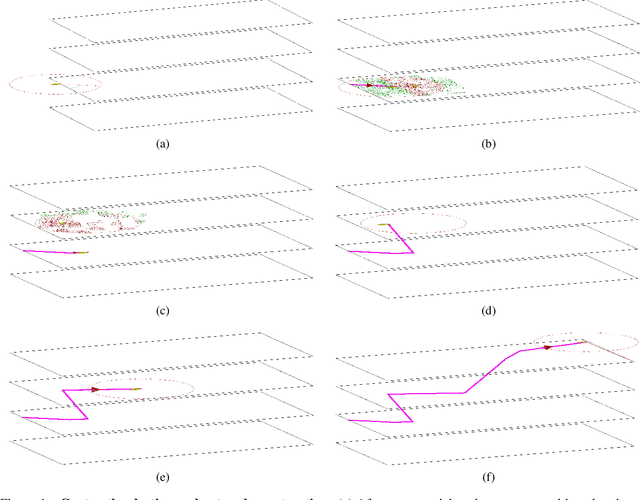

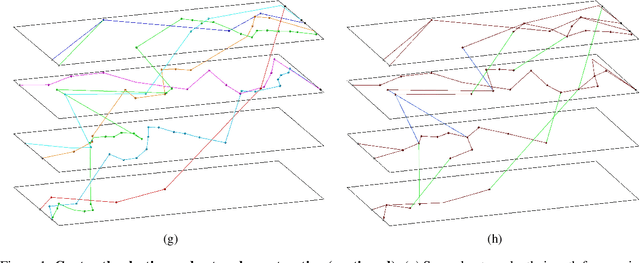

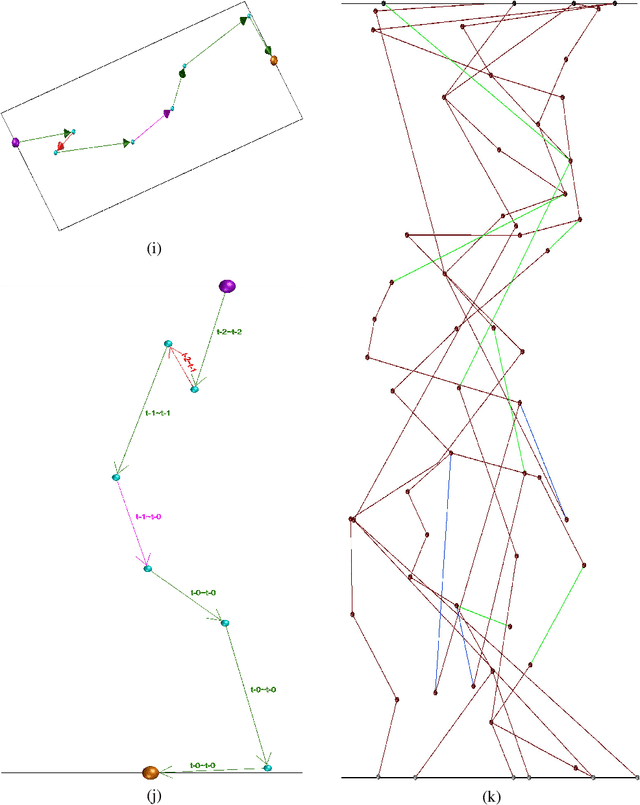

Abstract: This work introduces a novel, nature-inspired neural architecture search (NAS) algorithm based on ant colony optimization, Continuous Ant-based Neural Topology Search (CANTS). CANTS utilizes synthetic ants that move over a continuous search space based on the density and distribution of pheromones, and is strongly inspired by how ants move in the real world. The paths taken by the ant agents through the search space are used to construct artificial neural networks (ANNs). This continuous search space allows CANTS to automate the design of ANNs of any size, removing a key limitation inherent to many current NAS algorithms, which must operate within structures whose size is predetermined by the user. CANTS employs a distributed asynchronous strategy that allows it to scale to large high performance computing resources, works with a variety of recurrent memory cell structures, and makes use of a communal weight sharing strategy to reduce training time. The proposed procedure is evaluated on three real-world time series prediction problems in the field of power systems and compared to two state-of-the-art algorithms. Results show that CANTS is able to provide improved or competitive results on all of these problems, while also being easier to use, requiring half the number of user-specified hyper-parameters.

An Experimental Study of Weight Initialization and Weight Inheritance Effects on Neuroevolution

Sep 26, 2020

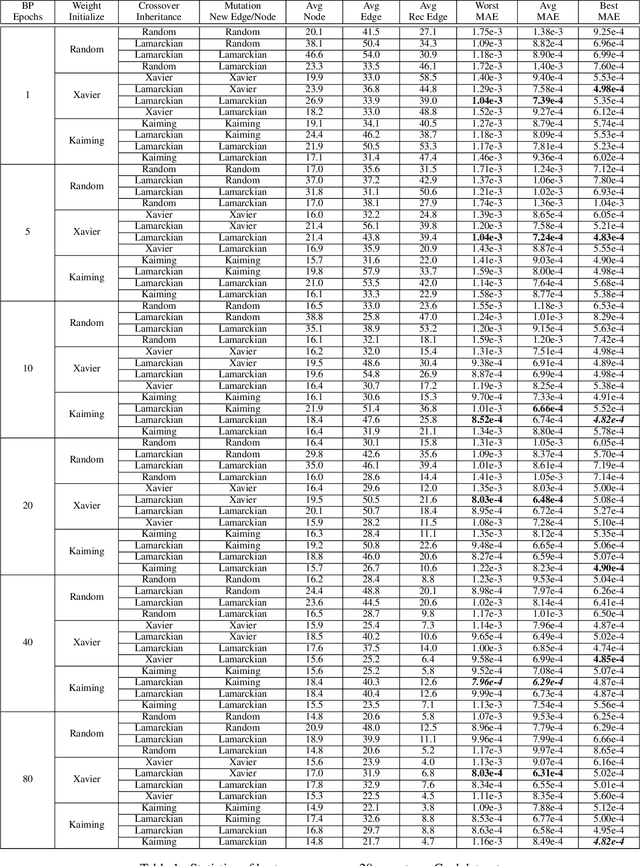

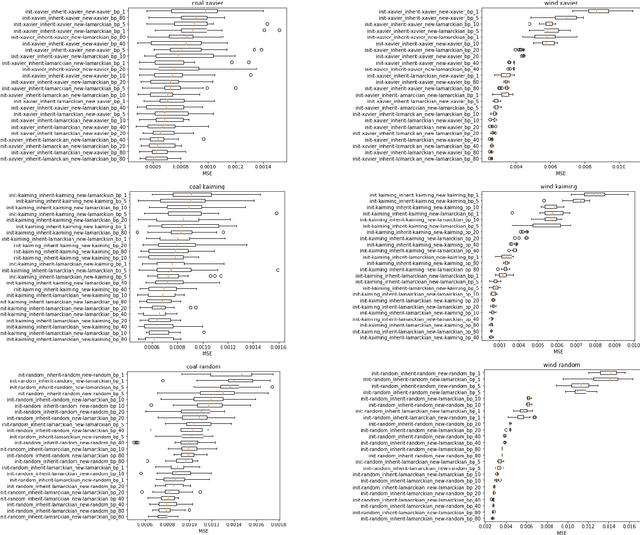

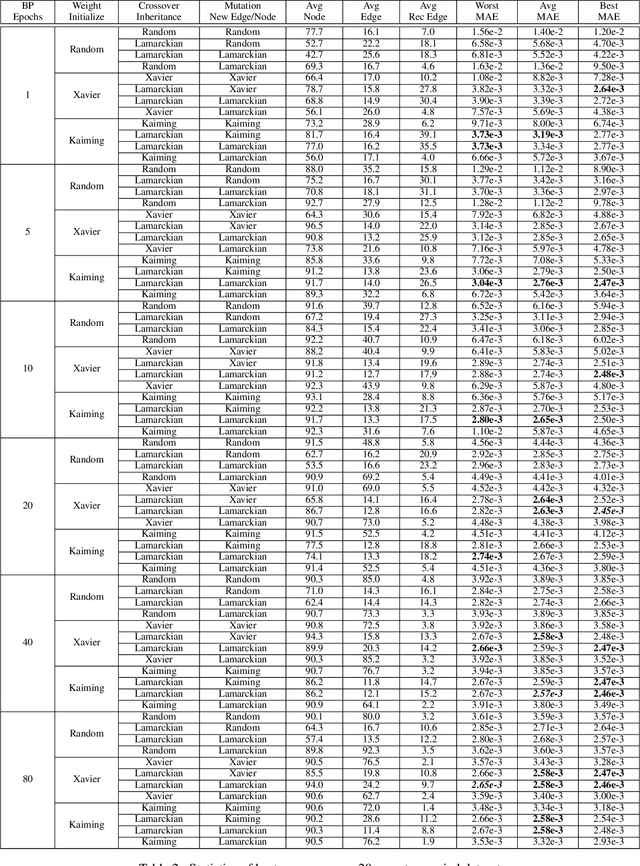

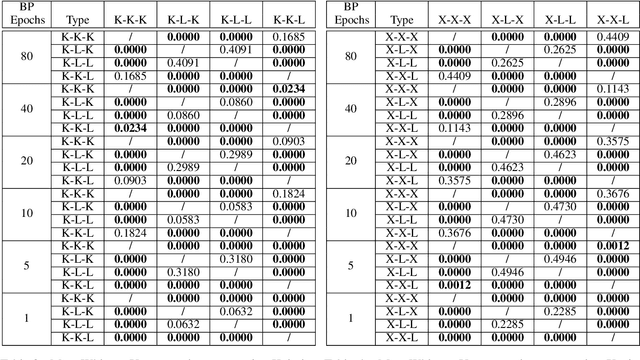

Abstract: Weight initialization is critical to successfully training artificial neural networks (ANNs), and even more so for recurrent neural networks (RNNs), which can easily suffer from vanishing and exploding gradients. In neuroevolution, where evolutionary algorithms are applied to neural architecture search, weights typically need to be initialized at three different times: when initial genomes (ANN architectures) are created at the beginning of the search, when offspring genomes are generated by crossover, and when new nodes or edges are created during mutation. This work explores the difference between using Xavier, Kaiming, and uniform random weight initialization methods, as well as novel Lamarckian weight inheritance methods for initializing new weights during crossover and mutation operations. These are examined using the Evolutionary eXploration of Augmenting Memory Models (EXAMM) neuroevolution algorithm, which is capable of evolving RNNs with a variety of modern memory cells (e.g., LSTM, GRU, MGU, UGRNN, and Delta-RNN cells) as well as recurrent connections with varying time skips, through a high performance island-based distributed evolutionary algorithm. Results show, with statistical significance, that utilizing the Lamarckian strategies outperforms Kaiming, Xavier, and uniform random weight initialization, and can speed up neuroevolution by requiring fewer backpropagation epochs to be evaluated for each generated RNN.
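
To make the initialization schemes named in this abstract concrete, here is a minimal Python sketch. The Xavier (uniform) and Kaiming (normal) formulas are the standard textbook ones. The range for uniform random initialization and the `lamarckian_new_weight` helper are assumptions for illustration: the abstract does not spell out how the Lamarckian methods work, so the helper shows one plausible reading, in which a new weight is sampled from a normal distribution fitted to the parent's existing weights. It is not EXAMM's actual implementation.

```python
import math
import random
import statistics


def xavier_uniform(fan_in, fan_out, rng):
    """Standard Xavier/Glorot uniform draw: U(-b, b) with b = sqrt(6 / (fan_in + fan_out))."""
    bound = math.sqrt(6.0 / (fan_in + fan_out))
    return rng.uniform(-bound, bound)


def kaiming_normal(fan_in, rng):
    """Standard Kaiming/He normal draw: N(0, sqrt(2 / fan_in))."""
    return rng.gauss(0.0, math.sqrt(2.0 / fan_in))


def uniform_random(rng, low=-0.5, high=0.5):
    """Plain uniform draw; the [-0.5, 0.5] range is an assumed default."""
    return rng.uniform(low, high)


def lamarckian_new_weight(parent_weights, rng):
    """Hypothetical Lamarckian-style draw for a newly added node or edge.

    Assumption: the new weight is sampled from a normal distribution whose
    mean and standard deviation match the parent's trained weights.
    """
    mu = statistics.fmean(parent_weights)
    sigma = statistics.pstdev(parent_weights)
    return rng.gauss(mu, sigma)


if __name__ == "__main__":
    rng = random.Random(0)
    trained = [0.12, -0.30, 0.45, 0.08, -0.05]  # made-up parent weights
    print("xavier    :", xavier_uniform(4, 2, rng))
    print("kaiming   :", kaiming_normal(4, rng))
    print("uniform   :", uniform_random(rng))
    print("lamarckian:", lamarckian_new_weight(trained, rng))
```

The first three functions ignore any weights already trained, while the last reuses information from the parent, which is the distinction the abstract draws between fresh initialization and inheritance.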

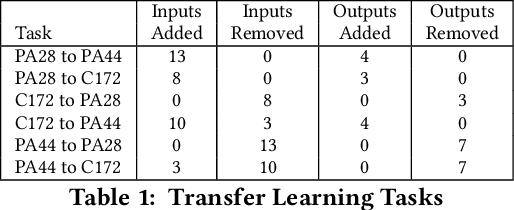

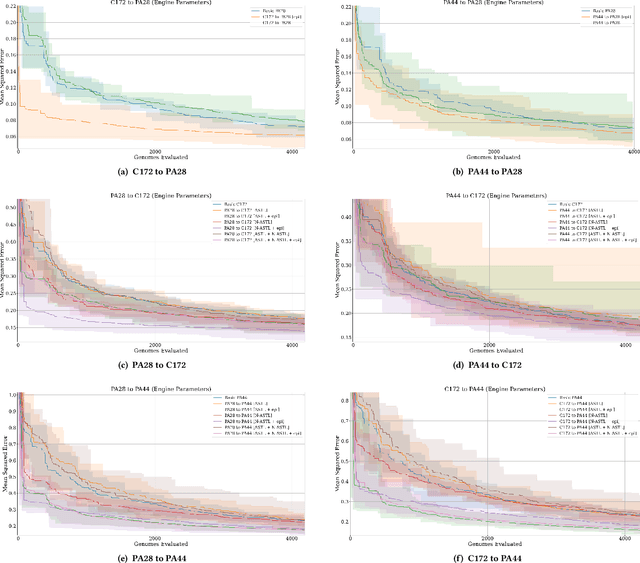

Neuroevolutionary Transfer Learning of Deep Recurrent Neural Networks through Network-Aware Adaptation

Jun 04, 2020

Abstract: Transfer learning entails taking an artificial neural network (ANN) that is trained on a source dataset and adapting it to a new target dataset. While this has been shown to be quite powerful, its use has generally been restricted by architectural constraints. Previously, in order to reuse and adapt an ANN's internal weights and structure, the underlying topology of the ANN being transferred across tasks had to remain mostly the same while a new output layer was attached, discarding the old output layer's weights. This work introduces network-aware adaptive structure transfer learning (N-ASTL), an advancement over prior efforts to remove this restriction. N-ASTL utilizes statistical information related to the source network's topology and weight distribution to inform how new input and output neurons are to be integrated into the existing structure. Results show improvements over the prior state of the art, including the ability to transfer in challenging real-world datasets not previously possible and improved generalization over RNNs trained without transfer.

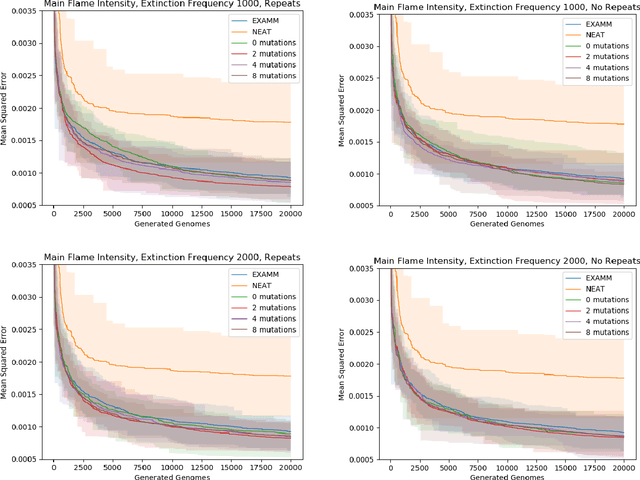

Improving Neuroevolution Using Island Extinction and Repopulation

May 15, 2020

Abstract: Neuroevolution commonly uses speciation strategies to better explore the search space of neural network architectures. One such speciation strategy is the use of islands, which are also popular for improving the performance and convergence of distributed evolutionary algorithms. However, in this approach some islands can become stagnant and fail to find new best solutions. In this paper, we propose utilizing extinction events and island repopulation to avoid premature convergence. We explore this with the Evolutionary eXploration of Augmenting Memory Models (EXAMM) neuroevolution algorithm. In this strategy, all members of the worst performing island are periodically killed off and the island is repopulated with mutated versions of the global best genome. This island-based strategy is additionally compared to the speciation strategy of NEAT (NeuroEvolution of Augmenting Topologies). Experiments were performed using two different real-world time series datasets (coal-fired power plant and aviation flight data). The results show, with statistical significance, that this island extinction and repopulation strategy evolves better global best genomes than both EXAMM's original island-based strategy and NEAT's speciation strategy.
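
The extinction and repopulation step described in this abstract can be sketched roughly as follows. This is an illustrative Python toy, not EXAMM's code. Several details are assumptions: genomes are stand-in lists of floats rather than RNN architectures, `mutate` is a hypothetical Gaussian perturbation, lower fitness is treated as better, and the "worst performing island" is taken to be the one whose best member has the worst fitness (the abstract does not say how islands are ranked).

```python
import random


def mutate(genome, rng, scale=0.1):
    """Hypothetical mutation: add Gaussian noise to every gene."""
    return [gene + rng.gauss(0.0, scale) for gene in genome]


def extinction_and_repopulation(islands, fitness, rng):
    """Wipe the worst island and refill it with mutants of the global best.

    islands: list of islands, each a list of genomes (modified in place).
    fitness: callable mapping a genome to a score, lower is better (assumed).
    Returns the index of the island that went extinct.
    """
    # Global best genome across every island.
    global_best = min(
        (genome for island in islands for genome in island), key=fitness
    )
    # Assumed ranking rule: an island is as good as its best member.
    worst = max(
        range(len(islands)),
        key=lambda i: min(fitness(g) for g in islands[i]),
    )
    size = len(islands[worst])
    # Kill off every member, then repopulate to the same size.
    islands[worst] = [mutate(global_best, rng) for _ in range(size)]
    return worst


if __name__ == "__main__":
    rng = random.Random(1)
    islands = [[[0.0], [0.1]], [[5.0], [6.0]], [[1.0], [2.0]]]
    extinct = extinction_and_repopulation(islands, lambda g: abs(g[0]), rng)
    print("extinct island:", extinct)
    print("repopulated   :", islands[extinct])
```

In a full search this step would run periodically, for example after a fixed number of generated genomes, with normal crossover and mutation continuing on all islands in between.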
