Alexander Ororbia II

Neuroevolutionary Transfer Learning of Deep Recurrent Neural Networks through Network-Aware Adaptation

Jun 04, 2020

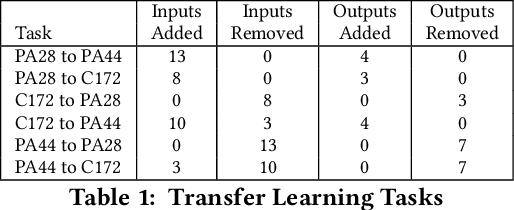

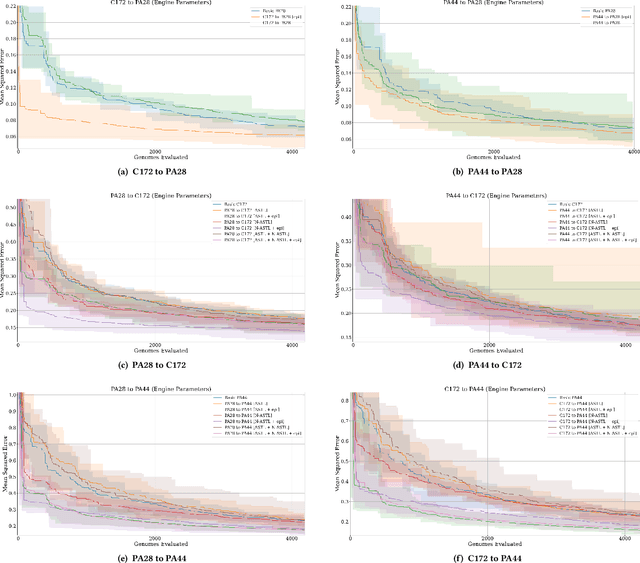

Abstract: Transfer learning entails taking an artificial neural network (ANN) trained on a source dataset and adapting it to a new target dataset. While this has been shown to be quite powerful, its use has generally been restricted by architectural constraints. Previously, to reuse and adapt an ANN's internal weights and structure, the topology of the ANN being transferred across tasks had to remain mostly the same: a new output layer was attached and the old output layer's weights were discarded. This work introduces network-aware adaptive structure transfer learning (N-ASTL), an advancement over prior efforts to remove this restriction. N-ASTL uses statistical information about the source network's topology and weight distribution to inform how new input and output neurons are integrated into the existing structure. Results show improvements over the prior state of the art, including the ability to transfer on challenging real-world datasets where this was not previously possible, and improved generalization over recurrent neural networks (RNNs) trained without transfer.
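The abstract does not spell out N-ASTL's exact integration rules, so the following is only a minimal sketch of the general idea of statistics-driven neuron insertion, not the authors' method. It assumes that a new input neuron receives as many outgoing edges as the mean fan-out of the existing input neurons (and a new output neuron as many incoming edges as the mean fan-in of the existing outputs), with each new weight drawn from a Gaussian fitted to the existing input (or output) weights. The edge-dictionary representation and the names `add_neuron` and `_degree_and_weight_stats` are hypothetical, and recurrent edges are ignored for brevity.

```python
import random
import statistics


def _degree_and_weight_stats(edges, nodes, outgoing):
    """Return (mean degree, weight mean, weight std) for a group of existing neurons.

    outgoing=True inspects edges leaving `nodes` (fan-out, as for input neurons);
    outgoing=False inspects edges entering `nodes` (fan-in, as for output neurons).
    """
    side = 0 if outgoing else 1
    node_set = set(nodes)
    degrees = [sum(1 for e in edges if e[side] == n) for n in nodes]
    weights = [w for e, w in edges.items() if e[side] in node_set]
    return statistics.mean(degrees), statistics.mean(weights), statistics.pstdev(weights)


def add_neuron(edges, group, hidden, new_node, outgoing, rng):
    """Hypothetical statistics-driven insertion of a new input or output neuron.

    edges:  dict mapping (src, dst) -> weight (feed-forward edges only)
    group:  existing input neurons (outgoing=True) or output neurons (outgoing=False)
    hidden: list of hidden neurons the new neuron may connect to
    Returns the hidden neurons the new neuron was connected to.
    """
    mean_deg, mu, sigma = _degree_and_weight_stats(edges, group, outgoing)
    # Match the group's average connectivity, clamped to [1, len(hidden)].
    k = min(len(hidden), max(1, round(mean_deg)))
    targets = rng.sample(hidden, k)
    for h in targets:
        # New weights follow the empirical distribution of the existing group's weights.
        edge = (new_node, h) if outgoing else (h, new_node)
        edges[edge] = rng.gauss(mu, sigma)
    group.append(new_node)
    return targets
```

For example, starting from a toy network with inputs `x1`, `x2`, hidden neurons `h1` to `h3`, and output `y1`, `add_neuron(edges, inputs, hidden, "x3", outgoing=True, rng=random.Random(0))` wires `x3` into the hidden layer using only the existing inputs' statistics, so the transferred hidden weights are left untouched.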

A Hybrid Algorithm for Metaheuristic Optimization

May 26, 2019

Abstract: We propose a novel, flexible algorithm for combining metaheuristic optimizers for non-convex optimization problems. Our approach treats the constituent optimizers as a team of complex agents that communicate information with one another at various intervals during the simulation process. The information produced by each individual agent can be combined in various ways via higher-level operators. In our experiments on key benchmark functions, we investigate how the performance of our algorithm varies with respect to several of its key modifiable properties. Finally, we apply our proposed algorithm to classification problems involving the optimization of support-vector machine classifiers.
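The abstract does not name the constituent optimizers or the higher-level operators, so this is a minimal sketch of the team-of-agents idea under stated assumptions. Two simple optimizers (a Gaussian hill climber and simulated annealing) act as agents on the sphere benchmark, and every `interval` iterations a hypothetical `share_best` operator moves all agents to the team's best point. The class and function names are illustrative only and are not taken from the paper.

```python
import math
import random


def sphere(x):
    """Standard sphere benchmark: minimum 0 at the origin."""
    return sum(v * v for v in x)


class HillClimber:
    """Accepts a Gaussian perturbation only if it improves the objective."""

    def __init__(self, x, step, rng):
        self.x, self.step, self.rng = list(x), step, rng

    def iterate(self, f):
        cand = [v + self.rng.gauss(0, self.step) for v in self.x]
        if f(cand) < f(self.x):
            self.x = cand


class SimulatedAnnealer:
    """Simulated annealing with Gaussian moves and geometric cooling."""

    def __init__(self, x, step, temp, cooling, rng):
        self.x, self.step, self.rng = list(x), step, rng
        self.temp, self.cooling = temp, cooling

    def iterate(self, f):
        cand = [v + self.rng.gauss(0, self.step) for v in self.x]
        delta = f(cand) - f(self.x)
        # Always accept non-worsening moves; accept worse ones with probability exp(-delta / T).
        if delta <= 0 or self.rng.random() < math.exp(-delta / self.temp):
            self.x = cand
        self.temp *= self.cooling


def share_best(agents, f):
    """Hypothetical higher-level operator: every agent continues from the team's best point."""
    best = min((a.x for a in agents), key=f)
    for a in agents:
        a.x = list(best)


def hybrid_optimize(f, agents, iters, interval, combine=share_best):
    """Run all agents in lockstep and apply `combine` every `interval` iterations."""
    best = list(min((a.x for a in agents), key=f))
    for t in range(1, iters + 1):
        for a in agents:
            a.iterate(f)
            if f(a.x) < f(best):
                best = list(a.x)  # track the best point seen by any agent
        if t % interval == 0:
            combine(agents, f)
    return best
```

Usage: `team = [HillClimber([5.0] * 3, 0.5, rng), SimulatedAnnealer([5.0] * 3, 0.5, 1.0, 0.99, rng)]` followed by `hybrid_optimize(sphere, team, iters=500, interval=25)`. Passing a different `combine` function is one way to experiment with how the communication operator and the interval affect performance, in the spirit of the study described above.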
