Joseph N Stember

Uncertainty Quantification in Detecting Choroidal Metastases on MRI via Evolutionary Strategies

Apr 12, 2024

Abstract: Uncertainty quantification plays a vital role in the practical implementation of AI in radiology because it addresses growing concerns about trustworthiness. Given the difficulty of acquiring large, annotated datasets in this field, there is a need for methods that enable uncertainty quantification in small-data AI approaches tailored to radiology images. In this study, we focused on uncertainty quantification within the small-data, evolutionary strategies-based technique of deep neuroevolution (DNE). Specifically, we employed DNE to train a simple convolutional neural network (CNN) on MRI images of the eyes for binary classification. The goal was to distinguish normal eyes from those with metastatic tumors called choroidal metastases. The training set comprised 18 images with choroidal metastases and 18 without tumors, while the testing set contained 15 tumor-containing and 15 normal images. We trained the CNN weights via DNE for approximately 40,000 episodes, ultimately converging to 100% training set accuracy. We saved all models that achieved maximal training set accuracy. By applying these models to the testing set, we established an ensemble method for uncertainty quantification. For each testing set image, the saved models produced a distribution of predictions across the two classes, normal and tumor-containing, and the relative frequencies of these predictions permitted uncertainty quantification of the model predictions. Intriguingly, we found that subjective features appreciated by human radiologists explained the images for which uncertainty was high, highlighting the significance of uncertainty quantification in AI-driven radiological analyses.
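The abstract describes the ensemble step only in outline: every saved model votes on each testing set image, and the relative vote frequencies quantify uncertainty. The Python sketch below illustrates that idea under stated assumptions. Predictions use a hypothetical 0/1 encoding (0 = normal, 1 = tumor-containing), the function name `ensemble_uncertainty` is hypothetical, and the binary entropy of the vote split is our own choice of summary statistic, not necessarily the measure used in the study.

```python
import math


def ensemble_uncertainty(predictions):
    """Summarize one testing image's votes from the saved ensemble of models.

    `predictions` holds one label per saved model: 0 = normal, 1 = tumor
    (hypothetical encoding).
    """
    if not predictions:
        raise ValueError("need at least one model prediction")
    n = len(predictions)
    # Relative frequency of each class across the saved models.
    p_tumor = sum(1 for label in predictions if label == 1) / n
    p_normal = 1.0 - p_tumor
    # Binary entropy in bits (assumed measure): 0 when every model agrees,
    # 1 when the ensemble is split 50/50.
    entropy = -sum(p * math.log2(p) for p in (p_tumor, p_normal) if p > 0)
    if p_tumor > 0.5:
        majority = "tumor"
    elif p_tumor < 0.5:
        majority = "normal"
    else:
        majority = "tie"
    return {"p_tumor": p_tumor, "p_normal": p_normal,
            "entropy": entropy, "majority": majority}


if __name__ == "__main__":
    # Hypothetical votes from four saved models on three testing images.
    votes = {"image_a": [1, 1, 1, 1], "image_b": [1, 1, 1, 0], "image_c": [0, 1, 0, 1]}
    # Rank images from most to least uncertain so a radiologist can review them.
    ranked = sorted(votes, key=lambda k: ensemble_uncertainty(votes[k])["entropy"],
                    reverse=True)
    for name in ranked:
        print(name, ensemble_uncertainty(votes[name]))
```

Ranking images by entropy is one way to surface the high-uncertainty cases that, per the abstract, turned out to have features a radiologist could explain.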

Deep Neuroevolution Squeezes More out of Small Neural Networks and Small Training Sets: Sample Application to MRI Brain Sequence Classification

Dec 24, 2021

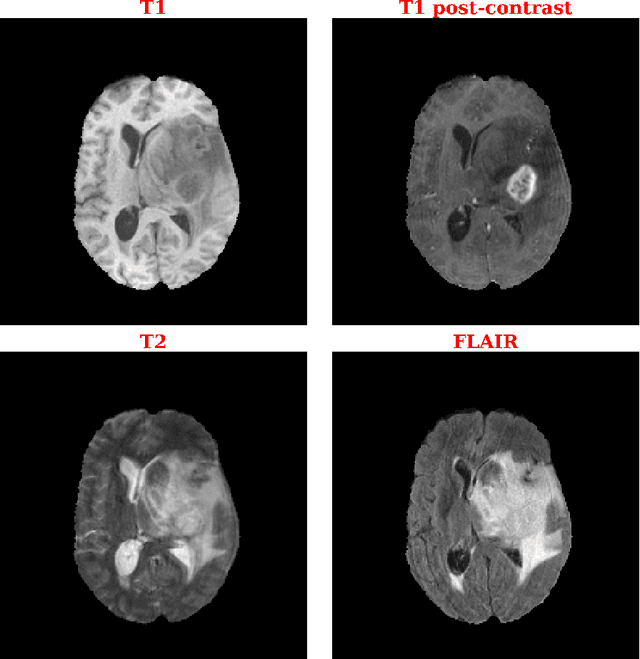

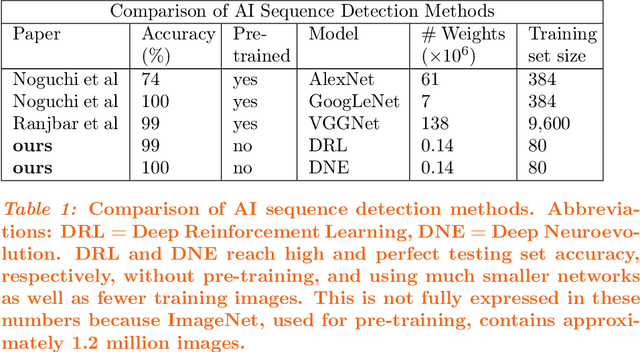

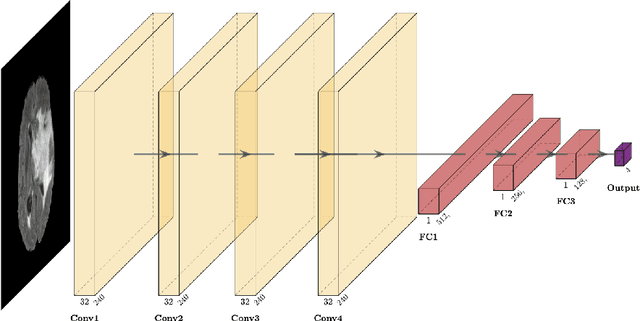

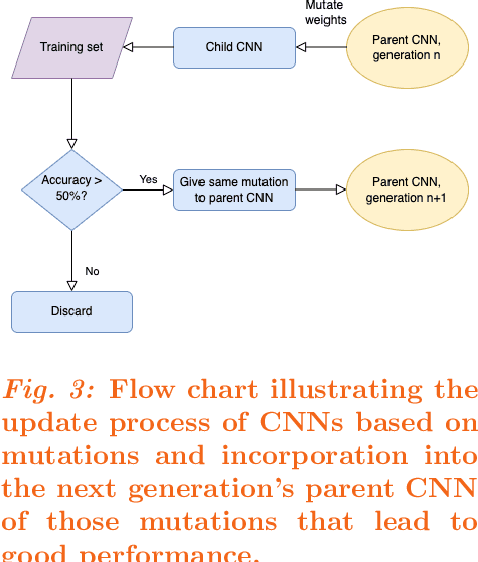

Abstract: Purpose: Deep neuroevolution (DNE) holds the promise of providing radiology artificial intelligence (AI) that performs well with small neural networks and small training sets. We seek to realize this potential via a proof-of-principle application to MRI brain sequence classification. Methods: We analyzed a training set of 20 patients, each with four sequences/weightings: T1, T1 post-contrast, T2, and T2-FLAIR. We trained the parameters of a relatively small convolutional neural network (CNN) as follows. First, we randomly mutated the CNN weights to produce child CNNs. We then measured each child CNN's training set accuracy, using it as the fitness evaluation metric. We identified the fittest child CNNs and incorporated their mutations into the parent CNN. This selectively mutated parent became the next generation's parent CNN. We repeated this process for approximately 50,000 generations. Results: DNE achieved monotonic convergence to 100% training set accuracy. DNE also converged monotonically to 100% testing set accuracy. Conclusions: DNE can achieve perfect accuracy with small training sets and small CNNs. Particularly when combined with deep reinforcement learning, DNE may provide a path forward in the quest to make radiology AI more human-like in its ability to learn. DNE may well turn out to be a key component of the much-anticipated meta-learning regime of radiology AI algorithms that can adapt to new tasks and new image types, similar to human radiologists.
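The mutate, evaluate, select, and update loop described in the Methods can be sketched in Python with NumPy. This is a minimal illustration, not the authors' implementation: a linear classifier on toy data stands in for the CNN, and the population size, mutation scale, the choice to average the fittest children's mutations, and the rule that the parent only accepts updates that do not lower training accuracy are all assumptions. The function names `accuracy` and `deep_neuroevolution` are hypothetical.

```python
import numpy as np


def accuracy(weights, X, y):
    """Fitness: training-set accuracy of a linear classifier (stand-in for the CNN).

    The last entry of `weights` is the bias term.
    """
    logits = X @ weights[:-1] + weights[-1]
    predictions = (logits > 0).astype(int)
    return float(np.mean(predictions == y))


def deep_neuroevolution(X, y, n_generations=500, n_children=20, top_k=5,
                        sigma=0.1, seed=0):
    """Hypothetical DNE-style loop: mutate, evaluate fitness, select, update parent."""
    rng = np.random.default_rng(seed)
    parent = rng.normal(0.0, 0.1, size=X.shape[1] + 1)  # weights + bias
    history = []
    for _ in range(n_generations):
        # 1) Randomly mutate the parent weights to create child networks.
        noise = rng.normal(0.0, sigma, size=(n_children, parent.size))
        children = parent + noise
        # 2) Fitness evaluation: training-set accuracy of each child.
        fitness = np.array([accuracy(child, X, y) for child in children])
        # 3) Identify the fittest children (indices of the top_k scores).
        fittest = np.argsort(fitness)[-top_k:]
        # 4) Incorporate their mutations into the parent (averaging is assumed).
        candidate = parent + noise[fittest].mean(axis=0)
        # Assumed elitist rule: never accept an update that lowers accuracy.
        if accuracy(candidate, X, y) >= accuracy(parent, X, y):
            parent = candidate
        history.append(accuracy(parent, X, y))
    return parent, history


if __name__ == "__main__":
    # Synthetic, linearly separable toy data (not MRI images).
    rng = np.random.default_rng(1)
    X = np.vstack([rng.normal(2.0, 0.5, (10, 2)), rng.normal(-2.0, 0.5, (10, 2))])
    y = np.array([1] * 10 + [0] * 10)
    weights, history = deep_neuroevolution(X, y)
    print("final training accuracy:", history[-1])
```

Because of the assumed acceptance rule, the recorded training accuracy can never decrease from one generation to the next; in this sketch that monotonic curve is a design choice, not evidence about how the study obtained its reported convergence.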

Reinforcement learning using Deep Q Networks and Q learning accurately localizes brain tumors on MRI with very small training sets

Oct 21, 2020

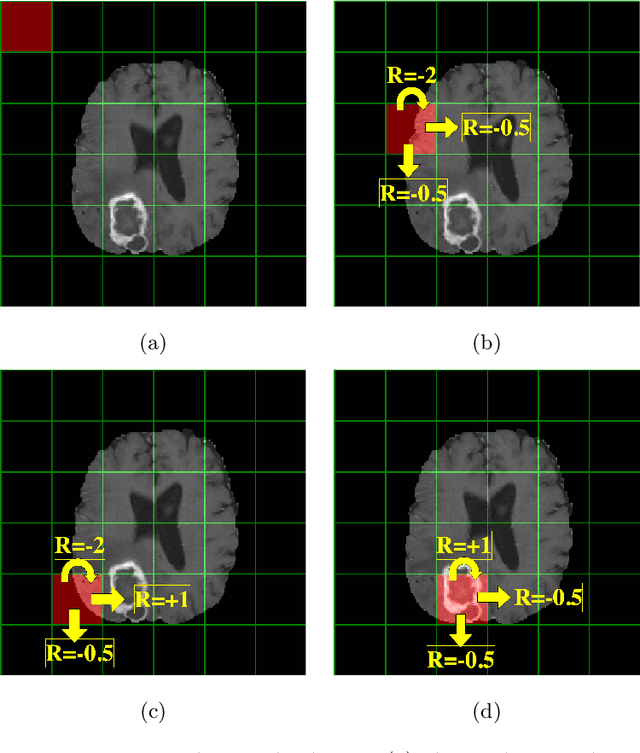

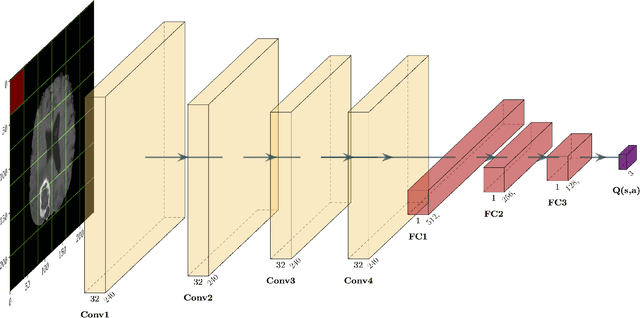

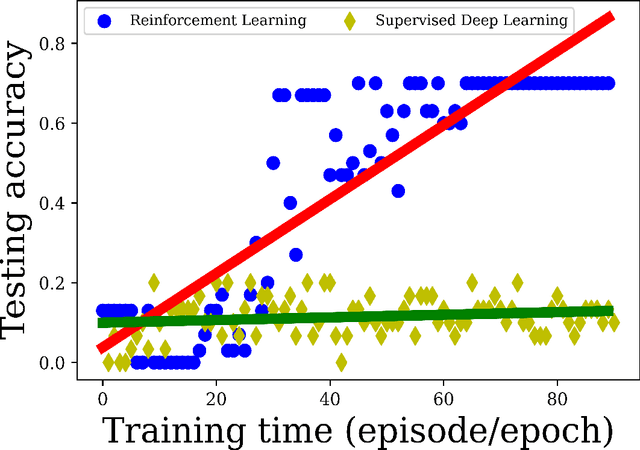

Abstract: Purpose: Supervised deep learning in radiology suffers from notorious inherent limitations: 1) it requires large, hand-annotated data sets; 2) it is non-generalizable; and 3) it lacks explainability and intuition. We have recently proposed reinforcement learning to address all three. However, we applied it to images with radiologist eye-tracking points, which limits the state-action space. Here we generalize Deep Q-learning to a gridworld-based environment, so that only the images and image masks are required. Materials and Methods: We trained a Deep Q network on 30 two-dimensional image slices from the BraTS brain tumor database. Each image contained one lesion. We then tested the trained Deep Q network on a separate set of 30 testing set images. For comparison, we also trained and tested a keypoint detection supervised deep learning network on the same training and testing images. Results: Whereas the supervised approach quickly overfit the training data and predictably performed poorly on the testing set (11% accuracy), the Deep Q-learning approach showed progressively improving generalizability to the testing set over training time, reaching 70% accuracy. Conclusion: We have shown a proof-of-principle application of reinforcement learning to radiological images, here using 2D contrast-enhanced MRI brain images with the goal of localizing brain tumors. This represents a generalization of recent work to a gridworld setting, which is naturally suited to analyzing medical images.
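The study used a Deep Q network whose input is the image; the underlying gridworld idea can be illustrated more compactly with tabular Q-learning, a deliberately simpler technique swapped in here. In this Python sketch the state is only the agent's grid cell, the lesion cell is assumed known from the mask, and the rewards (+1 for reaching the lesion, -0.01 per step), grid size, and hyperparameters are hypothetical choices. As a result, the learned table localizes only this one lesion and cannot generalize across images the way a Deep Q network can. The names `step`, `train_q`, and `greedy_path` are hypothetical.

```python
import random

# Hypothetical action set: move up, down, left, or right by one grid cell.
ACTIONS = [(-1, 0), (1, 0), (0, -1), (0, 1)]


def step(pos, action, size, lesion):
    """Move within a size x size grid; reaching the lesion cell ends the episode."""
    r = min(max(pos[0] + action[0], 0), size - 1)  # clamp at the image border
    c = min(max(pos[1] + action[1], 0), size - 1)
    new_pos = (r, c)
    if new_pos == lesion:
        return new_pos, 1.0, True   # assumed reward for localizing the lesion
    return new_pos, -0.01, False    # assumed small penalty per step


def train_q(size, lesion, episodes=3000, alpha=0.5, gamma=0.9,
            epsilon=0.1, max_steps=100, seed=0):
    """Tabular Q-learning with epsilon-greedy exploration."""
    rng = random.Random(seed)
    q = {(r, c): [0.0] * len(ACTIONS) for r in range(size) for c in range(size)}
    for _ in range(episodes):
        pos = (rng.randrange(size), rng.randrange(size))  # random start cell
        for _ in range(max_steps):
            if pos == lesion:
                break
            if rng.random() < epsilon:
                a = rng.randrange(len(ACTIONS))  # explore
            else:
                a = max(range(len(ACTIONS)), key=lambda i: q[pos][i])  # exploit
            new_pos, reward, done = step(pos, ACTIONS[a], size, lesion)
            # Q-learning target: reward plus discounted best next-state value.
            target = reward if done else reward + gamma * max(q[new_pos])
            q[pos][a] += alpha * (target - q[pos][a])
            pos = new_pos
            if done:
                break
    return q


def greedy_path(q, start, size, lesion, max_steps=50):
    """Follow the learned greedy policy from `start` toward the lesion."""
    pos, path = start, [start]
    for _ in range(max_steps):
        if pos == lesion:
            break
        a = max(range(len(ACTIONS)), key=lambda i: q[pos][i])
        pos, _, _ = step(pos, ACTIONS[a], size, lesion)
        path.append(pos)
    return path


if __name__ == "__main__":
    lesion = (3, 1)  # hypothetical lesion location taken from a mask
    q_table = train_q(size=5, lesion=lesion)
    print(greedy_path(q_table, start=(0, 4), size=5, lesion=lesion))
```

Replacing the table with a neural network that maps image patches to action values is what turns this into Deep Q-learning and lets the policy transfer to unseen images.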