José Manuel Gómez-Pérez

SPACE-IDEAS: A Dataset for Salient Information Detection in Space Innovation

Mar 25, 2024

Abstract: Detecting salient parts in text using natural language processing has been widely used to mitigate the effects of information overflow. Nevertheless, most of the datasets available for this task are derived mainly from academic publications. We introduce SPACE-IDEAS, a dataset for salient information detection from innovation ideas related to the Space domain. The text in SPACE-IDEAS varies greatly and includes informal, technical, academic and business-oriented writing styles. In addition to a manually annotated dataset we release an extended version that is annotated using a large generative language model. We train different sentence and sequential sentence classifiers, and show that the automatically annotated dataset can be leveraged using multitask learning to train better classifiers.

Capturing Pertinent Symbolic Features for Enhanced Content-Based Misinformation Detection

Jan 29, 2024

Abstract: Preventing the spread of misinformation is challenging. The detection of misleading content presents a significant hurdle due to its extreme linguistic and domain variability. Content-based models have managed to identify deceptive language by learning representations from textual data such as social media posts and web articles. However, aggregating representative samples of this heterogeneous phenomenon and implementing effective real-world applications remain elusive. Based on analytical work on the language of misinformation, this paper analyzes the linguistic attributes that characterize this phenomenon and how well some of the most popular misinformation datasets represent such features. We demonstrate that the appropriate use of pertinent symbolic knowledge in combination with neural language models is helpful in detecting misleading content. Our results achieve state-of-the-art performance in misinformation datasets across the board, showing that our approach offers a valid and robust alternative to multi-task transfer learning without requiring any additional training data. Furthermore, our results show evidence that structured knowledge can provide the extra boost required to address a complex and unpredictable real-world problem like misinformation detection, not only in terms of accuracy but also time efficiency and resource utilization.

Textual Entailment for Effective Triple Validation in Object Prediction

Jan 29, 2024

Abstract: Knowledge base population seeks to expand knowledge graphs with facts that are typically extracted from a text corpus. Recently, language models pretrained on large corpora have been shown to contain factual knowledge that can be retrieved using cloze-style strategies. Such an approach enables zero-shot recall of facts, showing competitive results in object prediction compared to supervised baselines. However, prompt-based fact retrieval can be brittle and depend heavily on the prompts and context used, which may produce results that are unintended or hallucinatory. We propose to use textual entailment to validate facts extracted from language models through cloze statements. Our results show that triple validation based on textual entailment improves language model predictions in different training regimes. Furthermore, we show that entailment-based triple validation is also effective for validating candidate facts extracted from other sources, including existing knowledge graphs and text passages where named entities are recognized.

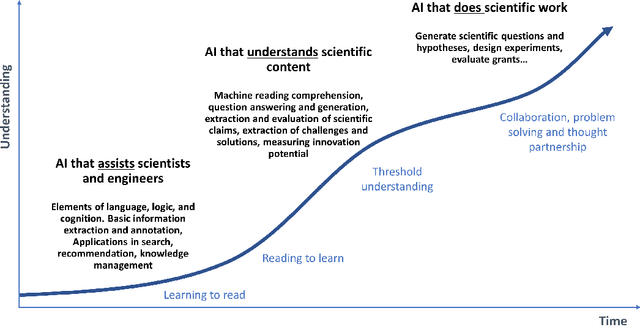

Towards Language-driven Scientific AI

Oct 31, 2022

Abstract: Inspired by recent and revolutionary developments in AI, particularly in language understanding and generation, we set about designing AI systems that are able to address complex scientific tasks that challenge human capabilities to make new discoveries. Central to our approach is the notion of natural language as the core representation, reasoning, and exchange format between scientific AI and human scientists. In this paper, we identify and discuss some of the main research challenges to accomplish such a vision.

Generating Quizzes to Support Training on Quality Management and Assurance in Space Science and Engineering

Oct 07, 2022

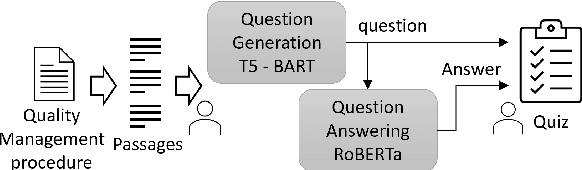

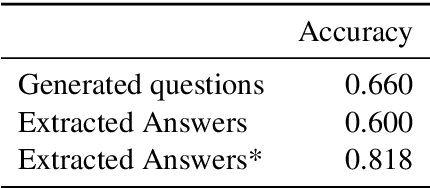

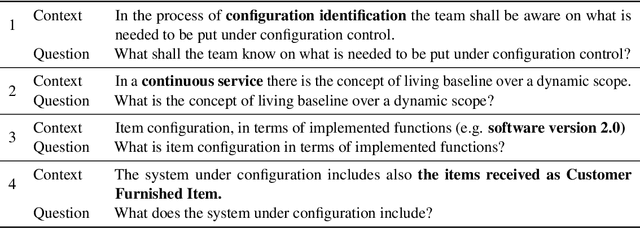

Abstract: Quality management and assurance is key for space agencies to guarantee the success of space missions, which are high-risk and extremely costly. In this paper, we present a system to generate quizzes, a common resource to evaluate the effectiveness of training sessions, from documents about quality assurance procedures in the Space domain. Our system leverages state-of-the-art auto-regressive models like T5 and BART to generate questions, and a RoBERTa model to extract answers for such questions, thus verifying their suitability.

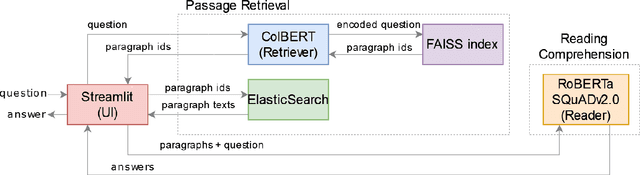

SpaceQA: Answering Questions about the Design of Space Missions and Space Craft Concepts

Oct 07, 2022

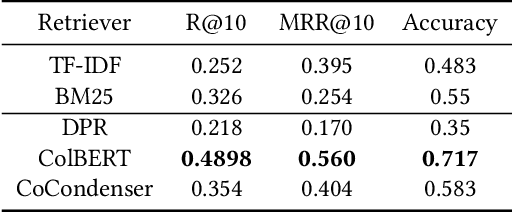

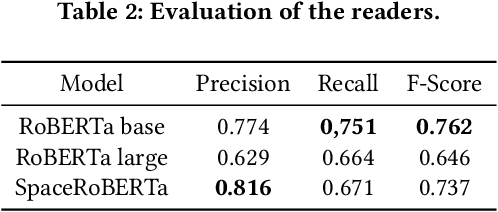

Abstract: We present SpaceQA, to the best of our knowledge the first open-domain QA system in Space mission design. SpaceQA is part of an initiative by the European Space Agency (ESA) to facilitate the access, sharing and reuse of information about Space mission design within the agency and with the public. We adopt a state-of-the-art architecture consisting of a dense retriever and a neural reader and opt for an approach based on transfer learning rather than fine-tuning due to the lack of domain-specific annotated data. Our evaluation on a test set produced by ESA is largely consistent with the results originally reported by the evaluated retrievers and confirms the need for fine-tuning for reading comprehension. As of writing this paper, ESA is piloting SpaceQA internally.

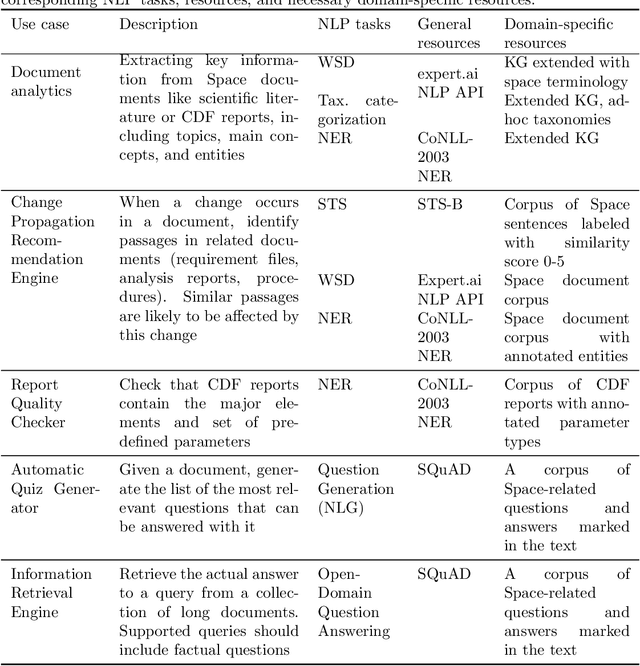

Artificial Intelligence and Natural Language Processing and Understanding in Space: Four ESA Case Studies

Oct 07, 2022

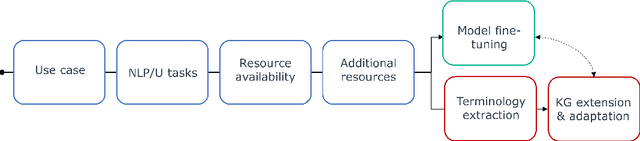

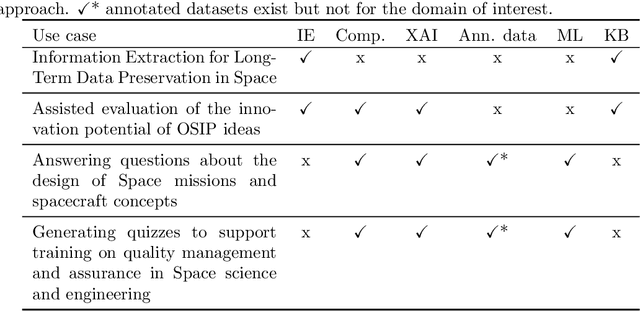

Abstract: The European Space Agency is well known as a powerful force for scientific discovery in numerous areas related to Space. The amount and depth of the knowledge produced throughout the different missions carried out by ESA, and their contribution to scientific progress, are enormous, involving large collections of documents like scientific publications, feasibility studies, technical reports, and quality management procedures, among many others. Through initiatives like the Open Space Innovation Platform, ESA also acts as a hub for new ideas coming from the wider community across different challenges, contributing to a virtuous circle of scientific discovery and innovation. Handling such a wealth of information, a large part of which is unstructured text, is a colossal task that goes beyond human capabilities, hence requiring automation. In this paper, we present a methodological framework based on artificial intelligence and natural language processing and understanding to automatically extract information from Space documents and generate value from it. We illustrate this framework through several case studies implemented across different functional areas of ESA, including Mission Design, Quality Assurance, Long-Term Data Preservation, and the Open Space Innovation Platform. In doing so, we demonstrate the value of these technologies in several tasks ranging from effortlessly searching and recommending Space information to automatically determining how innovative an idea can be, answering questions about Space, and generating quizzes regarding quality procedures. Each of these accomplishments represents a step forward in the application of increasingly intelligent AI systems in Space, from structuring and facilitating information access to intelligent systems capable of understanding and reasoning with such information.
