Jorge Azorin-Lopez

Marker-Based Extrinsic Calibration Method for Accurate Multi-Camera 3D Reconstruction

May 05, 2025

Abstract:Accurate 3D reconstruction using multi-camera RGB-D systems critically depends on precise extrinsic calibration to achieve proper alignment between captured views. In this paper, we introduce an iterative extrinsic calibration method that leverages the geometric constraints provided by a three-dimensional marker to significantly improve calibration accuracy. Our proposed approach systematically segments and refines marker planes through clustering, regression analysis, and iterative reassignment techniques, ensuring robust geometric correspondence across camera views. We validate our method comprehensively in both controlled environments and practical real-world settings within the Tech4Diet project, aimed at modeling the physical progression of patients undergoing nutritional treatments. Experimental results demonstrate substantial reductions in alignment errors, facilitating accurate and reliable 3D reconstructions.

When Deep Learning Meets Data Alignment: A Review on Deep Registration Networks

Mar 06, 2020

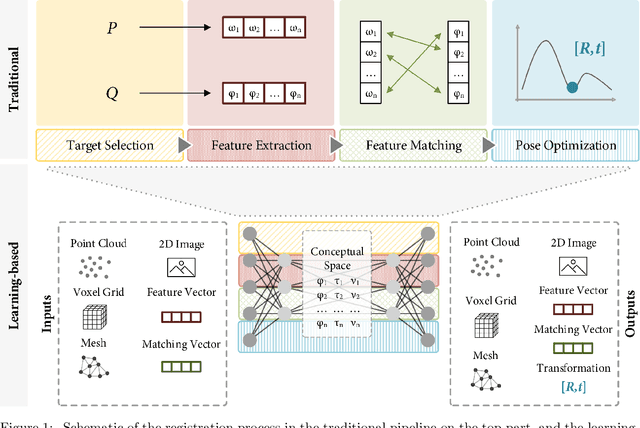

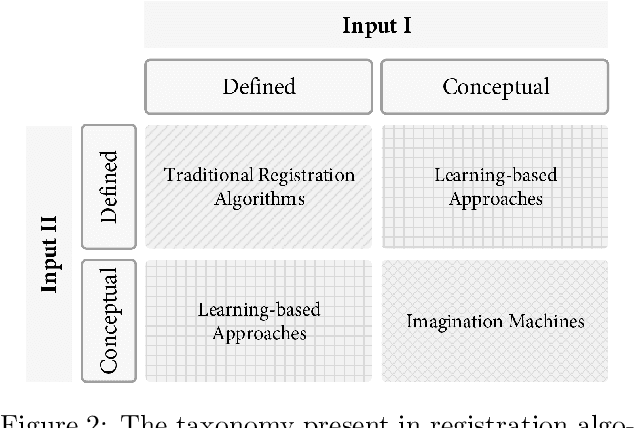

Abstract:Registration is the process that computes the transformation that aligns sets of data. Commonly, a registration process can be divided into four main steps: target selection, feature extraction, feature matching, and transform computation for the alignment. The accuracy of the result depends on multiple factors; the most significant are the quantity of input data, the presence of noise, outliers and occlusions, the quality of the extracted features, real-time requirements, and the type of transformation, especially those defined by many parameters, such as non-rigid deformations. Recent advances in machine learning could be a turning point for these issues, particularly with the development of deep learning (DL) techniques, which are helping to improve multiple computer vision problems through an abstract understanding of the input data. In this paper, a review of deep learning-based registration methods is presented. We classify the different papers using a framework derived from the traditional registration pipeline in order to analyse the strengths of the new learning-based proposals. Deep Registration Networks (DRNs) try to solve the alignment task either by replacing part of the traditional pipeline with a network or by fully solving the registration problem. The main conclusions are: 1) learning-based registration techniques cannot always be clearly classified within the traditional pipeline; 2) these approaches allow more complex inputs, such as conceptual models, as well as traditional 3D datasets; 3) in spite of the generality of learning, the current proposals are still ad hoc solutions; and 4) this is a young topic that still requires a large effort to reach general solutions able to cope with the problems that affect traditional approaches.
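To make the last step of the traditional pipeline (transform computation) concrete, here is a minimal sketch for the simplest case: a rigid transform in 2D, estimated in closed form from points that are already matched by index. The 2D restriction and the name `fit_rigid_2d` are assumptions made for illustration; the review itself covers far more general transformations, including non-rigid ones.

```python
import math


def fit_rigid_2d(source, target):
    """Least-squares rotation angle and translation mapping source onto target.

    source[i] is assumed to correspond to target[i].
    """
    n = len(source)
    sx = sum(p[0] for p in source) / n
    sy = sum(p[1] for p in source) / n
    tx = sum(q[0] for q in target) / n
    ty = sum(q[1] for q in target) / n

    dot = 0.0    # sum of dot products of the centred pairs
    cross = 0.0  # sum of 2D cross products of the centred pairs
    for (px, py), (qx, qy) in zip(source, target):
        ax, ay = px - sx, py - sy
        bx, by = qx - tx, qy - ty
        dot += ax * bx + ay * by
        cross += ax * by - ay * bx

    theta = math.atan2(cross, dot)
    c, s = math.cos(theta), math.sin(theta)
    # Translation sends the rotated source centroid onto the target centroid.
    translation = (tx - (c * sx - s * sy), ty - (s * sx + c * sy))
    return theta, translation


# Synthetic check: rotate by 90 degrees, then shift by (2, -1).
source = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0)]
target = [(-y + 2.0, x - 1.0) for x, y in source]
theta, translation = fit_rigid_2d(source, target)
```

The earlier pipeline steps (feature extraction and matching) are what produce the index-aligned pairs this function takes for granted.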

3D non-rigid registration using color: Color Coherent Point Drift

Feb 05, 2018

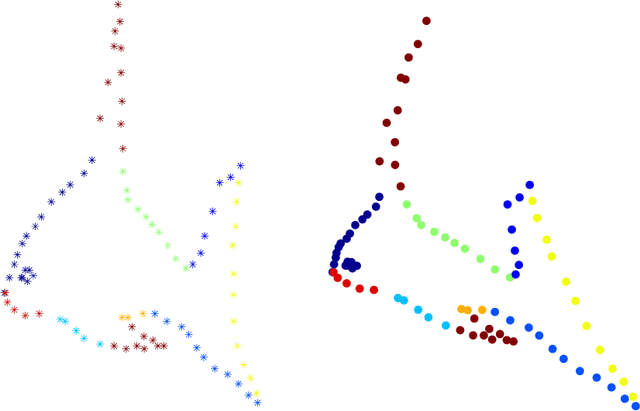

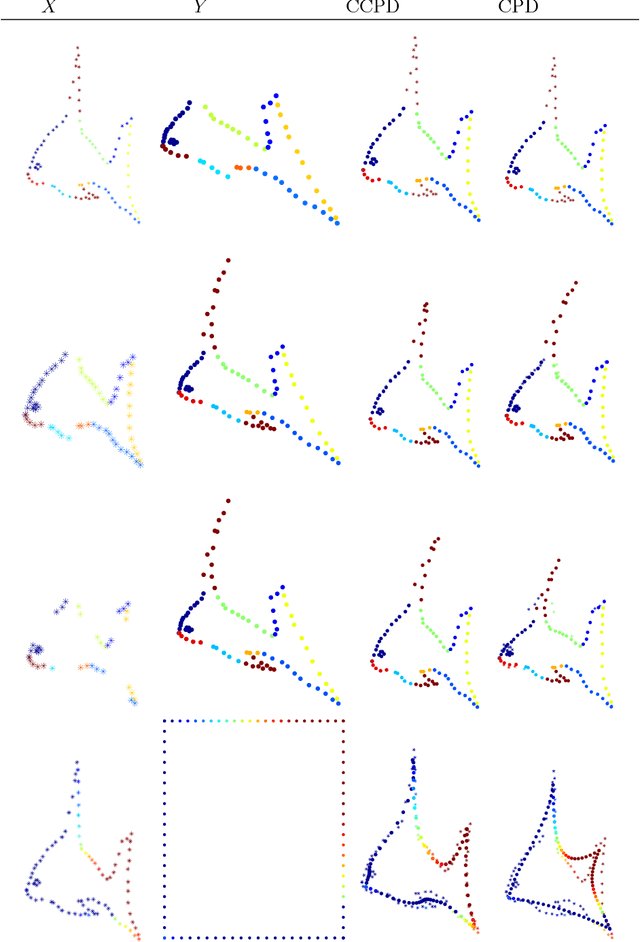

Abstract:Research into object deformations using computer vision techniques has been under intense study in recent years. A widely used technique is 3D non-rigid registration to estimate the transformation between two instances of a deforming structure. Despite many previous developments on this topic, it remains a challenging problem. In this paper we propose a novel approach to non-rigid registration combining two data spaces in order to robustly calculate the correspondences and transformation between two data sets. In particular, we use point color as well as 3D location, as these are the common outputs of RGB-D cameras. We propose the Color Coherent Point Drift (CCPD) algorithm, an extension of the CPD method [1]. Evaluation is performed using synthetic and real data. The synthetic data includes simple shapes that allow evaluation of the effect of noise, outliers and missing data. Moreover, an evaluation of realistic figures obtained using Blensor is carried out. Real data acquired using a general-purpose Primesense Carmine sensor is used to validate the CCPD for real shapes. For all tests, the proposed method is compared to the original CPD, showing better registration accuracy in most cases.
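The idea of combining color with 3D location when searching for correspondences can be sketched with a joint distance. This is not the CCPD algorithm, which builds on the probabilistic correspondences of CPD; it is only a hard nearest-neighbour illustration. The tuple layout `(x, y, z, r, g, b)`, the `color_weight` parameter and the function names are assumptions.

```python
import math


def joint_distance(p, q, color_weight=1.0):
    """Distance between two points given as (x, y, z, r, g, b).

    Color channels are assumed to lie in [0, 1]; color_weight scales the
    squared color difference relative to the squared spatial difference.
    """
    d_xyz = sum((a - b) ** 2 for a, b in zip(p[:3], q[:3]))
    d_rgb = sum((a - b) ** 2 for a, b in zip(p[3:], q[3:]))
    return math.sqrt(d_xyz + color_weight * d_rgb)


def nearest_match(point, candidates, color_weight=1.0):
    """Index of the candidate closest to point under the joint distance."""
    return min(
        range(len(candidates)),
        key=lambda i: joint_distance(point, candidates[i], color_weight),
    )


red_point = (0.0, 0.0, 0.0, 1.0, 0.0, 0.0)
candidates = [
    (0.1, 0.0, 0.0, 0.0, 0.0, 1.0),  # spatially closest, but blue
    (0.2, 0.0, 0.0, 1.0, 0.0, 0.0),  # slightly farther, same red color
]
geometry_only = nearest_match(red_point, candidates, color_weight=0.0)
with_color = nearest_match(red_point, candidates, color_weight=1.0)
```

With `color_weight=0.0` the match is driven by geometry alone and picks the blue candidate; with color included, the red candidate wins despite being slightly farther away.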

μ-MAR: Multiplane 3D Marker based Registration for Depth-sensing Cameras

Aug 04, 2017

Abstract:Many applications, including object reconstruction, robot guidance, and scene mapping, require the registration of multiple views of a scene to generate a complete geometric and appearance model of it. In real situations, transformations between views are unknown and it is necessary to apply expert inference to estimate them. In the last few years, the emergence of low-cost depth-sensing cameras has strengthened the research on this topic, motivating a plethora of new applications. Although they have enough resolution and accuracy for many applications, some situations may not be solved with general state-of-the-art registration methods due to the signal-to-noise ratio (SNR) and the resolution of the data provided. The problem of working with low-SNR data may, in general terms, appear in any 3D system, so it is necessary to propose novel solutions in this respect. In this paper, we propose a method, μ-MAR, able to perform both coarse and fine registration of sets of 3D points provided by low-cost depth-sensing cameras into a common coordinate system, although it is not restricted to these sensors. The method is able to overcome the noisy data problem by using a model-based solution of multiplane registration. Specifically, it iteratively registers 3D markers composed of multiple planes extracted from points of multiple views of the scene. As the markers and the object of interest are static in the scenario, the transformations obtained for the markers are applied to the object in order to reconstruct it. Experiments have been performed using synthetic and real data. The synthetic data allows a qualitative and quantitative evaluation by means of visual inspection and Hausdorff distance, respectively. The real data experiments show the performance of the proposal using data acquired by a Primesense Carmine RGB-D sensor. The method has been compared to several state-of-the-art methods. The ...
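The Hausdorff distance used for the quantitative evaluation can be computed by brute force for small point sets. The sketch below is a generic implementation of the standard definition, not the evaluation code used in the paper, and the function names are chosen for illustration.

```python
import math


def directed_hausdorff(a, b):
    """Largest distance from any point in a to its nearest point in b."""
    return max(min(math.dist(p, q) for q in b) for p in a)


def hausdorff(a, b):
    """Symmetric Hausdorff distance between two point sets."""
    return max(directed_hausdorff(a, b), directed_hausdorff(b, a))


reference = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
registered = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (1.0, 0.0, 2.0)]
error = hausdorff(reference, registered)
```

This version compares every pair of points, so its cost grows with the product of the two set sizes; larger clouds would normally use a spatial index for the nearest-neighbour queries.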

Three-dimensional planar model estimation using multi-constraint knowledge based on k-means and RANSAC

Aug 03, 2017

Abstract:Plane model extraction from three-dimensional point clouds is a necessary step in many different applications such as planar object reconstruction, indoor mapping and indoor localization. Different RANdom SAmple Consensus (RANSAC)-based methods have been proposed for this purpose in recent years. In this study, we propose a novel RANSAC-based method called Multiplane Model Estimation, which can estimate multiple plane models simultaneously from a noisy point cloud using the knowledge extracted from a scene (or an object) in order to reconstruct it accurately. This method comprises two steps: first, it clusters the data into planar faces that preserve some constraints defined by knowledge related to the object (e.g., the angles between faces); and second, the models of the planes are estimated based on these data using a novel multi-constraint RANSAC. We performed experiments in the clustering and RANSAC stages, which showed that the proposed method performed better than state-of-the-art methods.
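For context, the baseline that this method extends can be sketched as plain single-plane RANSAC. This is not the multi-constraint variant proposed in the paper, since it ignores the angle constraints between faces and fits only one plane. The threshold, iteration count, seed and function names are illustrative assumptions.

```python
import math
import random


def plane_from_points(p1, p2, p3):
    """Plane (unit normal, offset d) through three points, or None if collinear."""
    u = [p2[i] - p1[i] for i in range(3)]
    v = [p3[i] - p1[i] for i in range(3)]
    n = (
        u[1] * v[2] - u[2] * v[1],
        u[2] * v[0] - u[0] * v[2],
        u[0] * v[1] - u[1] * v[0],
    )
    norm = math.sqrt(sum(c * c for c in n))
    if norm < 1e-12:
        return None
    n = tuple(c / norm for c in n)
    d = -sum(n[i] * p1[i] for i in range(3))
    return n, d


def ransac_plane(points, threshold=0.01, iterations=200, seed=0):
    """Return the plane supported by the most inliers, plus those inliers."""
    rng = random.Random(seed)
    best_model, best_inliers = None, []
    for _ in range(iterations):
        model = plane_from_points(*rng.sample(points, 3))
        if model is None:
            continue  # degenerate sample, draw again
        n, d = model
        inliers = [
            p for p in points
            if abs(n[0] * p[0] + n[1] * p[1] + n[2] * p[2] + d) <= threshold
        ]
        if len(inliers) > len(best_inliers):
            best_model, best_inliers = model, inliers
    return best_model, best_inliers


# A 5 x 5 grid on the plane z = 0 plus three off-plane outliers.
cloud = [(float(x), float(y), 0.0) for x in range(5) for y in range(5)]
cloud += [(0.0, 0.0, 5.0), (1.0, 2.0, 3.0), (3.0, 1.0, -4.0)]
model, inliers = ransac_plane(cloud)
```

A multi-plane variant would typically run a fit like this per cluster and reject candidate planes that violate the known geometry of the object.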
