Joost Wagenaar

Benchmarking AutoML algorithms on a collection of synthetic classification problems

Dec 14, 2022Abstract:Automated machine learning (AutoML) algorithms have grown in popularity due to their high performance and flexibility to adapt to different problems and data sets. With the increasing number of AutoML algorithms, deciding which would best suit a given problem becomes increasingly more work. Therefore, it is essential to use complex and challenging benchmarks which would be able to differentiate the AutoML algorithms from each other. This paper compares the performance of four different AutoML algorithms: Tree-based Pipeline Optimization Tool (TPOT), Auto-Sklearn, Auto-Sklearn 2, and H2O AutoML. We use the Diverse and Generative ML benchmark (DIGEN), a diverse set of synthetic datasets derived from generative functions designed to highlight the strengths and weaknesses of the performance of common machine learning algorithms. We confirm that AutoML can identify pipelines that perform well on all included datasets. Most AutoML algorithms performed similarly without much room for improvement; however, some were more consistent than others at finding high-performing solutions for some datasets.

Applying Autonomous Hybrid Agent-based Computing to Difficult Optimization Problems

Oct 24, 2022

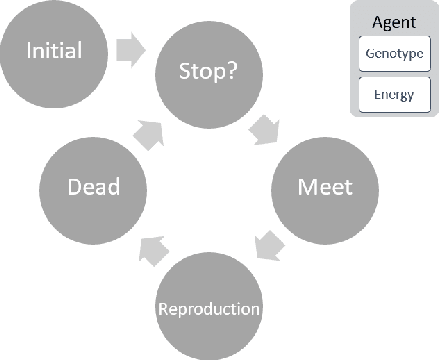

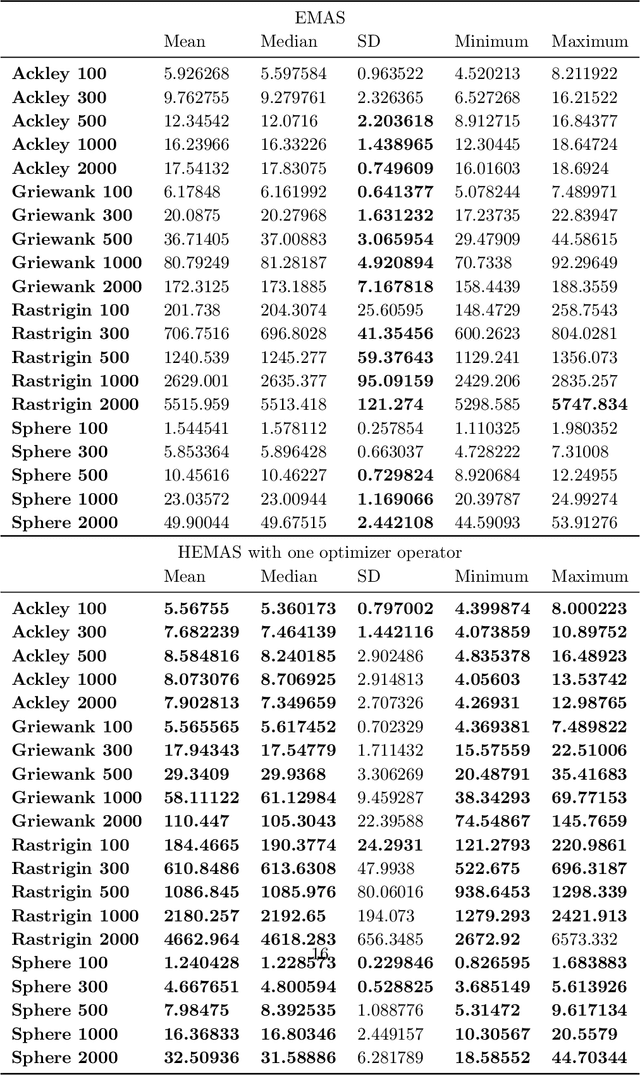

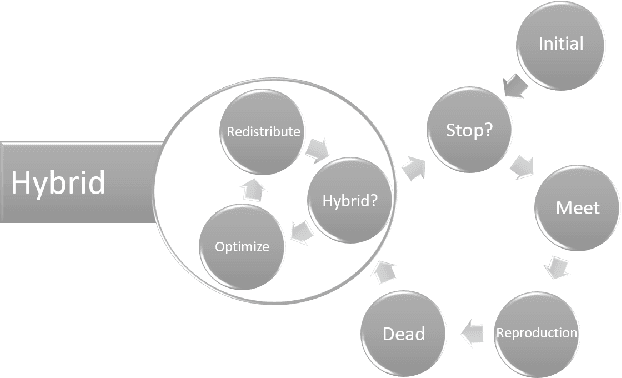

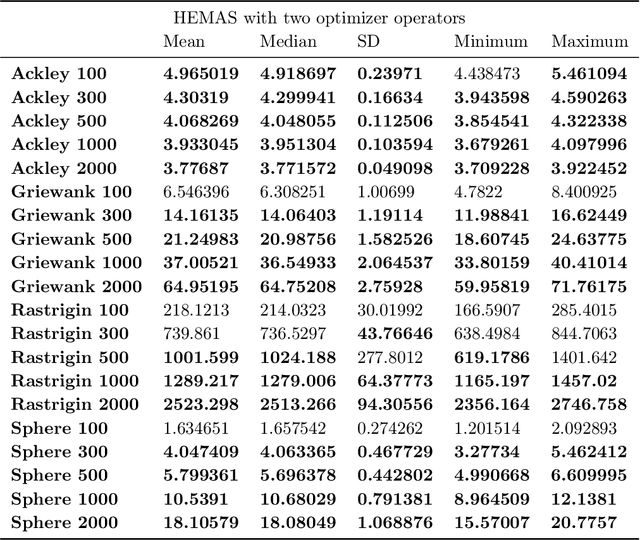

Abstract:Evolutionary multi-agent systems (EMASs) are very good at dealing with difficult, multi-dimensional problems, their efficacy was proven theoretically based on analysis of the relevant Markov-Chain based model. Now the research continues on introducing autonomous hybridization into EMAS. This paper focuses on a proposed hybrid version of the EMAS, and covers selection and introduction of a number of hybrid operators and defining rules for starting the hybrid steps of the main algorithm. Those hybrid steps leverage existing, well-known and proven to be efficient metaheuristics, and integrate their results into the main algorithm. The discussed modifications are evaluated based on a number of difficult continuous-optimization benchmarks.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge