Jonathan Waltman

Patch-Based Image Hallucination for Super Resolution with Detail Reconstruction from Similar Sample Images

Jun 03, 2018

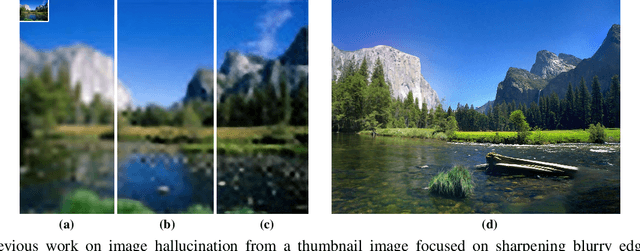

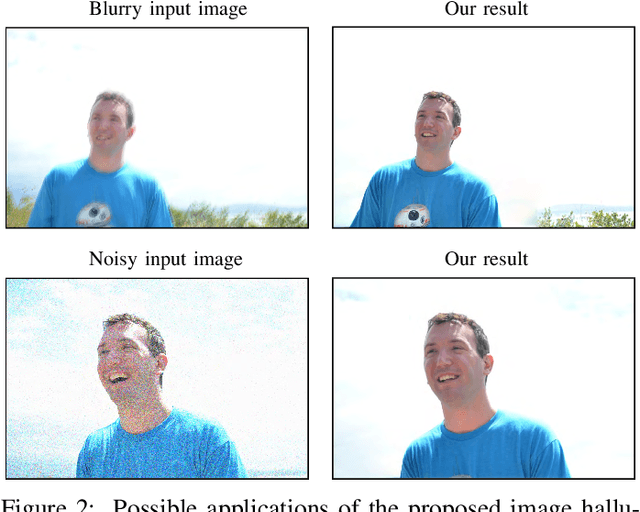

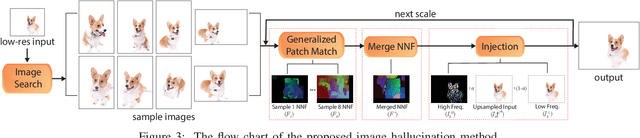

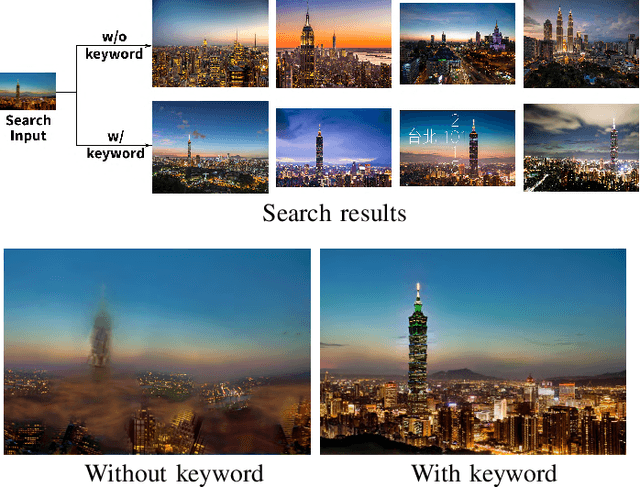

Abstract: Image hallucination and super-resolution have been studied for decades, and many approaches have been proposed to upsample low-resolution images using information from the images themselves, multiple example images, or large image databases. However, most of this work has focused exclusively on small magnification levels because the algorithms simply sharpen the blurry edges in the upsampled images; typically, no new detail is reconstructed in the final result. In this paper, we present a patch-based algorithm for image hallucination that, for the first time, properly synthesizes novel high-frequency detail. To do this, we pose the synthesis problem as a patch-based optimization that inserts coherent, high-frequency detail from contextually similar images of the same physical scene or subject, drawn from either a personal image collection or a large online database. The resulting image is visually plausible and contains coherent high-frequency information. We demonstrate the robustness of our algorithm by testing it on a large number of images and show that its performance is considerably superior to all state-of-the-art approaches, a result that a randomized user study verifies to be statistically significant.
