Jonathan P. Keating

The Persistence of Neural Collapse Despite Low-Rank Bias: An Analytic Perspective Through Unconstrained Features

Oct 30, 2024

Abstract: Modern deep neural networks have been observed to exhibit a simple structure in their final-layer features and weights, commonly referred to as neural collapse. This phenomenon has also been noted in layers beyond the final one, an extension known as deep neural collapse. Recent findings indicate that such a structure is generally not optimal in the deep unconstrained feature model, an approximation of an expressive network. This is attributed to a low-rank bias induced by regularization, which favors solutions of lower rank than those typically associated with deep neural collapse. In this work, we extend these observations to the cross-entropy loss and analyze how the low-rank bias influences various solutions. Additionally, we explore how this bias induces specific structures in the singular values of the weights at global optima. Furthermore, we examine the loss surface of these models and provide evidence that the frequent observation of deep neural collapse in practice, despite its suboptimality, may result from its higher degeneracy on the loss surface.

Unifying Low Dimensional Observations in Deep Learning Through the Deep Linear Unconstrained Feature Model

Apr 09, 2024

Abstract: Modern deep neural networks have achieved high performance across various tasks. Recently, researchers have noted occurrences of low-dimensional structure in the weights, Hessians, gradients, and feature vectors of these networks, spanning different datasets and architectures when trained to convergence. In this analysis, we demonstrate theoretically how these observations arise, and show how they can be unified within a generalized unconstrained feature model that can be treated analytically. Specifically, we consider a previously described structure called Neural Collapse, and its multi-layer counterpart, Deep Neural Collapse, which emerges when the network approaches global optima. This phenomenon explains the other observed low-dimensional behaviours on a layer-wise level, such as the bulk and outlier structure seen in Hessian spectra, and the alignment of gradient descent with the outlier eigenspace of the Hessian. Empirical results in both the deep linear unconstrained feature model and its non-linear equivalent support these predicted observations.

The Loss Surfaces of Neural Networks with General Activation Functions

Apr 16, 2020

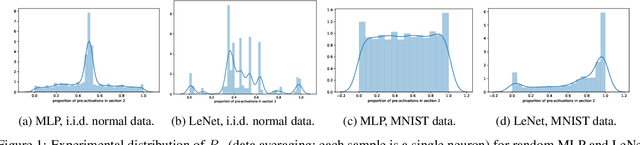

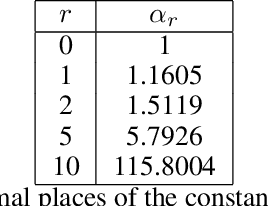

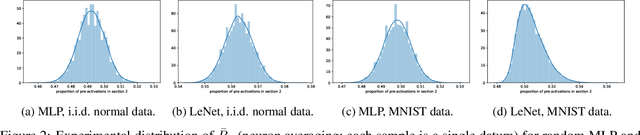

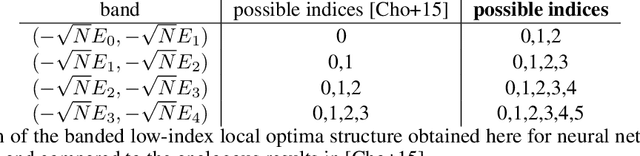

Abstract: We present results extending the foundational work of Choromanska et al. (2015) on the complexity of the loss surfaces of multi-layer neural networks. We remove the strict reliance on specifically ReLU activation functions and obtain broadly the same results for general activation functions. This is achieved with piecewise linear approximations to general activation functions, Kac-Rice calculations akin to those of Auffinger, Ben Arous and Černý (2013), Fyodorov (2004), Fyodorov and Williams (2007) and asymptotic analysis made possible by supersymmetric methods. Our results strengthen the case for the conclusions of Choromanska et al. (2015) and the calculations contain various novel details required to deal with certain perturbations to the classical spin-glass calculations.
