Jolina Li

Learning Zero-Shot Material States Segmentation, by Implanting Natural Image Patterns in Synthetic Data

Mar 14, 2024

Abstract: Visual understanding and segmentation of materials and their states is fundamental for understanding the physical world. The infinite textures, shapes, and often blurry boundaries formed by materials make this task particularly hard to generalize. Whether it's identifying wet regions of a surface, minerals in rocks, infected regions in plants, or pollution in water, each material state has its own unique form. For neural nets to learn general class-agnostic material segmentation, it is necessary to first collect and annotate data that capture this complexity. Collecting and manually annotating real-world images is limited by the cost and precision of manual labor. In contrast, synthetic CGI data is highly accurate and almost cost-free but fails to replicate the vast diversity of the material world. This work offers a method to bridge this crucial gap by implanting patterns extracted from real-world images in synthetic data. Hence, patterns automatically collected from natural images are used to map materials into synthetic scenes. This unsupervised approach allows the generated data to capture the vast complexity of the real world while maintaining the precision and scale of synthetic data. We also present the first general benchmark for class-agnostic material state segmentation. The benchmark contains a wide range of real-world images of material states, from cooking, food, rocks, construction, plants, and liquids, each in various states (wet/dry/stained/cooked/burned/worn/rusted/sediment/foam...). The annotation includes both partial similarity between regions with similar but not identical materials, and hard segmentation of only points of the exact same material state. We show that nets trained on MatSeg significantly outperform existing state-of-the-art methods on this task. The dataset, code, and trained model are available.

One-shot recognition of any material anywhere using contrastive learning with physics-based rendering

Dec 14, 2022

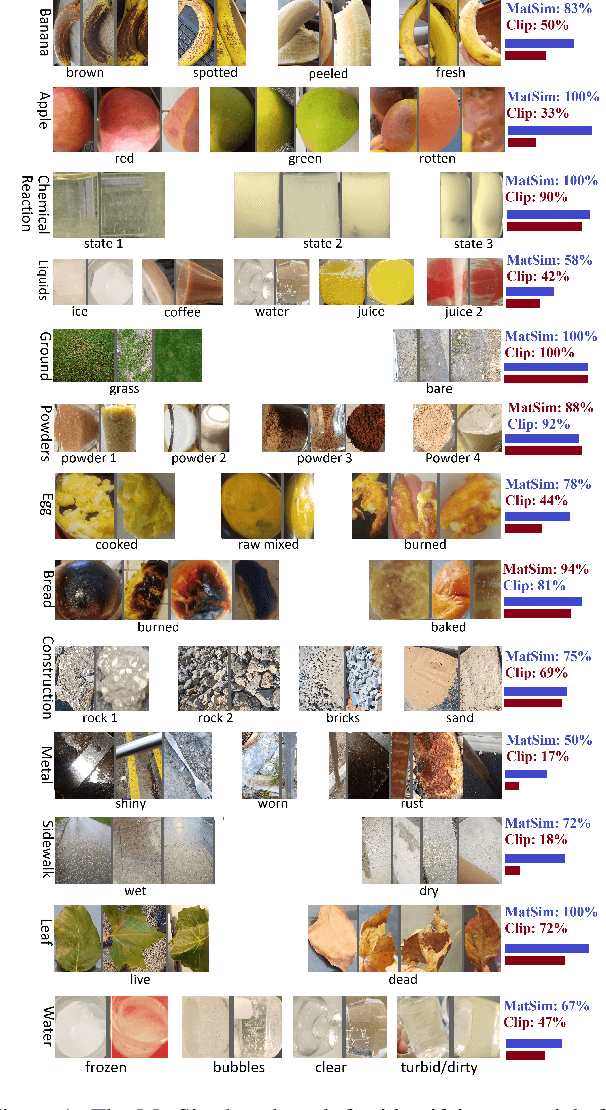

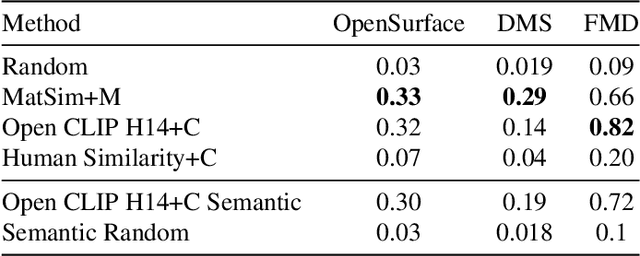

Abstract: We present MatSim: a synthetic dataset, a benchmark, and a method for computer-vision-based recognition of similarities and transitions between materials and textures, focusing on identifying any material under any conditions using one or a few examples (one-shot learning). The visual recognition of materials is essential to everything from examining food while cooking to inspecting agriculture, chemistry, and industrial products. In this work, we utilize giant repositories used by computer graphics artists to generate a new CGI dataset for material similarity. We use physics-based rendering (PBR) repositories for visual material simulation, assign these materials to random 3D objects, and render images with a vast range of backgrounds and illumination conditions (HDRI). We add a gradual transition between materials to support applications with a smooth transition between states (like gradually cooked food). We also render materials inside transparent containers to support beverage and chemistry lab use cases. We then train a contrastive learning network to generate a descriptor that identifies unfamiliar materials using a single image. We also present a new benchmark for few-shot material recognition that contains a wide range of real-world examples, including the state of a chemical reaction, rotten/fresh fruits, states of food, different types of construction materials, types of ground, and many other use cases involving material states, transitions, and subclasses. We show that a network trained on the MatSim synthetic dataset outperforms state-of-the-art models like CLIP on the benchmark, despite being tested on material classes that were not seen during training. The dataset, benchmark, code, and trained models are available online.
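The one-shot matching step described in the abstract above can be illustrated with a minimal sketch. It assumes that each image has already been mapped to a descriptor vector by some trained network and that descriptors are compared by cosine similarity; the abstract does not state the similarity measure, so that choice is an assumption. The function names `cosine_similarity` and `match_material`, and the three-dimensional descriptor values, are hypothetical and chosen only for illustration.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two descriptor vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def match_material(query, references):
    """Return the label of the reference descriptor most similar to the query.

    `references` maps a label to a single example descriptor (one shot per class).
    """
    return max(references, key=lambda label: cosine_similarity(query, references[label]))


# Hypothetical descriptors; a real system would obtain these from a trained network.
references = {
    "fresh": [0.9, 0.1, 0.2],
    "rotten": [0.1, 0.8, 0.5],
}
query = [0.8, 0.2, 0.1]

best = match_material(query, references)
print(best)
```

Because each class is represented by a single reference descriptor, adding a new material only requires one example image and no retraining, which is the property the one-shot setting relies on.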
