Jokin Labaien

Improving Transformers using Faithful Positional Encoding

May 16, 2024

Abstract: We propose a new positional encoding method for a neural network architecture called the Transformer. Unlike the standard sinusoidal positional encoding, our approach is based on solid mathematical grounds and has a guarantee of not losing information about the positional order of the input sequence. We show that the new encoding approach systematically improves the prediction performance in the time-series classification task.
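For reference, the standard sinusoidal positional encoding that this abstract contrasts against can be sketched as below. This is only the well-known baseline from the original Transformer formulation, not the proposed faithful encoding, whose construction is not given in the abstract. The function name `sinusoidal_positional_encoding` is illustrative.

```python
import numpy as np


def sinusoidal_positional_encoding(seq_len: int, d_model: int) -> np.ndarray:
    """Baseline encoding: PE[t, 2i] = sin(t / 10000^(2i/d)), PE[t, 2i+1] = cos(same angle)."""
    if d_model % 2 != 0:
        raise ValueError("d_model must be even for this sketch")
    positions = np.arange(seq_len)[:, None]        # shape (seq_len, 1)
    even_dims = np.arange(0, d_model, 2)[None, :]  # shape (1, d_model / 2)
    angles = positions / np.power(10000.0, even_dims / d_model)
    pe = np.zeros((seq_len, d_model))
    pe[:, 0::2] = np.sin(angles)  # even columns hold the sine components
    pe[:, 1::2] = np.cos(angles)  # odd columns hold the cosine components
    return pe
```

Each row is the vector added to the token embedding at that position; the wavelengths grow geometrically across the embedding dimensions.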

Diagnostic Spatio-temporal Transformer with Faithful Encoding

May 26, 2023

Abstract: This paper addresses the task of anomaly diagnosis when the underlying data generation process has a complex spatio-temporal (ST) dependency. The key technical challenge is to extract actionable insights from the dependency tensor characterizing high-order interactions among temporal and spatial indices. We formalize the problem as supervised dependency discovery, where the ST dependency is learned as a by-product of multivariate time-series classification. We show that the temporal positional encoding used in existing ST transformer work has a serious limitation in capturing higher frequencies (short time scales). We propose a new positional encoding with a theoretical guarantee, based on the discrete Fourier transform. We also propose a new ST dependency discovery framework, which can provide readily consumable diagnostic information in both spatial and temporal directions. Finally, we demonstrate the utility of the proposed model, DFStrans (Diagnostic Fourier-based Spatio-temporal Transformer), in a real industrial application of building elevator control.

DA-DGCEx: Ensuring Validity of Deep Guided Counterfactual Explanations With Distribution-Aware Autoencoder Loss

Apr 22, 2021

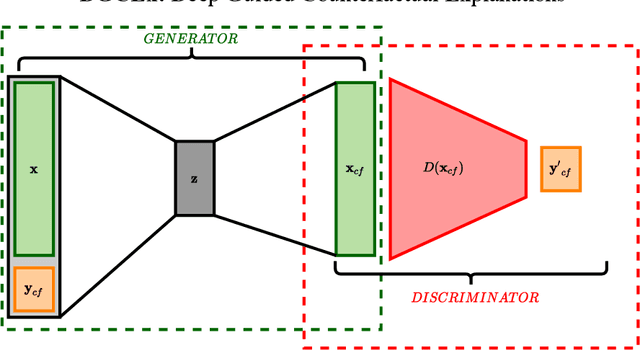

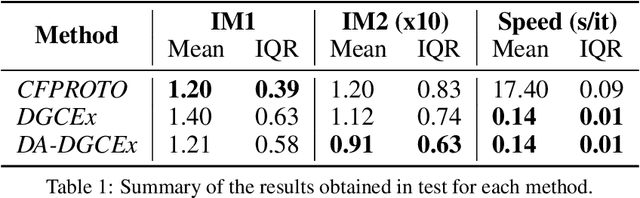

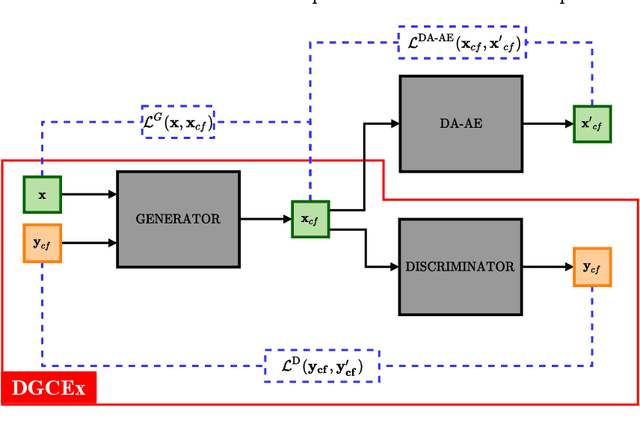

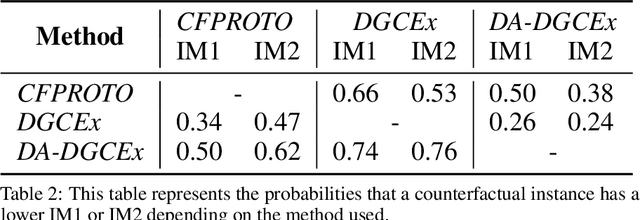

Abstract: Deep Learning has become a very valuable tool in different fields, and no one doubts the learning capacity of these models. Nevertheless, since Deep Learning models are often seen as black boxes due to their lack of interpretability, there is a general mistrust in their decision-making process. To find a balance between effectiveness and interpretability, Explainable Artificial Intelligence (XAI) has been gaining popularity in recent years, and some of the methods within this area are used to generate counterfactual explanations. The process of generating these explanations generally consists of solving an optimization problem for each input to be explained, which is infeasible when real-time feedback is needed. To speed up this process, some methods have made use of autoencoders to generate instant counterfactual explanations. Recently, a method called Deep Guided Counterfactual Explanations (DGCEx) has been proposed, which trains an autoencoder attached to a classification model in order to generate straightforward counterfactual explanations. However, this method does not ensure that the generated counterfactual instances are close to the data manifold, so unrealistic counterfactual instances may be generated. To overcome this issue, this paper presents Distribution Aware Deep Guided Counterfactual Explanations (DA-DGCEx), which adds a term to the DGCEx cost function that penalizes out-of-distribution counterfactual instances.
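The idea of adding a penalty for out-of-distribution counterfactuals can be illustrated with a minimal sketch. The abstract does not give the actual DGCEx or DA-DGCEx cost function, so everything here is an assumption: the three terms, the weights `alpha` and `beta`, and the use of an autoencoder's reconstruction error as a proxy for distance from the data manifold are hypothetical choices for illustration only.

```python
import numpy as np


def counterfactual_loss(probs_cf, target_class, x, x_cf, x_cf_recon,
                        alpha=1.0, beta=1.0):
    """Hypothetical counterfactual loss with a distribution-aware term.

    probs_cf:   classifier probabilities for the counterfactual x_cf
    x, x_cf:    original input and generated counterfactual
    x_cf_recon: reconstruction of x_cf by an autoencoder trained on real data
    """
    eps = 1e-12  # avoids log(0)
    # Push the classifier output toward the desired target class.
    class_term = -np.log(probs_cf[target_class] + eps)
    # Keep the counterfactual close to the original input (assumed L1 distance).
    proximity_term = np.mean(np.abs(x_cf - x))
    # Assumed distribution-aware term: a large reconstruction error suggests
    # that x_cf lies far from the data the autoencoder was trained on.
    distribution_term = np.mean((x_cf - x_cf_recon) ** 2)
    return class_term + alpha * proximity_term + beta * distribution_term
```

With `beta=0` the sketch reduces to a loss without the distribution-aware penalty, which makes it easy to compare the two settings on the same counterfactual.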
