John-Dylan Haynes

Synthesis and Perceptual Scaling of High Resolution Natural Images Using Stable Diffusion

Oct 16, 2024

Abstract: Natural scenes are of key interest for visual perception. Previous work on natural scenes has frequently focused on collections of discrete images with considerable physical differences from stimulus to stimulus. For many purposes it would, however, be desirable to have sets of natural images that vary smoothly along a continuum (for example in order to measure quantitative properties such as thresholds or precisions). This problem has typically been addressed by morphing a source into a target image. However, this approach yields transitions between images that primarily follow their low-level physical features and that can be semantically unclear or ambiguous. Here, in contrast, we used a different approach (Stable Diffusion XL) to synthesise a custom stimulus set of photorealistic images that are characterized by gradual transitions where each image is a clearly interpretable but unique exemplar from the same category. We developed natural scene stimulus sets from six categories with 18 objects each. For each object we generated 10 graded variants that are ordered along a perceptual continuum. We validated the image set psychophysically in a large sample of participants, ensuring that stimuli for each exemplar have varying levels of perceptual confusability. This image set is of interest for studies on visual perception, attention and short- and long-term memory.

Uncovering convolutional neural network decisions for diagnosing multiple sclerosis on conventional MRI using layer-wise relevance propagation

Apr 18, 2019

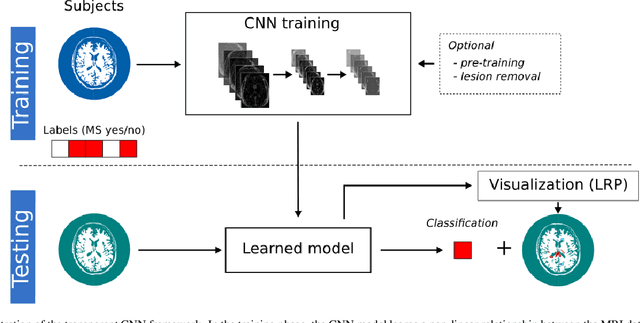

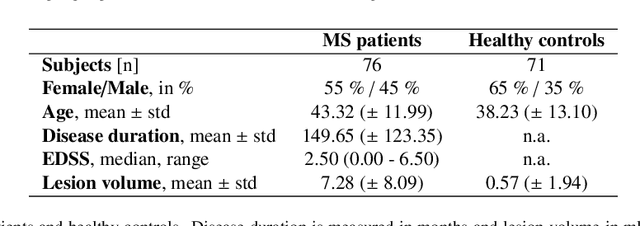

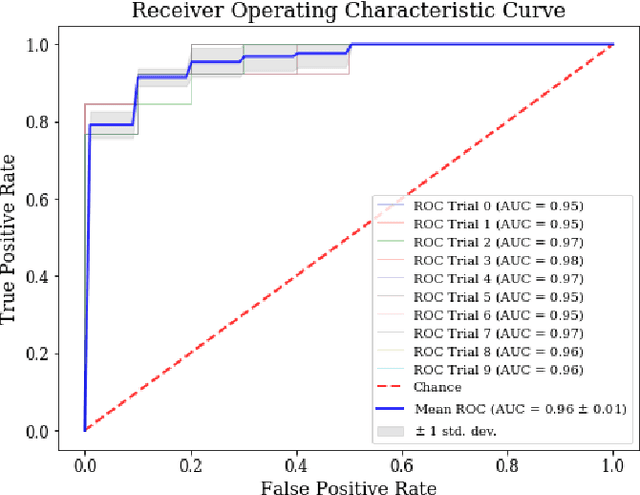

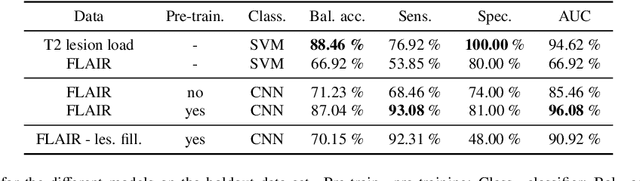

Abstract: Machine learning-based imaging diagnostics has recently reached or even surpassed the level of clinical experts in several clinical domains. However, classification decisions of a trained machine learning system are typically non-transparent, a major hindrance for clinical integration, error tracking or knowledge discovery. In this study, we present a transparent deep learning framework relying on convolutional neural networks (CNNs) and layer-wise relevance propagation (LRP) for diagnosing multiple sclerosis (MS). MS is commonly diagnosed utilizing a combination of clinical presentation and conventional magnetic resonance imaging (MRI), specifically the occurrence and presentation of white matter lesions in T2-weighted images. We hypothesized that using LRP in a naive predictive model would enable us to uncover relevant image features that a trained CNN uses for decision-making. Since imaging markers in MS are well established, this would enable us to validate the respective CNN model. First, we pre-trained a CNN on MRI data from the Alzheimer's Disease Neuroimaging Initiative (n = 921), afterwards specializing the CNN to discriminate between MS patients and healthy controls (n = 147). Using LRP, we then produced a heatmap for each subject in the holdout set depicting the voxel-wise relevance for a particular classification decision. The resulting CNN model achieved a balanced accuracy of 87.04% and an area under the receiver operating characteristic curve of 96.08%. The subsequent LRP visualization revealed that the CNN model indeed focuses on individual lesions, but also incorporates additional information such as lesion location, non-lesional white matter or gray matter areas such as the thalamus, which are established conventional and advanced MRI markers in MS. We conclude that LRP and the proposed framework have the capability to make diagnostic decisions of...
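
For readers unfamiliar with the two metrics reported in this abstract, the following is a minimal sketch of how balanced accuracy and the area under the ROC curve can be computed for a binary classifier. It is not the authors' evaluation code; the function names, the toy labels and scores, and the 0.5 decision threshold are all assumptions made for illustration.

```python
def balanced_accuracy(y_true, y_pred):
    """Mean of sensitivity and specificity for binary labels (1 = patient, 0 = control)."""
    pos = [p for t, p in zip(y_true, y_pred) if t == 1]
    neg = [p for t, p in zip(y_true, y_pred) if t == 0]
    sensitivity = sum(p == 1 for p in pos) / len(pos)
    specificity = sum(p == 0 for p in neg) / len(neg)
    return 0.5 * (sensitivity + specificity)


def roc_auc(y_true, scores):
    """AUC as the probability that a random positive outscores a random negative.

    Ties count as half a win (the Mann-Whitney formulation of the AUC).
    """
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


# Hypothetical toy data: two patients and two controls with classifier scores.
y_true = [1, 1, 0, 0]
scores = [0.9, 0.4, 0.5, 0.1]
y_pred = [1 if s >= 0.5 else 0 for s in scores]  # assumed threshold of 0.5

bal_acc = balanced_accuracy(y_true, y_pred)
auc = roc_auc(y_true, scores)
```

Balanced accuracy depends on the chosen decision threshold, whereas the AUC summarizes ranking quality over all thresholds, which is one reason the two numbers in an evaluation can differ noticeably.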

Visualizing Convolutional Networks for MRI-based Diagnosis of Alzheimer's Disease

Aug 08, 2018

Abstract: Visualizing and interpreting convolutional neural networks (CNNs) is an important task to increase trust in automatic medical decision making systems. In this study, we train a 3D CNN to detect Alzheimer's disease based on structural MRI scans of the brain. Then, we apply four different gradient-based and occlusion-based visualization methods that explain the network's classification decisions by highlighting relevant areas in the input image. We compare the methods qualitatively and quantitatively. We find that all four methods focus on brain regions known to be involved in Alzheimer's disease, such as the inferior and middle temporal gyrus. While the occlusion-based methods focus more on specific regions, the gradient-based methods pick up distributed relevance patterns. Additionally, we find that the distribution of relevance varies across patients, with some having a stronger focus on the temporal lobe, whereas for others more cortical areas are relevant. In summary, we show that applying different visualization methods is important to understand the decisions of a CNN, a step that is crucial to increase clinical impact and trust in computer-based decision support systems.
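
The general idea behind occlusion-based visualization can be sketched as follows: cover one patch of the input volume at a time and record how much the classifier's score drops. This is a simplified illustration, not the implementation used in the paper. The function `occlusion_map`, its `patch` and `fill` parameters, and the `toy_predict` stand-in for a trained 3D CNN are all hypothetical.

```python
import numpy as np


def occlusion_map(volume, predict, patch=4, fill=0.0):
    """Assign each voxel the score drop caused by occluding the patch that contains it."""
    volume = np.asarray(volume, dtype=float)
    baseline = predict(volume)
    relevance = np.zeros_like(volume)
    nx, ny, nz = volume.shape
    for x in range(0, nx, patch):
        for y in range(0, ny, patch):
            for z in range(0, nz, patch):
                occluded = volume.copy()
                occluded[x:x + patch, y:y + patch, z:z + patch] = fill
                # A large positive drop means the patch mattered for the decision.
                drop = baseline - predict(occluded)
                relevance[x:x + patch, y:y + patch, z:z + patch] = drop
    return relevance


def toy_predict(vol):
    """Hypothetical stand-in for a trained model: score is the mean of one corner region."""
    return float(vol[0:4, 0:4, 0:4].mean())


vol = np.ones((8, 8, 8))
rel = occlusion_map(vol, toy_predict, patch=4)
```

In this toy setup only the corner region that `toy_predict` reads receives non-zero relevance, which mirrors the abstract's observation that occlusion-based maps tend to concentrate on specific regions. With a real network, `predict` would return the class probability for the scan, and the number of forward passes grows with the number of patches.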
