Johannes Langer

The Dual Role of Abstracting over the Irrelevant in Symbolic Explanations: Cognitive Effort vs. Understanding

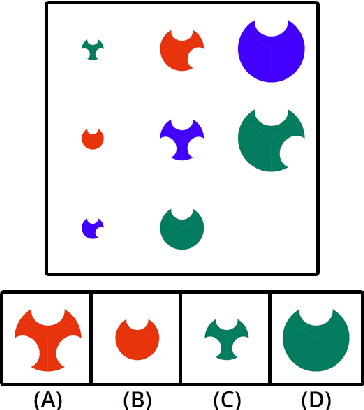

Feb 03, 2026Abstract:Explanations are central to human cognition, yet AI systems often produce outputs that are difficult to understand. While symbolic AI offers a transparent foundation for interpretability, raw logical traces often impose a high extraneous cognitive load. We investigate how formal abstractions, specifically removal and clustering, impact human reasoning performance and cognitive effort. Utilizing Answer Set Programming (ASP) as a formal framework, we define a notion of irrelevant details to be abstracted over to obtain simplified explanations. Our cognitive experiments, in which participants classified stimuli across domains with explanations derived from an answer set program, show that clustering details significantly improve participants' understanding, while removal of details significantly reduce cognitive effort, supporting the hypothesis that abstraction enhances human-centered symbolic explanations.

Can humans teach machines to code?

Apr 30, 2024

Abstract:The goal of inductive program synthesis is for a machine to automatically generate a program from user-supplied examples of the desired behaviour of the program. A key underlying assumption is that humans can provide examples of sufficient quality to teach a concept to a machine. However, as far as we are aware, this assumption lacks both empirical and theoretical support. To address this limitation, we explore the question `Can humans teach machines to code?'. To answer this question, we conduct a study where we ask humans to generate examples for six programming tasks, such as finding the maximum element of a list. We compare the performance of a program synthesis system trained on (i) human-provided examples, (ii) randomly sampled examples, and (iii) expert-provided examples. Our results show that, on most of the tasks, non-expert participants did not provide sufficient examples for a program synthesis system to learn an accurate program. Our results also show that non-experts need to provide more examples than both randomly sampled and expert-provided examples.

Explanatory machine learning for sequential human teaching

May 20, 2022

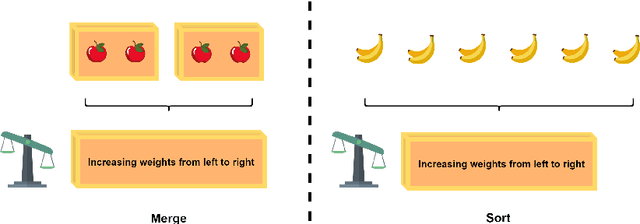

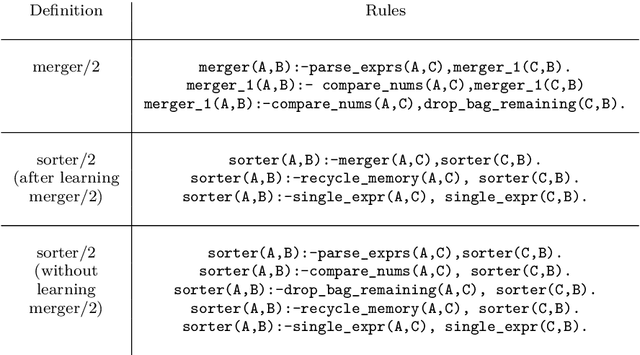

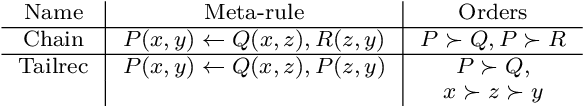

Abstract:The topic of comprehensibility of machine-learned theories has recently drawn increasing attention. Inductive Logic Programming (ILP) uses logic programming to derive logic theories from small data based on abduction and induction techniques. Learned theories are represented in the form of rules as declarative descriptions of obtained knowledge. In earlier work, the authors provided the first evidence of a measurable increase in human comprehension based on machine-learned logic rules for simple classification tasks. In a later study, it was found that the presentation of machine-learned explanations to humans can produce both beneficial and harmful effects in the context of game learning. We continue our investigation of comprehensibility by examining the effects of the ordering of concept presentations on human comprehension. In this work, we examine the explanatory effects of curriculum order and the presence of machine-learned explanations for sequential problem-solving. We show that 1) there exist tasks A and B such that learning A before B has a better human comprehension with respect to learning B before A and 2) there exist tasks A and B such that the presence of explanations when learning A contributes to improved human comprehension when subsequently learning B. We propose a framework for the effects of sequential teaching on comprehension based on an existing definition of comprehensibility and provide evidence for support from data collected in human trials. Empirical results show that sequential teaching of concepts with increasing complexity a) has a beneficial effect on human comprehension and b) leads to human re-discovery of divide-and-conquer problem-solving strategies, and c) studying machine-learned explanations allows adaptations of human problem-solving strategy with better performance.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge