Joe Hays

Collision-Free Inverse Kinematics Through QP Optimization (iKinQP)

Aug 29, 2023

Abstract:Robotic manipulators are often designed with more actuated degrees-of-freedom than required to fully control an end effector's position and orientation. These "redundant" manipulators can allow infinite joint configurations that satisfy a particular task-space position and orientation, providing more possibilities for the manipulator to traverse a smooth collision-free trajectory. However, finding such a trajectory is non-trivial because the inverse kinematics for redundant manipulators cannot typically be solved analytically. Many strategies have been developed to tackle this problem, including the Jacobian pseudo-inverse method, rapidly-exploring random tree (RRT) motion planning, and quadratic programming (QP) based methods. Here, we present a flexible inverse kinematics-based QP strategy (iKinQP). Because it is independent of robot dynamics, the algorithm is relatively lightweight and able to run in real-time in step with torque control. Collisions are defined as kinematic trees of elementary geometries, making the algorithm agnostic to the method used to determine what collisions are in the environment. Collisions are treated as hard constraints, which guarantees the generation of collision-free trajectories. Trajectory smoothness is accomplished through the QP optimization. Our algorithm was evaluated for computational efficiency, smoothness, and its ability to provide trackable trajectories. It was shown that iKinQP is capable of providing smooth, collision-free trajectories at real-time rates.
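To make the QP view of redundant inverse kinematics concrete, the following is a minimal sketch and not the iKinQP formulation itself. It assumes a hypothetical planar three-link arm (three joints, two task-space coordinates, hence redundant) and solves the unconstrained damped least-squares problem min ||J dq - e||^2 + damping * ||dq||^2 in closed form at each step. The link lengths, function names, and damping value are illustrative assumptions, and the collision constraints described in the abstract are omitted.

```python
import numpy as np

# Hypothetical planar 3-link arm; link lengths are illustrative assumptions.
LINK_LENGTHS = np.array([1.0, 1.0, 1.0])


def forward_kinematics(q):
    """Return the end-effector (x, y) position for joint angles q."""
    angles = np.cumsum(q)  # absolute orientation of each link
    return np.array([
        np.sum(LINK_LENGTHS * np.cos(angles)),
        np.sum(LINK_LENGTHS * np.sin(angles)),
    ])


def jacobian(q):
    """Return the 2x3 position Jacobian of the planar arm."""
    angles = np.cumsum(q)
    J = np.zeros((2, len(q)))
    for i in range(len(q)):
        # Joint i rotates every link from i onward.
        J[0, i] = -np.sum(LINK_LENGTHS[i:] * np.sin(angles[i:]))
        J[1, i] = np.sum(LINK_LENGTHS[i:] * np.cos(angles[i:]))
    return J


def qp_ik_step(q, target, damping=1e-2):
    """One step of min_dq ||J dq - e||^2 + damping * ||dq||^2 (closed form)."""
    e = target - forward_kinematics(q)
    J = jacobian(q)
    H = J.T @ J + damping * np.eye(len(q))  # positive-definite QP Hessian
    return q + np.linalg.solve(H, J.T @ e)


def solve_ik(q0, target, iters=200, tol=1e-6):
    """Iterate QP steps until the task-space error falls below tol."""
    q = np.array(q0, dtype=float)
    for _ in range(iters):
        if np.linalg.norm(target - forward_kinematics(q)) < tol:
            break
        q = qp_ik_step(q, target)
    return q
```

Calling `solve_ik([0.3, 0.3, 0.3], np.array([1.5, 1.0]))` returns one of the infinitely many joint configurations that reach the target. A constrained variant would add inequality constraints (for example joint limits or collision distances) and hand the same quadratic cost to a QP solver.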

Synaptic motor adaptation: A three-factor learning rule for adaptive robotic control in spiking neural networks

Jun 02, 2023

Abstract:Legged robots operating in real-world environments must possess the ability to rapidly adapt to unexpected conditions, such as changing terrains and varying payloads. This paper introduces the Synaptic Motor Adaptation (SMA) algorithm, a novel approach to achieving real-time online adaptation in quadruped robots through the utilization of neuroscience-derived rules of synaptic plasticity with three-factor learning. To facilitate rapid adaptation, we meta-optimize a three-factor learning rule via gradient descent to adapt to uncertainty by approximating an embedding produced by privileged information using only locally accessible onboard sensing data. Our algorithm performs similarly to state-of-the-art motor adaptation algorithms and presents a clear path toward achieving adaptive robotics with neuromorphic hardware.

Learning to learn online with neuromodulated synaptic plasticity in spiking neural networks

Jun 28, 2022

Abstract:We propose that in order to harness our understanding of neuroscience toward machine learning, we must first have powerful tools for training brain-like models of learning. Although substantial progress has been made toward understanding the dynamics of learning in the brain, neuroscience-derived models of learning have yet to demonstrate the same performance capabilities as methods in deep learning such as gradient descent. Inspired by the successes of machine learning using gradient descent, we demonstrate that models of neuromodulated synaptic plasticity from neuroscience can be trained in Spiking Neural Networks (SNNs) with a framework of learning to learn through gradient descent to address challenging online learning problems. This framework opens a new path toward developing neuroscience inspired online learning algorithms.

Learning To Estimate Regions Of Attraction Of Autonomous Dynamical Systems Using Physics-Informed Neural Networks

Nov 18, 2021

Abstract:When learning to perform motor tasks in a simulated environment, neural networks must be allowed to explore their action space to discover new potentially viable solutions. However, in an online learning scenario with physical hardware, this exploration must be constrained by relevant safety considerations in order to avoid damage to the agent's hardware and environment. We aim to address this problem by training a neural network, which we will refer to as a "safety network", to estimate the region of attraction (ROA) of a controlled autonomous dynamical system. This safety network can thereby be used to quantify the relative safety of proposed control actions and prevent the selection of damaging actions. Here we present our development of the safety network by training an artificial neural network (ANN) to represent the ROA of several autonomous dynamical system benchmark problems. The training of this network is predicated upon both Lyapunov theory and neural solutions to partial differential equations (PDEs). By learning to approximate the viscosity solution to a specially chosen PDE that contains the dynamics of the system of interest, the safety network learns to approximate a particular function, similar to a Lyapunov function, whose zero level set is the boundary of the ROA. We train our safety network to solve these PDEs in a semi-supervised manner following a modified version of the Physics Informed Neural Network (PINN) approach, utilizing a loss function that penalizes disagreement with the PDE's initial and boundary conditions, as well as non-zero residual and variational terms. In future work we intend to apply this technique to reinforcement learning agents during motor learning tasks.

Stable Lifelong Learning: Spiking neurons as a solution to instability in plastic neural networks

Nov 07, 2021

Abstract:Synaptic plasticity poses itself as a powerful method of self-regulated unsupervised learning in neural networks. A recent resurgence of interest has developed in utilizing Artificial Neural Networks (ANNs) together with synaptic plasticity for intra-lifetime learning. Plasticity has been shown to improve the learning capabilities of these networks in generalizing to novel environmental circumstances. However, the long-term stability of these trained networks has yet to be examined. This work demonstrates that utilizing plasticity together with ANNs leads to instability beyond the pre-specified lifespan used during training. This instability can lead to the dramatic decline of reward seeking behavior, or quickly lead to reaching environment terminal states. This behavior is shown to hold consistent for several plasticity rules on two different environments across many training time-horizons: a cart-pole balancing problem and a quadrupedal locomotion problem. We present a solution to this instability through the use of spiking neurons.

SpikePropamine: Differentiable Plasticity in Spiking Neural Networks

Jun 04, 2021

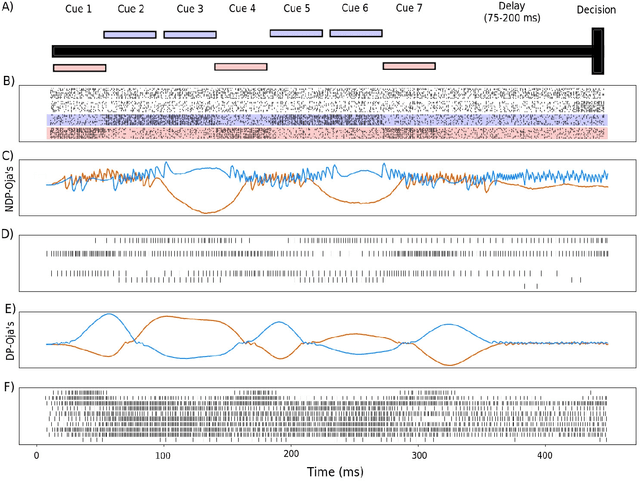

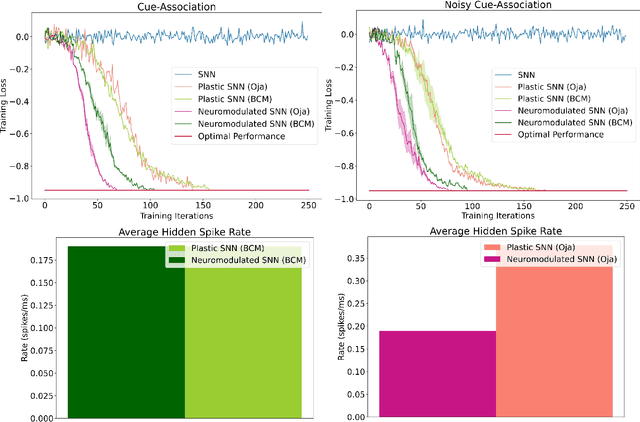

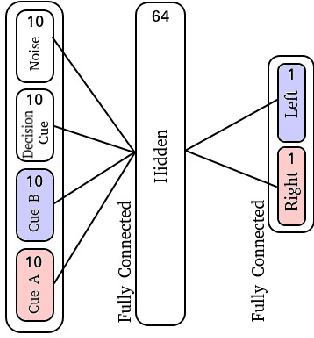

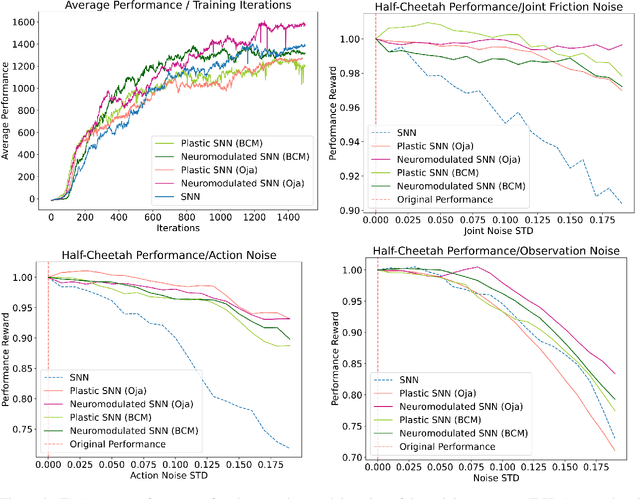

Abstract:The adaptive changes in synaptic efficacy that occur between spiking neurons have been demonstrated to play a critical role in learning for biological neural networks. Despite this source of inspiration, many learning focused applications using Spiking Neural Networks (SNNs) retain static synaptic connections, preventing additional learning after the initial training period. Here, we introduce a framework for simultaneously learning the underlying fixed-weights and the rules governing the dynamics of synaptic plasticity and neuromodulated synaptic plasticity in SNNs through gradient descent. We further demonstrate the capabilities of this framework on a series of challenging benchmarks, learning the parameters of several plasticity rules including BCM, Oja's, and their respective set of neuromodulatory variants. The experimental results display that SNNs augmented with differentiable plasticity are sufficient for solving a set of challenging temporal learning tasks that a traditional SNN fails to solve, even in the presence of significant noise. These networks are also shown to be capable of producing locomotion on a high-dimensional robotic learning task, where near-minimal degradation in performance is observed in the presence of novel conditions not seen during the initial training period.
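For readers unfamiliar with the plasticity rules named above, the following is a minimal rate-based sketch of Oja's rule, dw = lr * y * (x - y * w), with an optional scalar gate standing in for a neuromodulatory signal. It is not the SpikePropamine framework: there are no spiking neurons, the rule parameters are fixed rather than learned by gradient descent, and the multiplicative `modulation` term and all function names are illustrative assumptions.

```python
import numpy as np


def oja_update(w, x, lr=0.01, modulation=1.0):
    """Apply one Oja update; `modulation` is a hypothetical scalar gate."""
    y = w @ x  # postsynaptic activity of a single linear unit
    # Hebbian growth (y * x) with a decay term (y^2 * w) that bounds the norm.
    return w + lr * modulation * y * (x - y * w)


def train_oja(samples, lr=0.01, seed=0):
    """Run Oja's rule over the rows of `samples`, starting from a random unit vector."""
    rng = np.random.default_rng(seed)
    w = rng.normal(size=samples.shape[1])
    w /= np.linalg.norm(w)
    for x in samples:
        w = oja_update(w, x, lr)
    return w
```

On zero-mean inputs, the weight vector tends toward the leading principal direction of the data with a norm close to one, which is the self-stabilizing property that distinguishes Oja's rule from plain Hebbian learning. Setting `modulation` to zero freezes the weights, which is the simplest way a third factor can gate learning.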
