Joao Regateiro

Deformation-Guided Unsupervised Non-Rigid Shape Matching

Nov 27, 2023

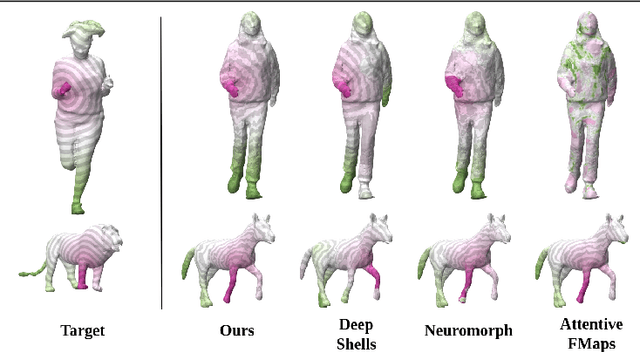

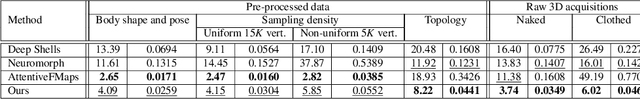

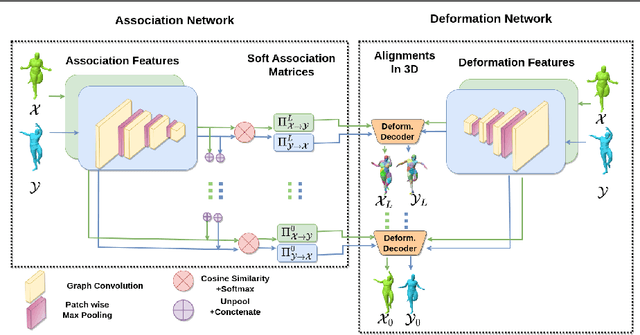

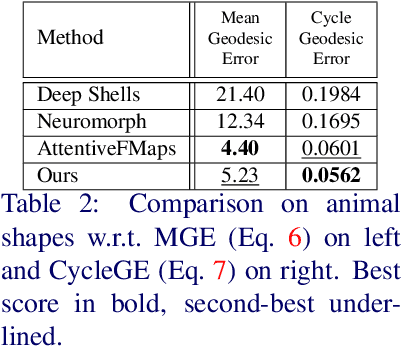

Abstract: We present an unsupervised data-driven approach for non-rigid shape matching. Shape matching identifies correspondences between two shapes and is a fundamental step in many computer vision and graphics applications. Our approach is designed to be particularly robust when matching shapes digitized using 3D scanners, which contain fine geometric detail and suffer from different types of noise, including topological noise caused by the coalescence of spatially close surface regions. We build on two strategies. First, using a hierarchical patch-based shape representation, we match shapes consistently in a coarse-to-fine manner, allowing for robustness to noise. This multi-scale representation drastically reduces the dimensionality of the problem when matching at the coarsest scale, rendering unsupervised learning feasible. Second, we constrain this hierarchical matching to be reflected in 3D by fitting a patch-wise near-rigid deformation model. Using this constraint, we leverage spatial continuity at different scales to capture global shape properties, resulting in matchings that generalize well to data with different deformations and noise characteristics. Experiments demonstrate that our approach obtains significantly better results on raw 3D scans than state-of-the-art methods, while performing on par on standard test scenarios.

3D Human Shape Style Transfer

Sep 03, 2021

Abstract: We consider the problem of modifying or replacing the shape style of a real moving character with that of an arbitrary static real source character. Traditional solutions follow a pose transfer strategy, from the moving character to the source character shape, that relies on skeletal pose parametrization. In this paper, we explore an alternative approach that transfers the source shape style onto the moving character. The expected benefit is to avoid the inherently difficult pose-to-shape conversion required with skeletal parametrization applied to real characters. To this end, we consider image style transfer techniques and investigate how to adapt them to 3D human shapes. Adaptive Instance Normalisation (AdaIN) and SPADE architectures have been demonstrated to efficiently and accurately transfer the style of an image onto another while preserving the original image structure. AdaIN contributes a module that performs style transfer through the statistics of the subjects, while SPADE contributes a residual block architecture that refines the quality of the style transfer. We demonstrate that these approaches are extendable to the 3D shape domain by proposing a convolutional neural network that applies the same principle of preserving the shape structure (shape pose) while transferring the style of a new subject shape. The generated results are supervised through a discriminator module that evaluates the realism of the shape, while forcing the decoder to synthesise plausible shapes and improving the style transfer for unseen subjects. Our experiments demonstrate average qualitative and quantitative improvements of $\approx 56\%$ over the baseline in shape transfer through optimization-based and learning-based methods.
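As a rough illustration of the "style transfer through statistics" idea mentioned in the abstract, the sketch below implements the standard AdaIN operation from image style transfer: each content feature channel is normalised to zero mean and unit variance, then rescaled with the mean and standard deviation of the matching style channel. It is not the network proposed in the paper. The function name `adain`, the `eps` parameter, and the layout of features as one list of per-vertex values per channel are assumptions made for this example only.

```python
from statistics import fmean, pstdev


def adain(content, style, eps=1e-5):
    """Standard AdaIN on per-channel features (illustrative sketch only).

    content, style: lists of channels; each channel is a list of floats
    (assumed here to be one value per vertex or spatial location).
    The two inputs need the same number of channels, but each channel
    may hold a different number of values.
    """
    out = []
    for c_chan, s_chan in zip(content, style):
        # Per-channel statistics of the content (structure) features.
        c_mu, c_sigma = fmean(c_chan), pstdev(c_chan)
        # Per-channel statistics of the style features.
        s_mu, s_sigma = fmean(s_chan), pstdev(s_chan)
        # Normalise the content channel, then apply the style statistics.
        out.append(
            [s_sigma * (v - c_mu) / (c_sigma + eps) + s_mu for v in c_chan]
        )
    return out


# Toy usage: one channel, three content values, four style values.
content_feats = [[0.0, 1.0, 2.0]]
style_feats = [[10.0, 20.0, 30.0, 40.0]]
stylised = adain(content_feats, style_feats)
```

The output keeps the relative ordering of the content values (the "structure") while its per-channel mean and spread follow the style input, which is the property the abstract relies on when carrying the idea over to 3D shapes.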
