Aymen Merrouche

Combining Neural Fields and Deformation Models for Non-Rigid 3D Motion Reconstruction from Partial Data

Dec 11, 2024

Abstract: We introduce a novel, data-driven approach for reconstructing temporally coherent 3D motion from unstructured and potentially partial observations of non-rigidly deforming shapes. Our goal is to achieve high-fidelity motion reconstructions for shapes that undergo near-isometric deformations, such as humans wearing loose clothing. The key novelty of our work lies in combining implicit shape representations with explicit mesh-based deformation models, which enables detailed and temporally coherent motion reconstructions without relying on parametric shape models or decoupling shape and motion. Each frame is represented as a neural field decoded from a feature space in which observations over time are fused, thereby preserving geometric details present in the input data. Temporal coherence is enforced with a near-isometric deformation constraint between adjacent frames that applies to the underlying surface in the neural field. Our method outperforms state-of-the-art approaches, as demonstrated by its application to human and animal motion sequences reconstructed from monocular depth videos.
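To make the idea of a near-isometric constraint between adjacent frames concrete, the sketch below measures how much mesh edge lengths change from one frame to the next. It is only an illustration under stated assumptions: the abstract does not give the loss used in the paper, and the function names `edge_lengths` and `near_isometry_energy`, the edge-length formulation, and the toy square mesh are all hypothetical.

```python
import math

def edge_lengths(vertices, edges):
    """Euclidean length of every edge (i, j) for the given vertex positions."""
    return [math.dist(vertices[i], vertices[j]) for i, j in edges]

def near_isometry_energy(verts_a, verts_b, edges):
    """Mean squared change in edge length between two frames.

    Assumption: edge-length preservation is used here as a simple proxy
    for near-isometry; it is not taken from the paper.
    """
    len_a = edge_lengths(verts_a, edges)
    len_b = edge_lengths(verts_b, edges)
    return sum((a - b) ** 2 for a, b in zip(len_a, len_b)) / len(edges)

# Toy mesh: a unit square split by one diagonal (hypothetical data).
square = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (1.0, 1.0, 0.0), (0.0, 1.0, 0.0)]
edges = [(0, 1), (1, 2), (2, 3), (3, 0), (0, 2)]

# A rigid translation preserves all edge lengths, so the energy is zero.
moved = [(x + 2.0, y, z + 1.0) for x, y, z in square]

# Stretching along x changes edge lengths, so the energy is positive.
stretched = [(2.0 * x, y, z) for x, y, z in square]

energy_moved = near_isometry_energy(square, moved, edges)
energy_stretched = near_isometry_energy(square, stretched, edges)
```

An energy of this kind is zero for rigid motion and for bending without stretching, and it grows as the surface stretches or shrinks, which is the behavior a near-isometric constraint is meant to penalize.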

Deformation-Guided Unsupervised Non-Rigid Shape Matching

Nov 27, 2023

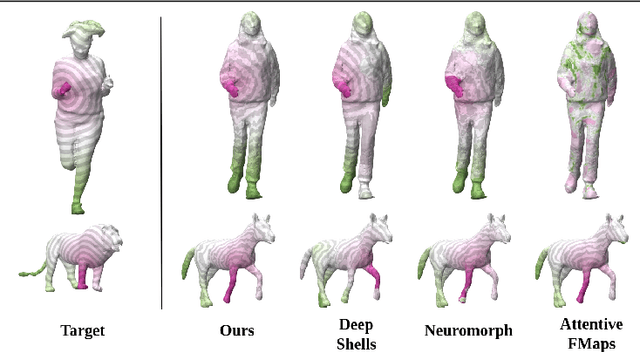

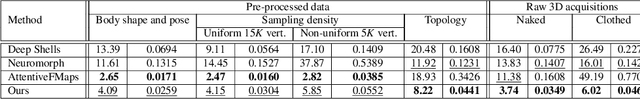

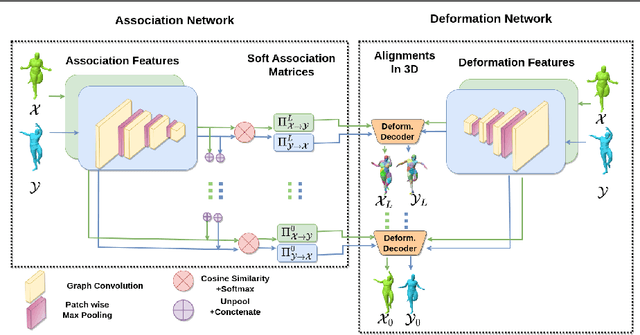

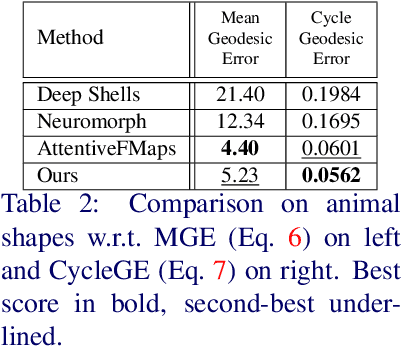

Abstract: We present an unsupervised data-driven approach for non-rigid shape matching. Shape matching identifies correspondences between two shapes and is a fundamental step in many computer vision and graphics applications. Our approach is designed to be particularly robust when matching shapes that are digitized using 3D scanners, contain fine geometric detail, and suffer from different types of noise, including topological noise caused by the coalescence of spatially close surface regions. We build on two strategies. First, using a hierarchical patch-based shape representation, we match shapes consistently in a coarse-to-fine manner, allowing for robustness to noise. This multi-scale representation drastically reduces the dimensionality of the problem when matching at the coarsest scale, rendering unsupervised learning feasible. Second, we constrain this hierarchical matching to be reflected in 3D by fitting a patch-wise near-rigid deformation model. Using this constraint, we leverage spatial continuity at different scales to capture global shape properties, resulting in matchings that generalize well to data with different deformations and noise characteristics. Experiments demonstrate that our approach obtains significantly better results on raw 3D scans than state-of-the-art methods, while performing on par on standard test scenarios.
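The coarse-to-fine strategy can be illustrated with a small toy sketch: coarse patches are matched first, and each fine patch is then matched only among the children of its parent's match, which shrinks the search space. This is not the learned method described in the abstract. The nearest-neighbor rule, the hand-written 2D features, and the names `nearest` and `coarse_to_fine_match` are all assumptions made for illustration.

```python
import math

def nearest(query_feat, candidate_ids, feats):
    """Return the candidate whose feature is closest to query_feat."""
    return min(candidate_ids, key=lambda c: math.dist(query_feat, feats[c]))

def coarse_to_fine_match(hier_a, hier_b, feat_a, feat_b):
    """Match fine patches of shape A to fine patches of shape B.

    hier_*: dict mapping a coarse patch id to its list of fine patch ids.
    feat_*: dict mapping any patch id to a feature tuple.
    Assumption: plain nearest-neighbor matching stands in for the
    learned matching of the actual approach.
    """
    fine_matches = {}
    for coarse_a, fine_ids_a in hier_a.items():
        # Step 1: match at the coarse scale (few candidates).
        coarse_b = nearest(feat_a[coarse_a], list(hier_b), feat_b)
        # Step 2: restrict fine matching to the matched coarse patch.
        for fine_a in fine_ids_a:
            fine_matches[fine_a] = nearest(feat_a[fine_a], hier_b[coarse_b], feat_b)
    return fine_matches

# Hypothetical two-level hierarchies with 2D features.
hier_a = {"A0": ["a0", "a1"], "A1": ["a2", "a3"]}
hier_b = {"B0": ["b0", "b1"], "B1": ["b2", "b3"]}
feat_a = {"A0": (0.0, 0.0), "A1": (10.0, 0.0),
          "a0": (0.0, 1.0), "a1": (0.0, -1.0),
          "a2": (10.0, 1.0), "a3": (10.0, -1.0)}
feat_b = {"B0": (0.5, 0.0), "B1": (9.5, 0.0),
          "b0": (0.4, 1.1), "b1": (0.6, -0.9),
          "b2": (9.6, 0.9), "b3": (9.4, -1.2)}

matches = coarse_to_fine_match(hier_a, hier_b, feat_a, feat_b)
```

In this toy setting each fine patch is compared against two candidates instead of four; with deeper hierarchies and many patches, the same restriction is what keeps the coarsest-level problem small.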
