Joao F. Santos

Beam Profiling and Beamforming Modeling for mmWave NextG Networks

Aug 23, 2024

Abstract: This paper presents an experimental study of mmWave beam profiling on a mmWave testbed and develops a machine learning model for beamforming based on the experimental data. The datasets obtained from the beam profiling, together with the machine learning model for beamforming, are valuable for a broad set of network design problems in complex and dynamic mmWave networks, such as network topology optimization, user equipment association, power allocation, and beam scheduling. We used two commercial-grade mmWave testbeds, operating at 27 GHz and 71 GHz respectively, for beam profiling. The obtained datasets were used to train the machine learning model to estimate the received downlink signal power and data rate at the receivers (user equipment at different geographical locations within range of a transmitter, i.e., a base station). The results show high prediction accuracy with low mean square error (loss), indicating the model's ability to estimate the received signal power or data rate at each individual receiver covered by a beam. The dataset and the machine learning-based beamforming model can assist researchers in optimizing various network design problems for mmWave networks.
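The abstract reports accuracy as a mean square error on the predicted received power. As a minimal sketch of what that evaluation involves, assuming each receiver is described by numeric features (here hypothetical (x, y) positions) and substituting ordinary least squares for the paper's machine learning model, which the abstract does not specify, the following fits a regressor and scores it with MSE on held-out receivers. The function names and the synthetic data are illustrative only, not the authors' code or measurements.

```python
import numpy as np


def mse(y_true, y_pred) -> float:
    """Mean squared error between measured and predicted values."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    return float(np.mean((y_true - y_pred) ** 2))


def _design_matrix(features) -> np.ndarray:
    # Append a column of ones so the fit includes an intercept term.
    features = np.asarray(features, dtype=float)
    return np.column_stack([features, np.ones(features.shape[0])])


def fit_power_model(features, rx_power_dbm) -> np.ndarray:
    """Ordinary least squares fit of received power on per-receiver features.

    Hypothetical stand-in for the paper's ML model; features are assumptions.
    """
    coef, *_ = np.linalg.lstsq(_design_matrix(features),
                               np.asarray(rx_power_dbm, dtype=float),
                               rcond=None)
    return coef


def predict_power(coef, features) -> np.ndarray:
    """Predicted received power for each receiver's feature row."""
    return _design_matrix(features) @ coef


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    # Synthetic data only: receiver (x, y) positions in metres and a made-up
    # linear power trend plus noise, NOT measurements from the testbeds.
    positions = rng.uniform(0.0, 50.0, size=(200, 2))
    power = (-40.0 - 0.5 * positions[:, 0] - 0.2 * positions[:, 1]
             + rng.normal(0.0, 1.0, 200))
    train, test = slice(0, 150), slice(150, 200)
    coef = fit_power_model(positions[train], power[train])
    print("held-out MSE (dB^2):",
          mse(power[test], predict_power(coef, positions[test])))
```

The same `mse` helper applies unchanged if the regression target is data rate instead of received power; only the units of the reported loss change.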

Radio Access Technology Characterisation Through Object Detection

Jul 27, 2020

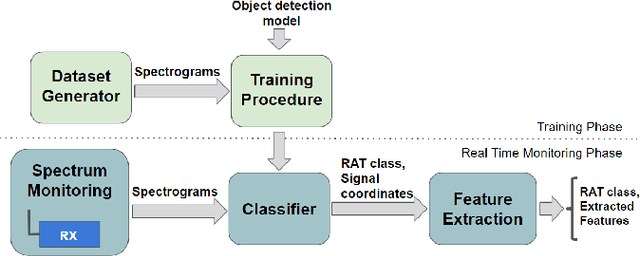

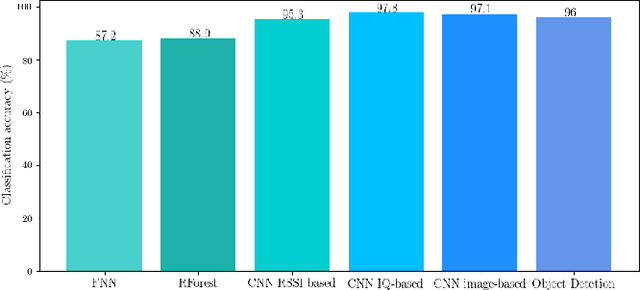

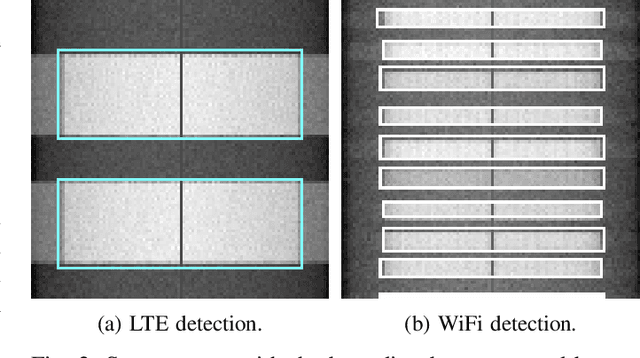

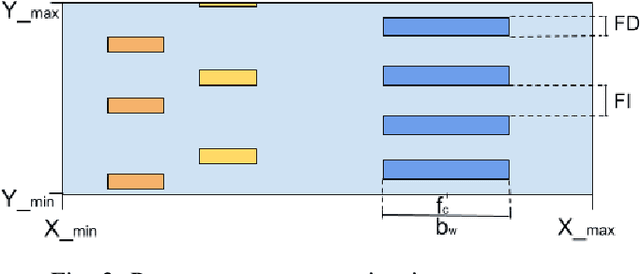

Abstract: Radio access technology (RAT) classification and monitoring are essential for the efficient coexistence of different communication systems in shared spectrum. Shared spectrum, including operation in license-exempt bands, is envisioned in the 5G standards (e.g., 3GPP Rel. 16). In this paper, we propose a machine learning (ML) approach to characterise spectrum utilisation and facilitate dynamic access to it. Recent advances in convolutional neural networks (CNNs) enable us to perform waveform classification by processing spectrograms as images. In contrast to other ML methods that only provide the class of the monitored RATs, the proposed solution not only recognises different RATs in shared spectrum but also identifies critical parameters such as inter-frame duration, frame duration, centre frequency, and signal bandwidth, using object detection and a feature extraction module to extract features from spectrograms. We implemented and evaluated our solution using a dataset of commercial transmissions, as well as in a software-defined radio (SDR) testbed environment. The evaluated scenario was the coexistence of WiFi and LTE transmissions in shared spectrum. Our results show that the approach achieves 96% accuracy in classifying RATs from a dataset that captures transmissions of regular user communications. They also show that the extracted features are precise within a margin of 2% and that the approach detects more than 94% of objects under a broad range of transmission power levels and interference conditions.
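The abstract does not describe how the feature extraction module turns detections into physical parameters. One plausible sketch, assuming the detector returns axis-aligned bounding boxes in pixel coordinates and that the spectrogram's time and frequency resolution are known, maps box width to frame duration, box height to bandwidth, the box's vertical centre to centre frequency, and the gap between consecutive boxes of the same RAT to inter-frame duration. `Box`, `SpectrogramAxes`, and the function names are hypothetical, not the authors' implementation.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Box:
    """A detected object in spectrogram pixel coordinates (hypothetical format).

    Assumption: x grows with time (left to right) and y grows with frequency,
    with row 0 mapped to the lowest frequency shown in the spectrogram.
    """
    label: str  # e.g. "WiFi" or "LTE"
    x_min: float
    x_max: float
    y_min: float
    y_max: float


@dataclass
class SpectrogramAxes:
    """Hypothetical pixel-to-physical scaling of the spectrogram image."""
    seconds_per_px: float  # time spanned by one image column
    hz_per_px: float       # frequency spanned by one image row
    f_start_hz: float      # frequency at y = 0


def frame_features(box: Box, axes: SpectrogramAxes) -> Dict[str, float]:
    """Frame duration, bandwidth and centre frequency of one detected frame."""
    return {
        "frame_duration_s": (box.x_max - box.x_min) * axes.seconds_per_px,
        "bandwidth_hz": (box.y_max - box.y_min) * axes.hz_per_px,
        "centre_freq_hz": axes.f_start_hz
        + 0.5 * (box.y_min + box.y_max) * axes.hz_per_px,
    }


def inter_frame_durations(boxes: List[Box], label: str,
                          axes: SpectrogramAxes) -> List[float]:
    """Gaps between consecutive frames of one RAT, ordered by start time."""
    frames = sorted((b for b in boxes if b.label == label),
                    key=lambda b: b.x_min)
    # Gap = start of the next frame minus end of the current one.
    return [(nxt.x_min - cur.x_max) * axes.seconds_per_px
            for cur, nxt in zip(frames, frames[1:])]
```

Image libraries usually place row 0 at the top of the picture, so in practice the y coordinates would need flipping before applying this mapping.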
