Jinwook Jung

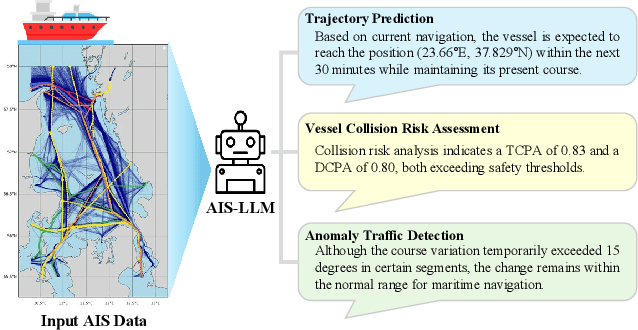

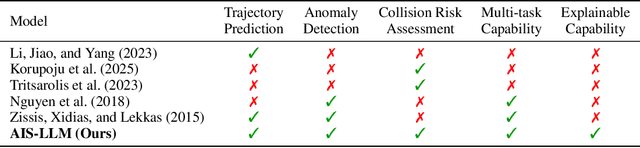

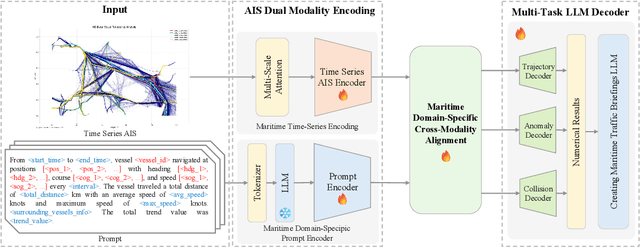

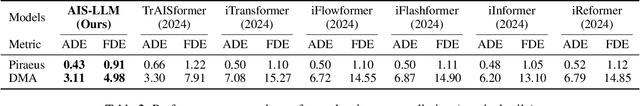

AIS-LLM: A Unified Framework for Maritime Trajectory Prediction, Anomaly Detection, and Collision Risk Assessment with Explainable Forecasting

Aug 11, 2025

Abstract: With the increase in maritime traffic and the mandatory implementation of the Automatic Identification System (AIS), the importance and diversity of maritime traffic analysis tasks based on AIS data, such as vessel trajectory prediction, anomaly detection, and collision risk assessment, are rapidly growing. However, existing approaches tend to address these tasks individually, making it difficult to consider complex maritime situations holistically. To address this limitation, we propose a novel framework, AIS-LLM, which integrates time-series AIS data with a large language model (LLM). AIS-LLM consists of a Time-Series Encoder for processing AIS sequences, an LLM-based Prompt Encoder, a Cross-Modality Alignment Module for semantic alignment between time-series data and textual prompts, and an LLM-based Multi-Task Decoder. This architecture enables the simultaneous execution of three key tasks within a single end-to-end system: trajectory prediction, anomaly detection, and vessel collision risk assessment. Experimental results demonstrate that AIS-LLM outperforms existing methods across individual tasks, validating its effectiveness. Furthermore, by jointly analyzing task outputs to generate situation summaries and briefings, AIS-LLM shows potential for more intelligent and efficient maritime traffic management.

Mitigating Parameter Interference in Model Merging via Sharpness-Aware Fine-Tuning

Apr 20, 2025

Abstract: Large-scale deep learning models with a pretraining-finetuning paradigm have led to a surge of task-specific models fine-tuned from a common pre-trained model. Recently, several research efforts have been made to merge these large models into a single multi-task model, particularly with simple arithmetic on parameters. Such a merging methodology faces a central challenge: interference between model parameters fine-tuned on different tasks. A few recent works have focused on designing a new fine-tuning scheme that leads to small parameter interference, but at the cost of the performance of each task-specific fine-tuned model, thereby limiting the performance of the merged model. To improve the performance of a merged model, we note that a fine-tuning scheme should aim for (1) smaller parameter interference and (2) better performance of each fine-tuned model on the corresponding task. In this work, we aim to design a new fine-tuning objective function that works towards these two goals. In the course of this process, we find this objective function to be strikingly similar to the sharpness-aware minimization (SAM) objective function, which aims to achieve generalization by finding flat minima. Drawing upon this observation, we propose to fine-tune pre-trained models via sharpness-aware minimization. The experimental and theoretical results showcase the effectiveness and orthogonality of our proposed approach, which improves performance across various merging and fine-tuning methods. Our code is available at https://github.com/baiklab/SAFT-Merge.
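
The two ingredients this abstract combines, merging by "simple arithmetic on parameters" and a sharpness-aware update, can be sketched on toy data. This is a minimal illustration under stated assumptions, not the authors' implementation: the merge shown is the commonly used task-vector form (add scaled differences from the pre-trained weights), the SAM step is the standard two-gradient approximation, and the function names, the dict-of-lists parameter format, and the `scale`, `lr`, and `rho` values are all hypothetical.

```python
import math
from typing import Callable, Dict, List

# Hypothetical toy format: parameter name -> flat list of weights.
Params = Dict[str, List[float]]


def task_vector(finetuned: Params, pretrained: Params) -> Params:
    """Difference between fine-tuned and pre-trained weights, per parameter."""
    return {
        name: [f - p for f, p in zip(finetuned[name], pretrained[name])]
        for name in pretrained
    }


def merge_task_arithmetic(
    pretrained: Params, finetuned_models: List[Params], scale: float = 1.0
) -> Params:
    """Add the scaled task vector of every fine-tuned model to the pre-trained weights."""
    merged = {name: list(values) for name, values in pretrained.items()}
    for finetuned in finetuned_models:
        delta = task_vector(finetuned, pretrained)
        for name in merged:
            merged[name] = [m + scale * d for m, d in zip(merged[name], delta[name])]
    return merged


def sam_step(
    weights: List[float],
    grad_fn: Callable[[List[float]], List[float]],
    lr: float = 0.1,
    rho: float = 0.05,
) -> List[float]:
    """One SAM-style update: perturb along the gradient, then descend with the
    gradient evaluated at the perturbed point."""
    grad = grad_fn(weights)
    # Fall back to 1.0 when the gradient is exactly zero to avoid dividing by zero.
    norm = math.sqrt(sum(g * g for g in grad)) or 1.0
    perturbed = [w + rho * g / norm for w, g in zip(weights, grad)]
    grad_at_perturbed = grad_fn(perturbed)
    return [w - lr * g for w, g in zip(weights, grad_at_perturbed)]


if __name__ == "__main__":
    base = {"w": [1.0, 2.0]}
    model_a = {"w": [1.5, 2.0]}  # changed only the first weight
    model_b = {"w": [1.0, 1.0]}  # changed only the second weight
    print(merge_task_arithmetic(base, [model_a, model_b]))

    # Toy loss 0.5 * ||w||^2, whose gradient is w itself.
    print(sam_step([3.0, 4.0], lambda w: list(w)))
```

In this toy case the two fine-tuned models change disjoint weights, so their task vectors do not interfere and the merge keeps both changes. Interference arises when task vectors modify the same weights in conflicting directions, which is the situation the abstract targets.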

PBVS 2024 Solution: Self-Supervised Learning and Sampling Strategies for SAR Classification in Extreme Long-Tail Distribution

Dec 17, 2024

Abstract: The Multimodal Learning Workshop (PBVS 2024) aims to improve the performance of automatic target recognition (ATR) systems by leveraging both Synthetic Aperture Radar (SAR) data, which is difficult to interpret but remains unaffected by weather conditions and visible light, and Electro-Optical (EO) data for simultaneous learning. The subtask, known as the Multi-modal Aerial View Imagery Challenge - Classification, focuses on predicting the class label of a low-resolution aerial image based on a set of SAR-EO image pairs and their respective class labels. The provided dataset consists of SAR-EO pairs, characterized by a severe long-tail distribution with over a 1000-fold difference between the largest and smallest classes, making typical long-tail methods difficult to apply. Additionally, the domain disparity between the SAR and EO datasets limits the effectiveness of standard multimodal methods. To address these significant challenges, we propose a two-stage learning approach that utilizes self-supervised techniques, combined with multimodal learning and inference through SAR-to-EO translation for effective EO utilization. In the final testing phase of the PBVS 2024 Multi-modal Aerial View Imagery Challenge - Classification (SAR Classification) task, our model achieved an accuracy of 21.45%, an AUC of 0.56, and a total score of 0.30, placing us 9th in the competition.
