Jinwoo Park

COMET: Codebook-based Online-adaptive Multi-scale Embedding for Time-series Anomaly Detection

Feb 03, 2026

Abstract: Time series anomaly detection is a critical task across various industrial domains. However, capturing temporal dependencies and multivariate correlations within patch-level representation learning remains underexplored, and reliance on single-scale patterns limits the detection of anomalies across different temporal ranges. Furthermore, focusing on normal data representations makes models vulnerable to distribution shifts at inference time. To address these limitations, we propose Codebook-based Online-adaptive Multi-scale Embedding for Time-series anomaly detection (COMET), which consists of three key components: (1) Multi-scale Patch Encoding captures temporal dependencies and inter-variable correlations across multiple patch scales. (2) Vector-Quantized Coreset learns representative normal patterns via a codebook and detects anomalies with a dual score that combines quantization error and memory distance. (3) Online Codebook Adaptation generates pseudo-labels based on codebook entries and dynamically adapts the model at inference through contrastive learning. Experiments on five benchmark datasets demonstrate that COMET achieves the best performance in 36 out of 45 evaluation metrics, validating its effectiveness across diverse environments.
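
The dual score described in component (2) can be illustrated with a minimal sketch. The abstract does not say how the two terms are computed or combined, so everything below is an assumption made for illustration: Euclidean nearest-neighbor distances, a weighted sum with a hypothetical weight `alpha`, and toy 2-D embeddings. It is not the authors' implementation.

```python
import math

def nearest_distance(x, bank):
    """Euclidean distance from x to its nearest vector in bank."""
    return min(math.dist(x, v) for v in bank)

def dual_score(x, codebook, memory, alpha=0.5):
    """Hypothetical dual anomaly score (weighted sum is an assumption).

    quant_err: distance from the embedding to its nearest codebook entry.
    mem_dist:  distance from the embedding to its nearest stored normal sample.
    """
    quant_err = nearest_distance(x, codebook)
    mem_dist = nearest_distance(x, memory)
    return alpha * quant_err + (1 - alpha) * mem_dist

# Toy data: codebook entries and a small memory of normal embeddings.
codebook = [(0.0, 0.0), (1.0, 1.0)]
memory = [(0.0, 0.1), (0.9, 1.0), (0.5, 0.5)]

normal_score = dual_score((0.1, 0.0), codebook, memory)
anomaly_score = dual_score((4.0, -3.0), codebook, memory)
```

In this toy setting, an embedding far from both the codebook and the memory receives a larger score than one close to the normal patterns.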

Learning and Optimizing the Efficacy of Spatio-Temporal Task Allocation under Temporal and Resource Constraints

Jan 05, 2026

Abstract: Complex multi-robot missions often require heterogeneous teams to jointly optimize task allocation, scheduling, and path planning to improve team performance under strict constraints. We formalize these complexities into a new class of problems, dubbed Spatio-Temporal Efficacy-optimized Allocation for Multi-robot systems (STEAM). STEAM builds upon trait-based frameworks that model robots using their capabilities (e.g., payload and speed), but goes beyond the typical binary success-failure model by explicitly modeling the efficacy of allocations as trait-efficacy maps. These maps encode how the aggregated capabilities assigned to a task determine performance. Further, STEAM accommodates spatio-temporal constraints, including a user-specified time budget (i.e., maximum makespan). To solve STEAM problems, we contribute a novel algorithm named Efficacy-optimized Incremental Task Allocation Graph Search (E-ITAGS) that simultaneously optimizes task performance and respects time budgets by interleaving task allocation, scheduling, and path planning. Motivated by the fact that trait-efficacy maps are difficult, if not impossible, to specify, E-ITAGS efficiently learns them using a realizability-aware active learning module. Our approach is realizability-aware since it explicitly accounts for the fact that not all combinations of traits are realizable by the robots available during learning. Further, we derive experimentally-validated bounds on E-ITAGS' suboptimality with respect to efficacy. Detailed numerical simulations and experiments using an emergency response domain demonstrate that E-ITAGS generates allocations of higher efficacy compared to baselines, while respecting resource and spatio-temporal constraints. We also show that our active learning approach is sample efficient and establishes a principled tradeoff between data and computational efficiency.
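
As a rough illustration of what a trait-efficacy map does, the sketch below aggregates the traits of the robots assigned to a task and maps the result to a graded efficacy value instead of a binary success or failure. The robot names, trait values, summation as the aggregation rule, and the saturating map itself are all hypothetical; the abstract states that such maps are learned, not hand-specified, so this only shows the shape of the idea.

```python
# Hypothetical robots described by trait vectors (values are made up).
ROBOTS = {
    "ugv_a": {"payload": 10.0, "speed": 2.0},
    "ugv_b": {"payload": 10.0, "speed": 2.5},
    "uav_a": {"payload": 1.0, "speed": 8.0},
}

def aggregate_traits(team):
    """Sum each trait over the assigned robots (summation is an assumption)."""
    total = {}
    for name in team:
        for trait, value in ROBOTS[name].items():
            total[trait] = total.get(trait, 0.0) + value
    return total

def efficacy(traits, required):
    """Hypothetical trait-efficacy map with values in [0, 1].

    Each required trait contributes the fraction of the requirement that is
    met (capped at 1); the task efficacy is the worst of these fractions.
    """
    return min(min(1.0, traits.get(t, 0.0) / need) for t, need in required.items())

task_requirement = {"payload": 20.0}  # illustrative requirement
partial = efficacy(aggregate_traits(["ugv_a"]), task_requirement)
full = efficacy(aggregate_traits(["ugv_a", "ugv_b"]), task_requirement)
```

Under a binary model the single-robot allocation would simply fail; with a graded map it receives partial credit, which is what allows an allocator to trade efficacy against a time budget.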

Unveiling Key Aspects of Fine-Tuning in Sentence Embeddings: A Representation Rank Analysis

May 18, 2024

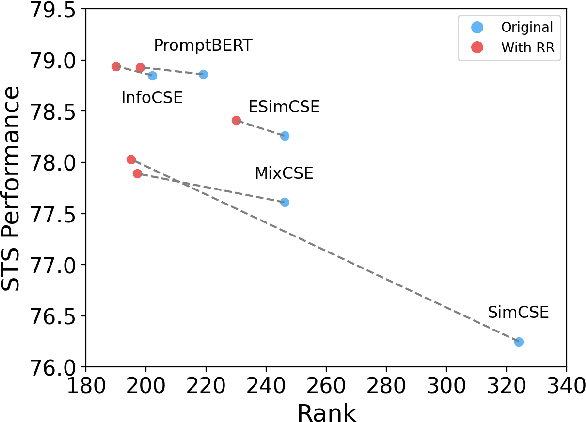

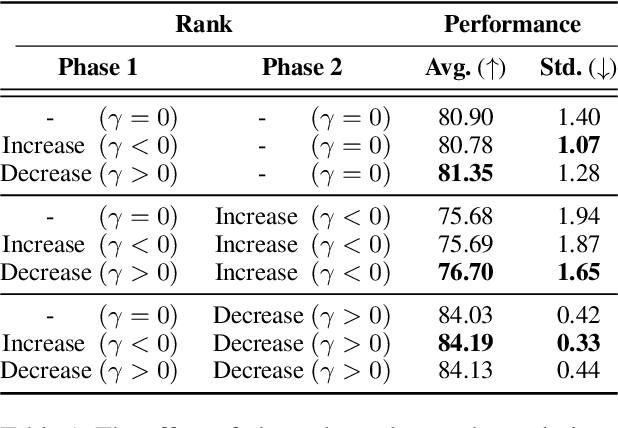

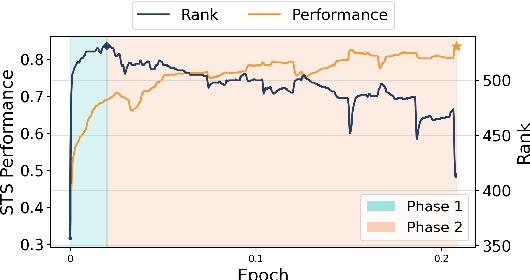

Abstract: The latest advancements in unsupervised learning of sentence embeddings predominantly involve employing contrastive learning-based (CL-based) fine-tuning over pre-trained language models. In this study, we analyze the latest sentence embedding methods by adopting representation rank as the primary tool of analysis. We first define Phase 1 and Phase 2 of fine-tuning based on when representation rank peaks. Utilizing these phases, we conduct a thorough analysis and obtain essential findings across key aspects, including alignment and uniformity, linguistic abilities, and correlation between performance and rank. For instance, we find that the dynamics of the key aspects can undergo significant changes as fine-tuning transitions from Phase 1 to Phase 2. Based on these findings, we experiment with a rank reduction (RR) strategy that facilitates rapid and stable fine-tuning of the latest CL-based methods. Through empirical investigations, we showcase the efficacy of RR in enhancing the performance and stability of five state-of-the-art sentence embedding methods.

Deep reinforcement learning for guidewire navigation in coronary artery phantom

Oct 05, 2021

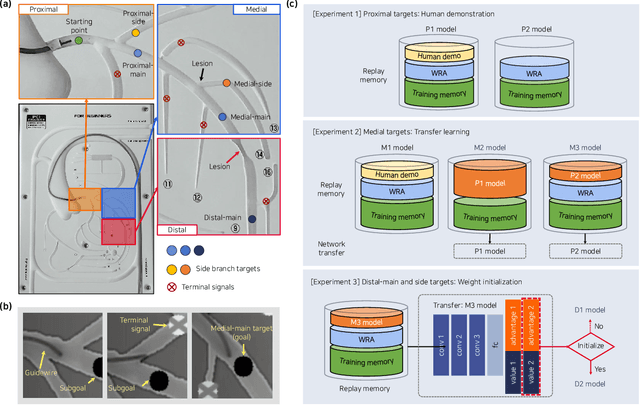

Abstract: In percutaneous intervention for treatment of coronary plaques, guidewire navigation is a primary procedure for stent delivery. Steering a flexible guidewire within coronary arteries requires considerable training, and the non-linearity between the control operation and the movement of the guidewire makes precise manipulation difficult. Here, we introduce a deep reinforcement learning (RL) framework for autonomous guidewire navigation in a robot-assisted coronary intervention. Using Rainbow, a segment-wise learning approach is applied to determine how best to accelerate training using human demonstrations with deep Q-learning from demonstrations (DQfD), transfer learning, and weight initialization. The "state" for RL is customized as a focus window near the guidewire tip, and subgoals are placed to mitigate a sparse reward problem. The RL agent improves performance, eventually enabling the guidewire to reach all valid targets in the "stable" phase. Our framework opens a new direction in the automation of robot-assisted intervention, providing guidance on RL in physical spaces involving mechanical fatigue.
