Jing-Yuan Xia

A Meta-Learning Based Gradient Descent Algorithm for MU-MIMO Beamforming

Oct 27, 2022

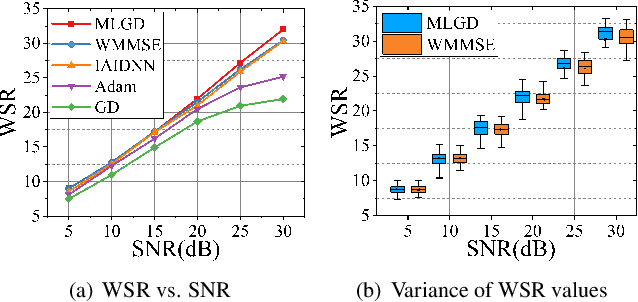

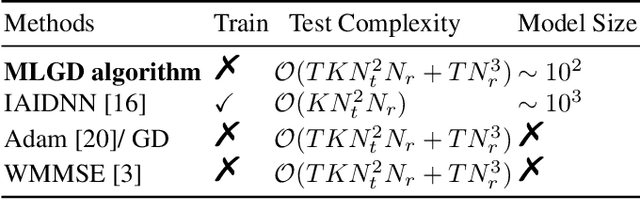

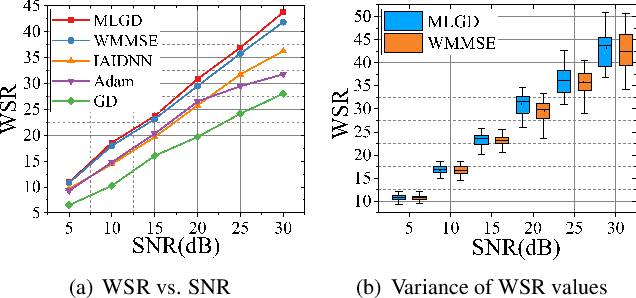

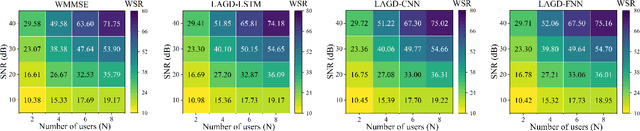

Abstract: Multi-user multiple-input multiple-output (MU-MIMO) beamforming design is typically formulated as a non-convex weighted sum rate (WSR) maximization problem that is known to be NP-hard. This problem is solved either by iterative algorithms, which suffer from slow convergence, or, more recently, by deep learning tools, which require a time-consuming pre-training process. In this paper, we propose a low-complexity meta-learning based gradient descent algorithm. A meta network with a lightweight architecture learns an adaptive gradient descent update rule that directly optimizes the beamformer. This lightweight network is trained during the iterative optimization process, an approach we refer to as *training while solving*, which removes both the pre-training process and the data dependency of existing deep learning based solutions. Extensive simulations show that the proposed method achieves superior WSR performance compared to existing learning-based approaches as well as the conventional WMMSE algorithm, while enjoying much lower computational load.
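To make the WSR objective in this abstract concrete, here is a minimal sketch of how it could be evaluated. It assumes the common special case of single-antenna users with one linear beamformer per user; the abstract does not state the system model, so the function name, argument layout, and that simplification are all illustrative assumptions rather than the paper's formulation.

```python
import math


def weighted_sum_rate(H, W, weights, noise_power):
    """Weighted sum rate in bits/s/Hz (illustrative helper, not from the paper).

    H[k]       : channel vector of user k (list of complex), assumed single-antenna user
    W[k]       : beamforming vector intended for user k (list of complex)
    weights[k] : non-negative priority weight of user k
    noise_power: receiver noise variance, assumed equal for all users
    """
    total = 0.0
    for k, h in enumerate(H):
        # Power received at user k from every stream j: |h_k^H w_j|^2
        gains = [abs(sum(a.conjugate() * b for a, b in zip(h, w))) ** 2 for w in W]
        interference = sum(g for j, g in enumerate(gains) if j != k)
        sinr = gains[k] / (interference + noise_power)
        total += weights[k] * math.log2(1.0 + sinr)
    return total


# Two users with orthogonal channels and matched beamformers: no interference.
H_demo = [[1 + 0j, 0j], [0j, 1 + 0j]]
W_demo = [[1 + 0j, 0j], [0j, 1 + 0j]]
print(weighted_sum_rate(H_demo, W_demo, [1.0, 1.0], noise_power=1.0))
```

The objective is non-convex in the beamformers because each user's beamformer appears in the numerator of its own SINR and in the denominators of all other users' SINRs.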

A Learning Aided Gradient Descent for MISO Beamforming

Jun 21, 2022

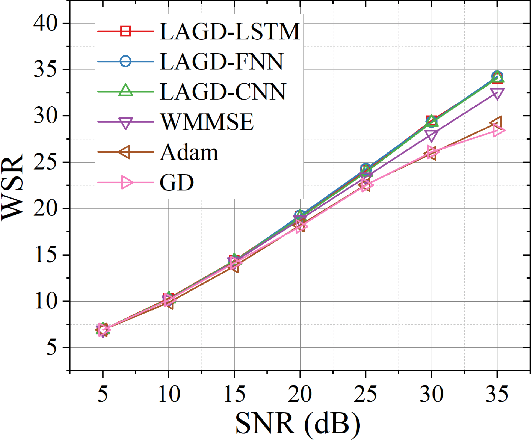

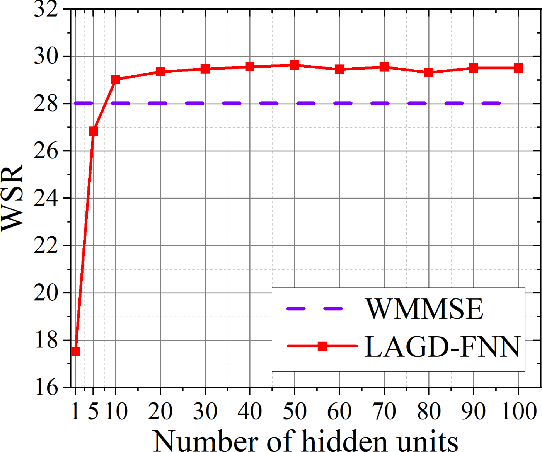

Abstract: This paper proposes a learning aided gradient descent (LAGD) algorithm to solve the weighted sum rate (WSR) maximization problem for multiple-input single-output (MISO) beamforming. The proposed LAGD algorithm directly optimizes the transmit precoder through implicit gradient descent based iterations; at each iteration the optimization strategy is determined by a neural network and is thus dynamic and adaptive. At each instance of the problem, this network is initialized randomly and updated throughout the iterative solution process. Therefore, the LAGD algorithm can be implemented at any signal-to-noise ratio (SNR) and for arbitrary antenna/user numbers, and does not require labelled data or training prior to deployment. Numerical results show that the LAGD algorithm can outperform the well-known WMMSE algorithm as well as other learning-based solutions with modest computational complexity. Our code is available at https://github.com/XiaGroup/LAGD.
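For orientation, the sketch below shows the kind of hand-designed iteration that a learned update rule would replace. It is not LAGD and contains no neural network: it is plain projected gradient ascent on the WSR with a finite-difference gradient and a simple step-halving rule. The total power budget `p_max`, the step-size schedule, and all function names are assumptions made for illustration; the abstract does not specify them.

```python
import math
import random


def wsr(H, W, weights, noise_power):
    """Weighted sum rate for single-antenna users (illustrative helper)."""
    total = 0.0
    for k, h in enumerate(H):
        gains = [abs(sum(a.conjugate() * b for a, b in zip(h, w))) ** 2 for w in W]
        interference = sum(g for j, g in enumerate(gains) if j != k)
        total += weights[k] * math.log2(1.0 + gains[k] / (interference + noise_power))
    return total


def project_power(W, p_max):
    """Scale the precoder down if it exceeds the (assumed) total power budget."""
    power = sum(abs(x) ** 2 for w in W for x in w)
    if power <= p_max:
        return W
    scale = math.sqrt(p_max / power)
    return [[x * scale for x in w] for w in W]


def numerical_gradient(H, W, weights, noise_power, eps=1e-6):
    """Finite-difference ascent direction: df/dRe(w) + 1j * df/dIm(w) per entry."""
    base = wsr(H, W, weights, noise_power)
    grad = [[0j] * len(w) for w in W]
    for k in range(len(W)):
        for m in range(len(W[k])):
            for direction in (1.0, 1j):
                perturbed = [list(w) for w in W]
                perturbed[k][m] += eps * direction
                slope = (wsr(H, perturbed, weights, noise_power) - base) / eps
                grad[k][m] += direction * slope
    return grad


def projected_gradient_ascent(H, weights, noise_power, p_max, iters=50, seed=0):
    """Hand-designed baseline; a learned rule would replace the fixed update below."""
    rng = random.Random(seed)
    n_users, n_antennas = len(H), len(H[0])
    W = project_power(
        [[complex(rng.gauss(0, 1), rng.gauss(0, 1)) for _ in range(n_antennas)]
         for _ in range(n_users)],
        p_max,
    )
    step = 0.1
    value = wsr(H, W, weights, noise_power)
    history = [value]
    for _ in range(iters):
        G = numerical_gradient(H, W, weights, noise_power)
        candidate = project_power(
            [[w + step * g for w, g in zip(wk, gk)] for wk, gk in zip(W, G)], p_max
        )
        candidate_value = wsr(H, candidate, weights, noise_power)
        if candidate_value > value:
            W, value = candidate, candidate_value  # accept only improving steps
        else:
            step *= 0.5  # otherwise shrink the step and retry next iteration
        history.append(value)
    return W, history
```

Because only improving steps are accepted, the recorded WSR never decreases, but the iteration can still stall at a poor stationary point of the non-convex objective. A network-determined update strategy, as the abstract describes, is one way to make the step direction and size adaptive instead of fixed.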
