Jinchegn Hu

Potential Auto-driving Threat: Universal Rain-removal Attack

Nov 18, 2022

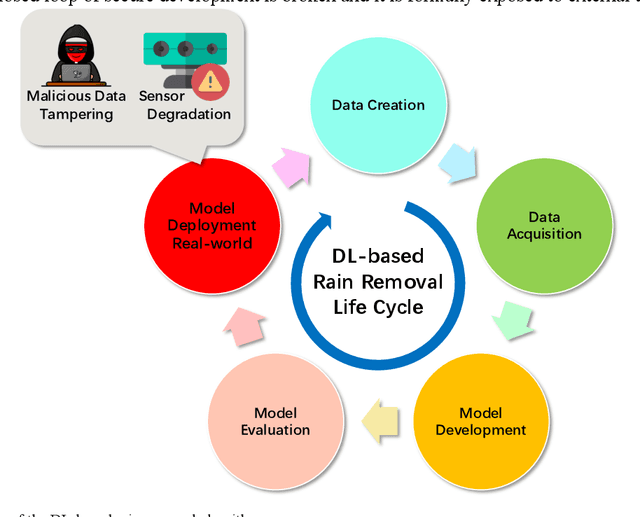

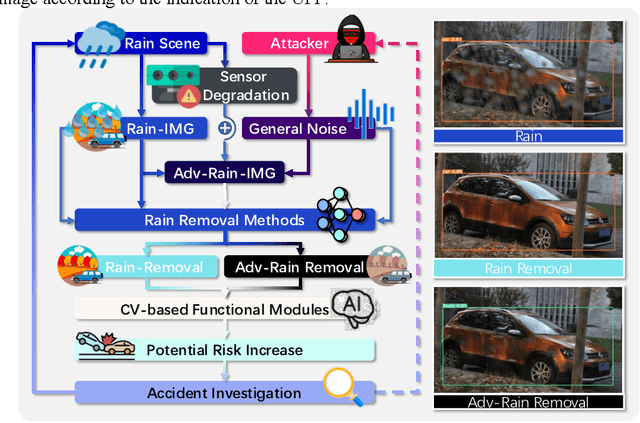

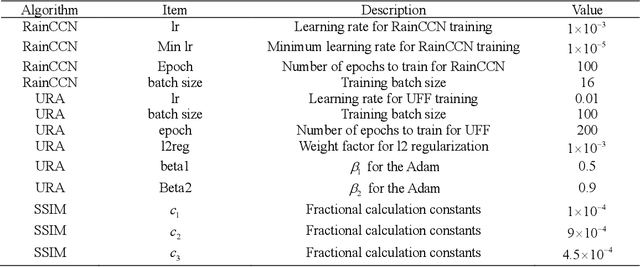

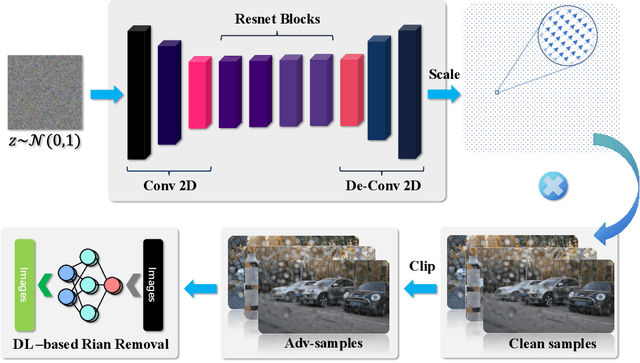

Abstract: Robustness in adverse weather is a significant challenge for computer vision algorithms in autonomous driving applications. Image rain-removal algorithms are a general solution to this problem. Drawing on the representational power of neural networks, they mine hidden features to learn the relationship between raindrops or rain streaks and the underlying image, and then restore the rain-free scene. However, previous research has focused on architectural innovations and has yet to consider the vulnerabilities inherent in neural networks. This research gap points to a potential security threat to the intelligent perception of autonomous vehicles in rain. In this paper, we propose a universal rain-removal attack (URA) that exploits the vulnerability of image rain-removal algorithms by generating a non-additive spatial perturbation that significantly reduces the similarity and image quality of the restored scene. Notably, this perturbation is difficult for humans to recognise and is the same for different target images. URA can therefore serve as a critical tool for detecting vulnerabilities in image rain-removal algorithms, and it could also be developed into a real-world artificial intelligence attack method. Experimental results show that, against current state-of-the-art (SOTA) single-image rain-removal algorithms, URA reduces scene repair capability by 39.5% and image generation quality by 26.4%.
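To make the phrase "non-additive spatial perturbation" concrete, the following is a minimal sketch, not the authors' implementation. It assumes the perturbation can be modelled as a per-pixel displacement (flow) field that resamples the image rather than adding noise to it, and that "universal" means one shared field is applied to every input. The function name `warp_with_flow`, the image size, and the random placeholder flow are all hypothetical; the abstract does not describe how URA actually optimises its perturbation.

```python
import numpy as np

def warp_with_flow(image, flow):
    """Resample `image` (H, W, C) at pixel positions shifted by `flow` (H, W, 2).

    flow[..., 0] is the vertical shift and flow[..., 1] the horizontal shift,
    both in pixels. Bilinear interpolation is used, so the output is a spatial
    rearrangement of the input rather than the input plus additive noise.
    """
    h, w = image.shape[:2]
    ys, xs = np.meshgrid(np.arange(h), np.arange(w), indexing="ij")
    # Source coordinates for every output pixel, clipped to the image bounds.
    src_y = np.clip(ys + flow[..., 0], 0, h - 1)
    src_x = np.clip(xs + flow[..., 1], 0, w - 1)
    y0 = np.floor(src_y).astype(int)
    x0 = np.floor(src_x).astype(int)
    y1 = np.minimum(y0 + 1, h - 1)
    x1 = np.minimum(x0 + 1, w - 1)
    # Fractional offsets act as bilinear interpolation weights.
    wy = (src_y - y0)[..., None]
    wx = (src_x - x0)[..., None]
    top = (1 - wx) * image[y0, x0] + wx * image[y0, x1]
    bottom = (1 - wx) * image[y1, x0] + wx * image[y1, x1]
    return (1 - wy) * top + wy * bottom

rng = np.random.default_rng(0)
# Hypothetical placeholder: a small random flow field. A real attack would
# optimise this field against a rain-removal model instead of sampling it.
flow = rng.uniform(-0.5, 0.5, size=(32, 32, 2))
# Stand-ins for three different rainy input images with values in [0, 1].
images = rng.random((3, 32, 32, 3))
# "Universal": the same flow field is applied to every image.
warped = np.stack([warp_with_flow(img, flow) for img in images])
```

Because each output pixel is a convex combination of neighbouring input pixels, sub-pixel shifts like these keep pixel values within the original range. That is one plausible reason a spatial perturbation could be harder for a human to notice than additive noise of comparable effect, although the abstract itself does not give that explanation.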
