Jihyeun Yoon

Bin-wise Temperature Scaling (BTS): Improvement in Confidence Calibration Performance through Simple Scaling Techniques

Sep 23, 2019

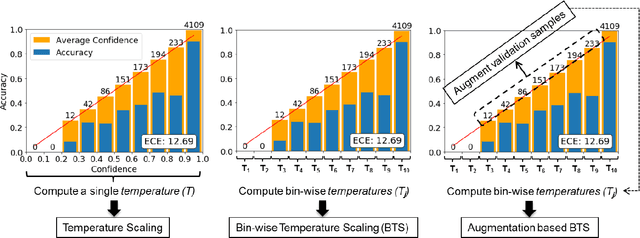

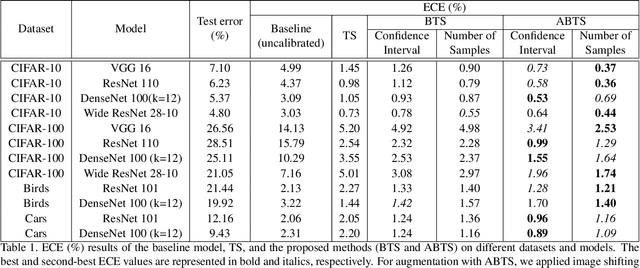

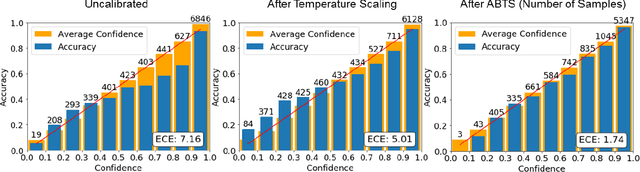

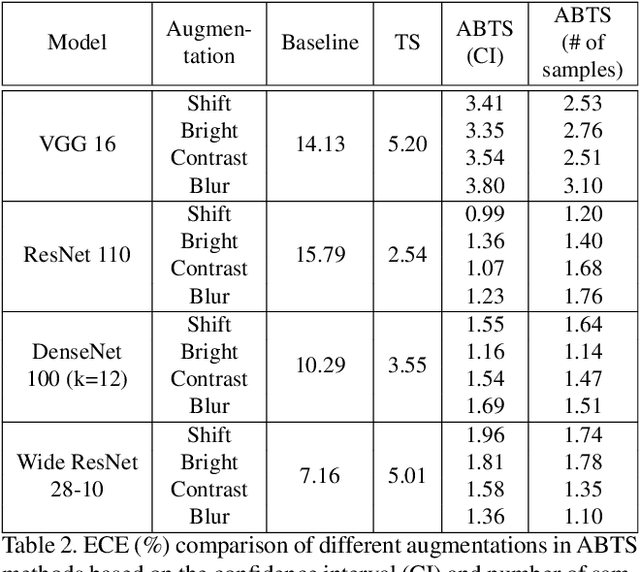

Abstract: The prediction reliability of neural networks is important in many applications. In safety-critical domains such as cancer prediction or autonomous driving, a reliable confidence estimate for a model's prediction is critical for interpreting the results. Modern deep neural networks have achieved significant performance improvements on many image classification tasks. However, these networks tend to be poorly calibrated in terms of output confidence. Temperature scaling is an efficient post-processing calibration scheme that yields well-calibrated results. In this study, we leverage the concept of temperature scaling to build a more sophisticated bin-wise scaling. Furthermore, we augment the validation samples to make the scaling more elaborate. The proposed methods consistently improve calibration performance across various datasets and deep convolutional neural network models.
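For readers unfamiliar with the baseline this abstract builds on, here is a minimal sketch of ordinary single-temperature scaling: the logits are divided by one scalar T, chosen to minimise the negative log-likelihood on held-out data. It is not the paper's bin-wise method, whose details (how bins are formed, how validation samples are augmented) are not given in the abstract. The function names, the grid search, and the toy logits are all assumptions made for illustration.

```python
import math

def softmax(logits, temperature=1.0):
    """Softmax of the logits after dividing them by a scalar temperature."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def nll(logit_rows, labels, temperature):
    """Mean negative log-likelihood of the true labels at a given temperature."""
    losses = [-math.log(softmax(row, temperature)[y])
              for row, y in zip(logit_rows, labels)]
    return sum(losses) / len(losses)

def fit_temperature(logit_rows, labels, candidates):
    """Grid search (an illustrative choice): keep the candidate with lowest NLL."""
    return min(candidates, key=lambda t: nll(logit_rows, labels, t))

# Hypothetical held-out logits: very confident everywhere, wrong on the third sample.
val_logits = [[8.0, 0.0, 0.0], [0.0, 8.0, 0.0], [8.0, 0.0, 0.0], [0.0, 0.0, 8.0]]
val_labels = [0, 1, 1, 2]

best_t = fit_temperature(val_logits, val_labels, [0.5, 1.0, 2.0, 4.0, 8.0])
before = softmax(val_logits[0], 1.0)
after = softmax(val_logits[0], best_t)
```

Because every logit is divided by the same positive scalar, the predicted class is unchanged; only the confidence attached to it moves. On this overconfident toy data the selected temperature is greater than 1, which softens the probabilities.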

Propagated Perturbation of Adversarial Attack for well-known CNNs: Empirical Study and its Explanation

Sep 23, 2019

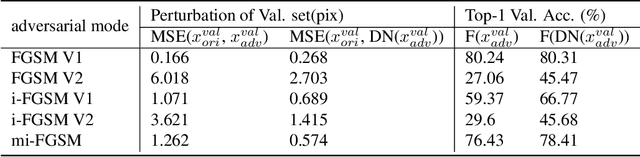

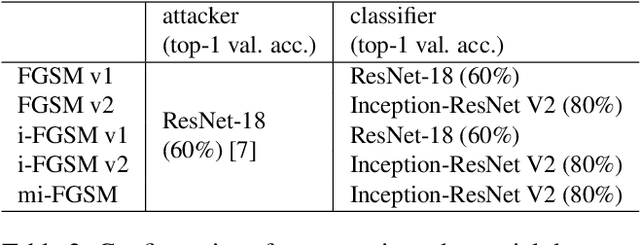

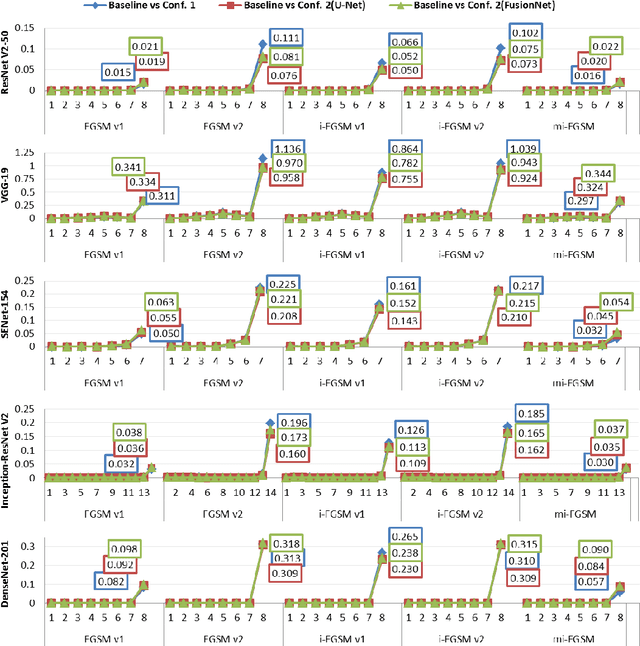

Abstract: Deep neural network based classifiers are known to be vulnerable to input perturbations constructed by an adversarial attack to force misclassification. Most studies have focused on how to craft adversarial noise with gradient-based attack methods or on how to defend models against adversarial attacks. Using a denoiser model is a well-known way to reduce adversarial noise, although classification performance does not improve significantly. In this study, we aim to analyze the propagation of adversarial attacks from an explainable AI (XAI) point of view. Specifically, we examine the trend of adversarial perturbations through CNN architectures. To analyze the propagated perturbation, we measured the normalized Euclidean distance and the cosine distance in each CNN layer between the feature map of the perturbed image passed through a denoiser and that of the non-perturbed original image. We used five well-known CNN-based classifiers and three gradient-based adversarial attacks. From the experimental results, we observed that in most cases the Euclidean distance increases explosively in the final fully connected layer, while the cosine distance fluctuates and disappears at the last layer. This means that a denoiser can decrease the amount of noise; however, it fails to prevent accuracy degradation.
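The two per-layer measurements named in this abstract can be sketched as follows for flattened feature maps. The abstract does not say how the Euclidean distance is normalized, so dividing by the norm of the clean reference features is an assumption made here, and all function names are hypothetical.

```python
import math

def l2_norm(v):
    """Euclidean (L2) norm of a flattened feature map."""
    return math.sqrt(sum(x * x for x in v))

def normalized_euclidean_distance(perturbed, clean):
    """Euclidean distance between two feature maps, divided by the clean
    map's norm. The normalization choice is an assumption, not the paper's."""
    diff = math.sqrt(sum((p - c) ** 2 for p, c in zip(perturbed, clean)))
    return diff / l2_norm(clean)

def cosine_distance(perturbed, clean):
    """One minus the cosine similarity between the two feature maps."""
    dot = sum(p * c for p, c in zip(perturbed, clean))
    return 1.0 - dot / (l2_norm(perturbed) * l2_norm(clean))

# Toy flattened feature maps from one hypothetical layer.
clean_features = [1.0, 0.0]
scaled_features = [2.0, 0.0]    # same direction, larger magnitude
rotated_features = [0.0, 1.0]   # same magnitude, orthogonal direction
```

The two metrics respond to different changes: scaling a feature map changes the Euclidean distance but leaves the cosine distance at zero, while rotating it changes both. That difference is one way the two measurements can diverge at the same layer.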
