Hyemin Jung

Gradient Surgery for One-shot Unlearning on Generative Model

Jul 18, 2023

Abstract: Recent right-to-be-forgotten regulation has sparked considerable interest in unlearning for pre-trained machine learning models. To approximate the straightforward yet expensive approach of retraining from scratch, recent machine unlearning methods unlearn a sample by updating the weights to remove its influence on them. In this paper, we introduce a simple yet effective approach to remove the influence of data on a deep generative model. Inspired by work in multi-task learning, we propose to manipulate gradients to regularize the interplay of influence among samples by projecting gradients onto the normal plane of the gradients to be retained. Our method is agnostic to the statistics of the removal samples and outperforms existing baselines, while providing the first theoretical analysis of unlearning in a generative model.
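The projection step named in the abstract can be illustrated with a minimal sketch. It assumes the standard vector projection used in multi-task gradient surgery: the component of the forget gradient that lies along the retain gradient is subtracted, so the result is orthogonal to the retain gradient. The function name `project_onto_normal_plane` and the toy gradients are hypothetical, and the paper's actual update rule may differ (for example, in how the gradients are computed or whether the projection is applied conditionally).

```python
def project_onto_normal_plane(g_forget, g_retain):
    """Return g_forget with its component along g_retain removed.

    Hypothetical helper for illustration; gradients are flat lists of floats.
    """
    dot = sum(f * r for f, r in zip(g_forget, g_retain))
    norm_sq = sum(r * r for r in g_retain)
    if norm_sq == 0.0:
        # Nothing to project against: leave the gradient unchanged.
        return list(g_forget)
    scale = dot / norm_sq
    return [f - scale * r for f, r in zip(g_forget, g_retain)]


# Toy example: the retain gradient points along the first axis.
g_forget = [1.0, 2.0]
g_retain = [1.0, 0.0]
g_proj = project_onto_normal_plane(g_forget, g_retain)

# The projected gradient has no component along g_retain.
overlap = sum(p * r for p, r in zip(g_proj, g_retain))
```

Because `overlap` is zero, a small step along `g_proj` leaves the retained objective unchanged to first order, which is the intuition behind projecting onto the normal plane.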

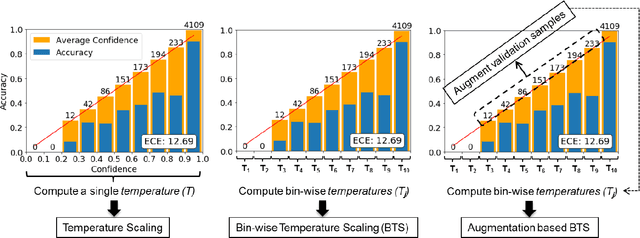

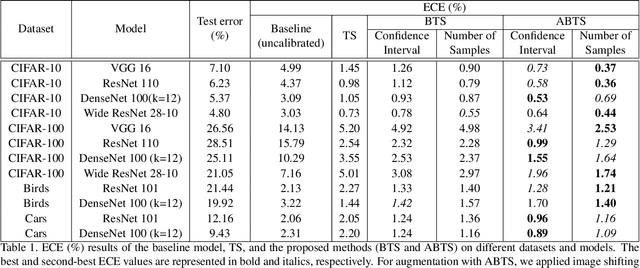

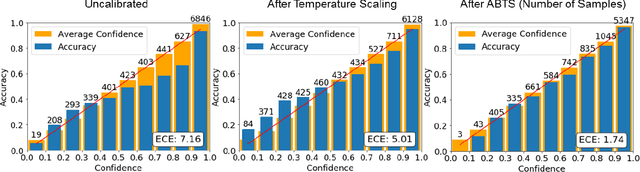

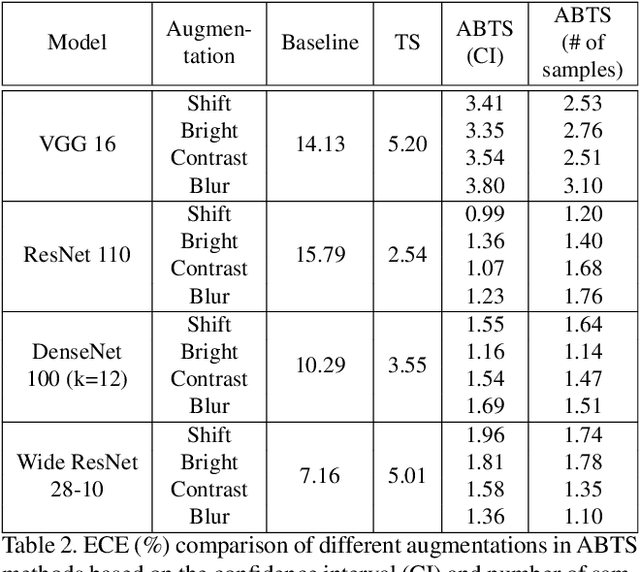

Bin-wise Temperature Scaling (BTS): Improvement in Confidence Calibration Performance through Simple Scaling Techniques

Sep 23, 2019

Abstract: The prediction reliability of neural networks is important in many applications. In safety-critical domains such as cancer prediction or autonomous driving, a reliable confidence estimate for a model's prediction is critical for interpreting the results. Modern deep neural networks have achieved significant performance improvements on many image classification tasks. However, these networks tend to be poorly calibrated in terms of output confidence. Temperature scaling is an efficient post-processing calibration scheme that yields well-calibrated results. In this study, we build on temperature scaling to construct a more sophisticated bin-wise scaling. Furthermore, we augment the validation samples to enable more elaborate scaling. The proposed methods consistently improve calibration performance across various datasets and deep convolutional neural network models.
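As background for the abstract above, here is a minimal sketch of plain (global) temperature scaling, not the bin-wise variant the paper proposes. It assumes a single temperature `T` is chosen by grid search to minimize negative log-likelihood on held-out validation logits. The helper names, the candidate grid, and the toy logits are all hypothetical. A bin-wise scheme would presumably fit a separate temperature per confidence bin, but the abstract does not give those details.

```python
import math


def softmax(logits, temperature=1.0):
    """Softmax over logits divided by a temperature (numerically stable)."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def nll(logit_rows, labels, temperature):
    """Mean negative log-likelihood of the true labels at a given temperature."""
    losses = [-math.log(softmax(row, temperature)[y])
              for row, y in zip(logit_rows, labels)]
    return sum(losses) / len(losses)


def fit_temperature(logit_rows, labels, candidates):
    """Pick the candidate temperature with the lowest validation NLL."""
    return min(candidates, key=lambda t: nll(logit_rows, labels, t))


# Toy validation set: an overconfident model that makes one confident mistake.
val_logits = [[4.0, 0.0], [3.0, 0.0], [5.0, 0.0], [4.0, 0.0]]
val_labels = [0, 0, 0, 1]
candidates = [0.5 + 0.25 * i for i in range(19)]  # 0.5, 0.75, ..., 5.0

best_t = fit_temperature(val_logits, val_labels, candidates)
conf_before = max(softmax(val_logits[0], 1.0))
conf_after = max(softmax(val_logits[0], best_t))
```

Dividing the logits by a temperature above 1 softens the predicted probabilities without changing the predicted class, which is why this kind of scaling can improve calibration while leaving accuracy untouched.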
