Jihyeong Jung

Self-Explainable Temporal Graph Networks based on Graph Information Bottleneck

Jun 19, 2024

Abstract: Temporal Graph Neural Networks (TGNNs) capture both the graph topology and the dynamic dependencies of interactions within a graph over time. There is a growing need to explain the predictions of TGNN models, because it is difficult to identify how past events influence those predictions. Explanation models for static graphs cannot capture temporal dependencies and therefore cannot be readily applied to temporal graphs, so recent studies have proposed explanation models designed for temporal graphs. However, existing explanation models for temporal graphs rely on post-hoc explanations, which require separate models for prediction and explanation and are therefore limited in two aspects: the efficiency and the accuracy of the explanation. In this work, we propose a novel built-in explanation framework for temporal graphs, called Self-Explainable Temporal Graph Networks based on Graph Information Bottleneck (TGIB). TGIB provides explanations for event occurrences by introducing stochasticity in each temporal event based on Information Bottleneck theory. Experimental results demonstrate the superiority of TGIB over state-of-the-art methods in terms of both link prediction performance and explainability. This is the first work that simultaneously performs prediction and explanation for temporal graphs in an end-to-end manner.
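For readers unfamiliar with the Information Bottleneck principle that the abstract refers to, its generic objective can be written as below. This is the standard textbook form, not necessarily the exact loss used by TGIB; the symbols are assumptions introduced here for illustration: $X$ is the input (for example, the candidate past events), $Y$ is the prediction target (for example, whether an event occurs), $Z$ is the stochastic compressed representation, and $\beta > 0$ trades off prediction against compression.

```latex
\min_{p(Z \mid X)} \; -I(Y; Z) + \beta \, I(X; Z)
```

The first term rewards representations that remain informative about the target, while the second penalizes retaining information from the input that the prediction does not need.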

Unsupervised Episode Generation for Graph Meta-learning

Jun 27, 2023

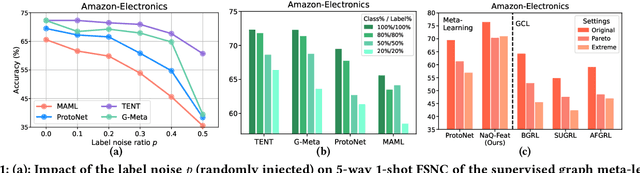

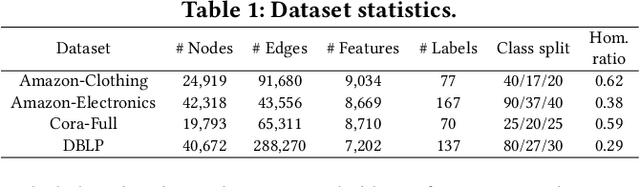

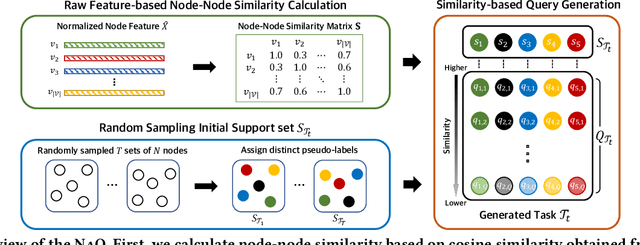

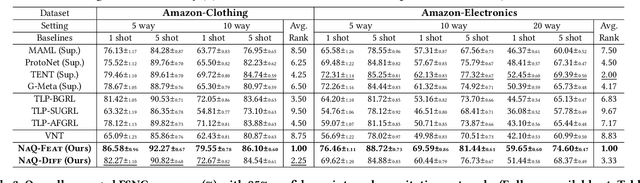

Abstract: In this paper, we investigate unsupervised episode generation methods to solve the Few-Shot Node-Classification (FSNC) problem via meta-learning without labels. Dominant meta-learning methodologies for FSNC were developed under the assumption that abundant labeled nodes are available for training, which may not be obtainable in the real world. Although a few studies have tackled the label-scarcity problem, they still rely on a limited amount of labeled data, which hinders full utilization of the information in all nodes of a graph. Self-Supervised Learning (SSL) approaches are effective on FSNC without labels, but they mainly learn generic node embeddings without considering the downstream task to be solved, which may limit their performance. In this work, we propose unsupervised episode generation methods that benefit from the generalization ability of meta-learning for FSNC tasks while resolving the label-scarcity problem. We first propose g-UMTRA, a method that uses graph augmentation to generate training episodes. It has two drawbacks: 1) increased training time due to the computation of augmented features and 2) low applicability to existing baselines. Hence, we propose Neighbors as Queries (NaQ), which generates episodes from structural neighbors found by graph diffusion. Our proposed methods are model-agnostic: they can be plugged into any existing graph meta-learning model without sacrificing much of its performance, and sometimes even improve it. We provide theoretical insights into why our unsupervised episode generation methodologies work, and extensive experimental results demonstrate the potential of our unsupervised episode generation methods for graph meta-learning on FSNC problems.
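The idea of building label-free episodes from diffusion neighbors can be illustrated with a minimal sketch. This is not the authors' implementation: the choice of Personalized PageRank as the diffusion, the top-Q neighbor selection, and the names `ppr_diffusion`, `generate_episode`, `alpha`, `n_way`, and `q_query` are all assumptions made here for illustration.

```python
import numpy as np


def ppr_diffusion(adj, alpha=0.15):
    """Dense Personalized PageRank diffusion (an assumed choice of diffusion).

    Computes S = alpha * (I - (1 - alpha) * A_norm)^-1, where A_norm is the
    symmetrically normalized adjacency matrix.
    """
    adj = np.asarray(adj, dtype=float)
    deg = adj.sum(axis=1)
    # Isolated nodes (degree 0) get a zero scaling factor instead of 1/0.
    inv_sqrt = np.where(deg > 0, 1.0 / np.sqrt(np.maximum(deg, 1e-12)), 0.0)
    a_norm = inv_sqrt[:, None] * adj * inv_sqrt[None, :]
    n = adj.shape[0]
    return alpha * np.linalg.inv(np.eye(n) - (1.0 - alpha) * a_norm)


def generate_episode(diffusion, n_way, q_query, rng):
    """Build one N-way episode without using any node labels.

    Each randomly sampled support node acts as its own pseudo-class, and its
    q_query highest-scoring diffusion neighbors serve as that class's queries.
    """
    n = diffusion.shape[0]
    support = rng.choice(n, size=n_way, replace=False)
    scores = diffusion[support].copy()
    # A support node must not be selected as its own query.
    scores[np.arange(n_way), support] = -np.inf
    queries = np.argsort(-scores, axis=1)[:, :q_query]
    pseudo_labels = np.arange(n_way)  # pseudo-class i belongs to support[i]
    return support, queries, pseudo_labels


# Toy usage on an 8-node ring graph.
n_nodes = 8
ring = np.zeros((n_nodes, n_nodes))
for i in range(n_nodes):
    ring[i, (i + 1) % n_nodes] = 1.0
    ring[(i + 1) % n_nodes, i] = 1.0

S = ppr_diffusion(ring)
support, queries, pseudo_labels = generate_episode(
    S, n_way=3, q_query=2, rng=np.random.default_rng(0)
)
```

An episode built this way has the same shape as a supervised one (support set, query set, class indices), which is what would let it feed an existing meta-learner unchanged. The dense matrix inverse is only practical for small graphs; a sparse or approximate diffusion would be needed at scale.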
