Jiawei Wen

Revenue Maximization of Airbnb Marketplace using Search Results

Nov 16, 2019

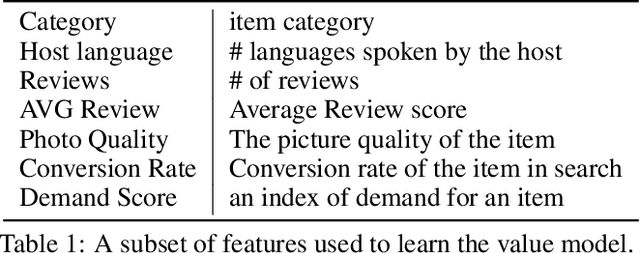

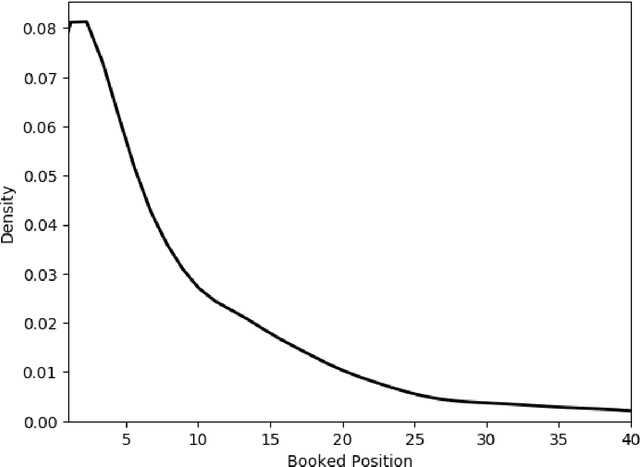

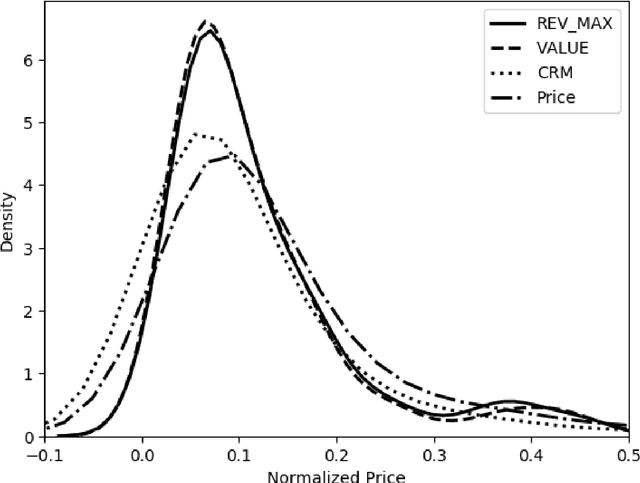

Abstract: Correctly pricing products or services in an online marketplace is a challenging problem and one of the critical factors for the success of the business. When users are looking to buy an item, they typically search for it. Query relevance models are used at this stage to retrieve and rank the items on the search page from most relevant to least relevant. The presented items are naturally "competing" against each other for user purchases. We provide a practical two-stage model to price this set of retrieved items, for which distributions of their values are learned. The initial output of the pricing strategy is a price vector for the top displayed items in one search event. We later aggregate these results over searches to provide the supplier with the optimal price for each item. We applied our solution to large-scale search data obtained from the Airbnb Experiences marketplace. Offline evaluation results show that our strategy improves upon baseline pricing strategies on key metrics by at least 20% in terms of booking regret and 55% in terms of revenue potential.

ET-Lasso: Efficient Tuning of Lasso for High-Dimensional Data

Oct 10, 2018

Abstract: L1 regularization (Lasso) has proven to be a versatile tool for selecting relevant features and estimating the model coefficients simultaneously. Despite its popularity, it is very challenging to guarantee the feature selection consistency of Lasso. One way to improve the feature selection consistency is to select an ideal tuning parameter. Traditional tuning criteria, such as cross-validation and BIC, mainly focus on minimizing the estimated prediction error or maximizing the posterior model probability; they may be time-consuming or fail to control the false discovery rate (FDR) when the number of features is extremely large. Another way is to introduce pseudo-features to learn the importance of the original ones. Recently, the Knockoff filter was proposed to control the FDR when performing feature selection. However, its performance is sensitive to the choice of the expected FDR threshold. Motivated by these ideas, we propose a new method that uses pseudo-features to obtain an ideal tuning parameter. In particular, we present the Efficient Tuning of Lasso (ET-Lasso), which separates active and inactive features by adding permuted features as pseudo-features in linear models. The pseudo-features are constructed to be inactive by nature, so they can be used to obtain a cutoff for selecting the tuning parameter that separates active and inactive features. Experimental studies on both simulations and real-world data applications show that ET-Lasso can effectively and efficiently select active features under a wide range of scenarios.
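The permuted pseudo-feature idea in the ET-Lasso abstract can be illustrated with a short sketch. This is a simplified illustration under stated assumptions, not the authors' algorithm: the cutoff rule (the largest penalty at which any permuted feature enters the Lasso path), the plain coordinate-descent solver, the penalty grid, and all function names (`lasso_cd`, `entry_lambdas`, `select_with_permuted_features`) are hypothetical choices made for this example.

```python
import numpy as np

def lasso_cd(X, y, lam, beta, n_sweeps=200, tol=1e-8):
    """Coordinate descent for (1/2n)||y - X b||^2 + lam * ||b||_1.
    Assumes every column of X has mean 0 and mean square 1."""
    n, p = X.shape
    resid = y - X @ beta
    for _ in range(n_sweeps):
        max_change = 0.0
        for j in range(p):
            old = beta[j]
            rho = X[:, j] @ resid / n + old
            new = np.sign(rho) * max(abs(rho) - lam, 0.0)  # soft threshold
            if new != old:
                resid -= X[:, j] * (new - old)
                beta[j] = new
                max_change = max(max_change, abs(new - old))
        if max_change < tol:
            break
    return beta

def entry_lambdas(X, y, n_lambdas=50):
    """Largest penalty on a decreasing grid at which each feature becomes nonzero
    (0 if the feature never enters)."""
    n, p = X.shape
    lam_max = np.max(np.abs(X.T @ y)) / n  # all coefficients are zero at lam_max
    entry = np.zeros(p)
    beta = np.zeros(p)
    for lam in lam_max * np.logspace(0, -2, n_lambdas):
        beta = lasso_cd(X, y, lam, beta)  # warm start from the previous solution
        entry[(beta != 0) & (entry == 0)] = lam
    return entry

def select_with_permuted_features(X, y, seed=0):
    """Assumed cutoff rule: keep original features that enter the path
    before any permuted (inactive by construction) pseudo-feature."""
    rng = np.random.default_rng(seed)
    Xs = (X - X.mean(axis=0)) / X.std(axis=0)
    yc = y - y.mean()
    X_perm = Xs[rng.permutation(len(yc))]  # row shuffle breaks the link to y
    entry = entry_lambdas(np.hstack([Xs, X_perm]), yc)
    p = X.shape[1]
    cutoff = entry[p:].max()
    return np.flatnonzero(entry[:p] > cutoff), cutoff

# Synthetic demo: only features 0, 1, 2 are active.
rng = np.random.default_rng(1)
X = rng.standard_normal((200, 20))
y = 3.0 * X[:, 0] - 2.0 * X[:, 1] + 1.5 * X[:, 2] + rng.standard_normal(200)
selected, cutoff = select_with_permuted_features(X, y)
print("selected:", selected, "cutoff:", cutoff)
```

On this synthetic data the three active features have strong signals, so they enter the path well before the pseudo-features; an inactive original feature can still slip in ahead of the cutoff, which is why the sketch makes no claim about false discovery control.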
