Jiawei Fan

ScaleKD: Strong Vision Transformers Could Be Excellent Teachers

Nov 11, 2024

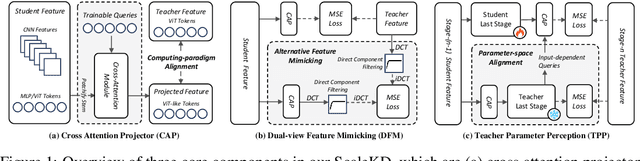

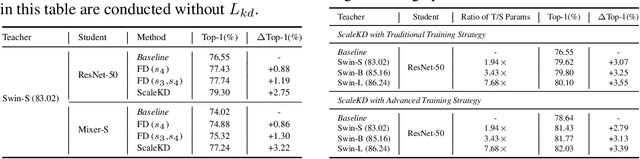

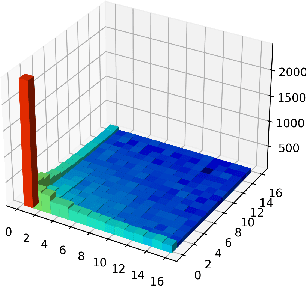

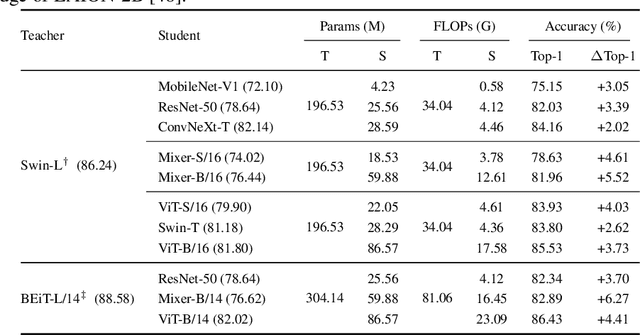

Abstract: In this paper, we ask whether well pre-trained vision transformer (ViT) models can be used as teachers that exhibit scalable properties to advance cross-architecture knowledge distillation (KD) research, in the context of using large-scale datasets for evaluation. To make this possible, our analysis underlines the importance of seeking effective strategies to align (1) feature computing paradigm differences, (2) model scale differences, and (3) knowledge density differences. By combining three coupled components, namely a cross attention projector, dual-view feature mimicking, and teacher parameter perception, each tailored to address the above problems, we present a simple and effective KD method called ScaleKD. Our method can train student backbones that span a variety of convolutional neural network (CNN), multi-layer perceptron (MLP), and ViT architectures on image classification datasets, achieving state-of-the-art distillation performance. For instance, taking a well pre-trained Swin-L as the teacher model, our method gets 75.15%|82.03%|84.16%|78.63%|81.96%|83.93%|83.80%|85.53% top-1 accuracies for MobileNet-V1|ResNet-50|ConvNeXt-T|Mixer-S/16|Mixer-B/16|ViT-S/16|Swin-T|ViT-B/16 models trained on the ImageNet-1K dataset from scratch, showing 3.05%|3.39%|2.02%|4.61%|5.52%|4.03%|2.62%|3.73% absolute gains over the individually trained counterparts. Intriguingly, when scaling up the size of teacher models or their pre-training datasets, our method showcases the desired scalable properties, bringing increasingly larger gains to student models. The student backbones trained by our method transfer well to the downstream MS-COCO and ADE20K datasets. More importantly, our method could be used as a more efficient alternative to the time-intensive pre-training paradigm for any target student model if a strong pre-trained ViT is available, reducing the number of viewed training samples by up to 195x.
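The pipe-separated lists in the abstract pair up by position, which can be hard to read at a glance. The short sketch below aligns each student model with its reported accuracy and absolute gain, and derives the implied baseline accuracy by subtraction. The baseline figures are not stated in the abstract; they are computed here only from the two reported lists, and the function name `implied_baselines` is a hypothetical helper.

```python
# Values copied from the abstract; the baselines are derived, not reported.
students = (
    "MobileNet-V1|ResNet-50|ConvNeXt-T|Mixer-S/16|"
    "Mixer-B/16|ViT-S/16|Swin-T|ViT-B/16"
).split("|")
distilled = [75.15, 82.03, 84.16, 78.63, 81.96, 83.93, 83.80, 85.53]  # top-1 %, Swin-L teacher
gains = [3.05, 3.39, 2.02, 4.61, 5.52, 4.03, 2.62, 3.73]  # absolute gain in points


def implied_baselines(distilled_acc, absolute_gains):
    """Return distilled accuracy minus absolute gain, rounded to two decimals."""
    return [round(d - g, 2) for d, g in zip(distilled_acc, absolute_gains)]


baselines = implied_baselines(distilled, gains)

if __name__ == "__main__":
    print(f"{'student':<14}{'baseline':>10}{'ScaleKD':>10}{'gain':>8}")
    for name, base, acc, gain in zip(students, baselines, distilled, gains):
        print(f"{name:<14}{base:>10.2f}{acc:>10.2f}{gain:>8.2f}")
```

Read this way, the largest gains in the list go to the two Mixer students, and the smallest to ConvNeXt-T.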

Development of a Platform to Enable Real Time, Non-disruptive Testing and Early Fault Detection of Critical High Voltage Transformers and Switchgears in High Speed-rail

Oct 01, 2024

Abstract: Partial discharge (PD) incidents can occur in critical components of high-speed rail electric systems, such as transformers and switchgears, due to localized insulation defects that cannot withstand electric stress, leading to potential flashovers. These incidents can escalate over time, resulting in breakdowns, downtime, and safety risks. Fortunately, PD activities emit radio frequency (RF) signals, allowing for the development of a hardware platform for real-time, non-invasive PD detection and monitoring. The system uses an RF antenna and high-speed data acquisition to scan signals across a configurable frequency range (100 MHz to 3 GHz), utilizing intermediate frequency modulation and sliding frequency windows for detailed analysis. When signals exceed a threshold, the system records the events, capturing both raw signal data and spectrum snapshots. Real-time data is streamed to a cloud server, offering remote access through a dedicated smartphone application, enabling maintenance teams to monitor and respond promptly. Laboratory testing has confirmed the system's ability to accurately capture RF signals and provide real-time PD monitoring, enhancing the reliability and safety of high-speed rail infrastructure.
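The scan-and-threshold logic described in this abstract might be sketched roughly as follows. This is an illustration under stated assumptions, not the platform's actual implementation: the window width, step, threshold value, and the function names `sliding_windows` and `detect_events` are all hypothetical, and the spectrum is given as precomputed (frequency, power) samples rather than read from hardware.

```python
from typing import Iterator, List, Tuple

Window = Tuple[float, float]
Sample = Tuple[float, float]  # (frequency in Hz, power in dBm)


def sliding_windows(start_hz: float, stop_hz: float,
                    width_hz: float, step_hz: float) -> Iterator[Window]:
    """Yield (low, high) frequency windows that sweep [start_hz, stop_hz)."""
    low = start_hz
    while low < stop_hz:
        yield (low, min(low + width_hz, stop_hz))
        low += step_hz


def detect_events(spectrum: List[Sample], windows: List[Window],
                  threshold_dbm: float) -> List[Tuple[float, float, float]]:
    """Return (low, high, peak) for each window whose peak power exceeds the threshold."""
    events = []
    for low, high in windows:
        in_band = [power for freq, power in spectrum if low <= freq < high]
        if in_band and max(in_band) > threshold_dbm:
            events.append((low, high, max(in_band)))
    return events


if __name__ == "__main__":
    # Assumed settings: 500 MHz windows stepped by 500 MHz over 100 MHz to 3 GHz.
    windows = list(sliding_windows(100e6, 3e9, 500e6, 500e6))
    # Made-up samples for illustration only.
    spectrum = [(150e6, -90.0), (1.2e9, -40.0), (2.9e9, -85.0)]
    for low, high, peak in detect_events(spectrum, windows, threshold_dbm=-60.0):
        print(f"event in {low / 1e6:.0f}-{high / 1e6:.0f} MHz, peak {peak} dBm")
```

In a real deployment the recorded event would also carry the raw signal data and a spectrum snapshot, as the abstract describes; the sketch keeps only the window bounds and peak power.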

Augmentation-Free Dense Contrastive Knowledge Distillation for Efficient Semantic Segmentation

Dec 07, 2023

Abstract: In recent years, knowledge distillation methods based on contrastive learning have achieved promising results on image classification and object detection tasks. However, in this line of research, we note that less attention is paid to semantic segmentation. Existing methods rely heavily on data augmentation and memory buffers, which entail high computational resource demands when applied to semantic segmentation, a task that requires preserving high-resolution feature maps for making dense pixel-wise predictions. To address this problem, we present Augmentation-free Dense Contrastive Knowledge Distillation (Af-DCD), a new contrastive distillation learning paradigm to train compact and accurate deep neural networks for semantic segmentation applications. Af-DCD leverages a masked feature mimicking strategy and formulates a novel contrastive learning loss by taking advantage of tactful feature partitions across both channel and spatial dimensions, allowing it to effectively transfer dense and structured local knowledge learnt by the teacher model to a target student model while maintaining training efficiency. Extensive experiments on five mainstream benchmarks with various teacher-student network pairs demonstrate the effectiveness of our approach. For instance, the DeepLabV3-Res18|DeepLabV3-MBV2 model trained by Af-DCD reaches 77.03%|76.38% mIoU on the Cityscapes dataset when choosing DeepLabV3-Res101 as the teacher, setting new performance records. In addition, Af-DCD achieves an absolute mIoU improvement of 3.26%|3.04%|2.75%|2.30%|1.42% compared with the individually trained counterparts on Cityscapes|Pascal VOC|Camvid|ADE20K|COCO-Stuff-164K. Code is available at https://github.com/OSVAI/Af-DCD
